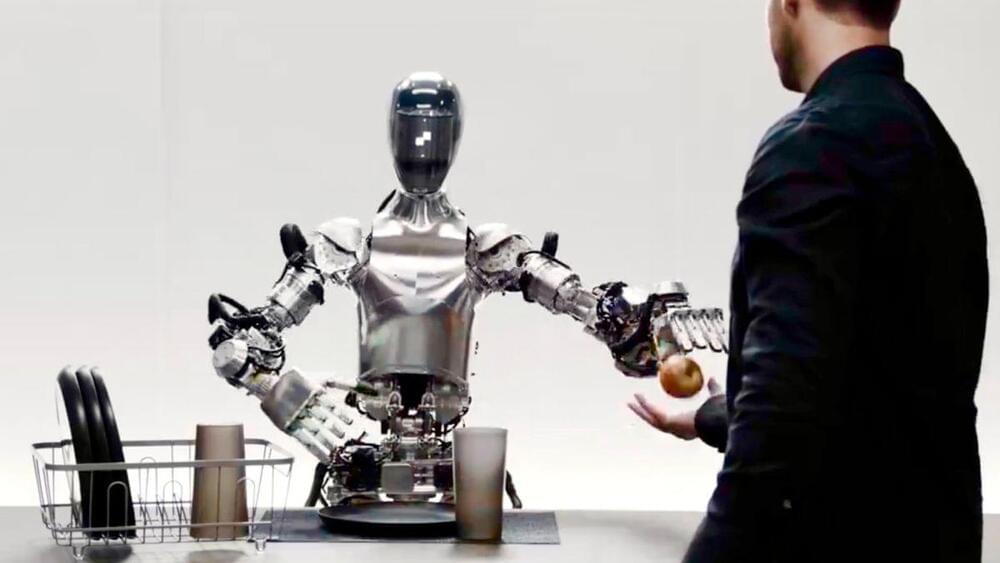

Figure 1 learned how to make coffee by watching a human do it, and now it can speak to you like a person.

Yay :3

Mercedes-Benz is partnering with U.S.-based robotics firm Apptronik to explore ways that the latter’s humanoid robots can be used at its factories.

A month ago, we were impressed by these robots being able to pick things up, put them in the right spot, open doors and charge themselves. But new video released hours ago makes it clear that autonomous humanoid work is starting to accelerate like mad.

Norwegian robotics company 1X is OpenAI’s other bet in the humanoid game – the bigger recent headline being its investment in, and collaboration with, American company Figure.

1X’s Eve robots don’t have legs; they roll around on wheeled platforms. They don’t have the extraordinary hands that companies like Sanctuary AI, Figure and Tesla are making, settling instead for stumpy-looking claw grippers. And they’re pretty underwhelming to look at, too – their smiley faces, frankly, look silly, and as we’ve noted before, they’re draped in little tracksuity arrangements that make them appear like they’re late for their luge race.

AI image generation company Stability AI is in big trouble.

Several key AI developers who worked on Stable Diffusion, the company’s popular text-to-image generator, have resigned, Forbes reports.

Stability AI CEO Emad Mostaque announced the news during an all-hands meeting last week, per Forbes, revealing that three of the five researchers who originally created the foundational tech that powers Stable Diffusion at two German universities had left.

Importantly, the superior temporal sulcus (STS) and superior temporal gyrus (STG) are considered core areas for multisensory integration (17, 38), including for olfactory–visual integration (44). The STS was significantly connected to the IPS during multisensory integration, as indicated by the PPI analysis (Fig. 3) focusing on functional connectivity of IPS and whole-brain activation. Likewise, the anterior and middle cingulate cortex, precuneus, and hippocampus/amygdala were activated when testing sickness-cue integration-related whole-brain functional connectivity with the IPS but were not activated when previously testing for unisensory odor or face sickness perception. In this context, hippocampus/amygdala activation may represent the involvement of an associative neural network responding to threat (24) represented by a multisensory sickness signal. This notion supports the earlier assumption of olfactory-sickness–driven OFC and MDT activation, suggested to be part of a neural circuitry serving disease avoidance. Last, the middle cingulate cortex has recently been found to exhibit enhanced connectivity with the anterior insula during a first-hand experience of LPS-induced inflammation (26), and this enhancement has been interpreted as a potential neurophysiological mechanism involved in the brain’s sickness response. Applied to the current data, the middle cingulate cortex, in the context of multisensory-sickness–driven associations between IPS and whole-brain activations, may indicate a shared representation of an inflammatory state and associated discomfort.

In conclusion, the present study shows how subtle and early olfactory and visual sickness cues interact through cortical activation and may influence humans’ approach–avoidance tendencies. The study provides support for sensory integration of information from cues of visual and olfactory sickness in cortical multisensory convergence zones as being essential for the detection and evaluation of sick individuals. Both olfaction and vision, separately and following sensory integration, may thus be important parts of circuits handling imminent threats of contagion, motivating the avoidance of sick conspecifics (3, 5).

From Beijing Institute for General Artificial Intelligence (BIGAI), Peking University, Carnegie Mellon University, and Tsinghua University.

🦁 Introducing LEO: an embodied generalist agent in 3D World🌎

Everything 👉 http://embodied-generalist.github.io

Elon Musk made some striking predictions about the impact of artificial intelligence (AI) on jobs and income at the inaugural AI Safety Summit in the U.K. in November.

The serial entrepreneur and CEO painted a utopian vision where AI renders traditional employment obsolete but provides an “age of abundance” through a system of “universal high income.”

“It’s hard to say exactly what that moment is, but there will come a point where no job is needed,” Musk told U.K. Prime Minister Rishi Sunak. “You can have a job if you want to have a job or sort of personal satisfaction, but the AI will be able to do everything.”

Artificial general intelligence (AGI) — often referred to as “strong AI,” “full AI,” “human-level AI” or “general intelligent action” — represents a significant future leap in the field of artificial intelligence. Unlike narrow AI, which is tailored for specific tasks, such as detecting product flaws, summarizing the news, or building you a website, AGI will be able to perform a broad spectrum of cognitive tasks at or above human levels. Addressing the press this week at Nvidia’s annual GTC developer conference, CEO Jensen Huang appeared to be getting really bored of discussing the subject — not least because he finds himself misquoted a lot, he says.

The frequency of the question makes sense: The concept raises existential questions about humanity’s role in and control of a future where machines can outthink, outlearn and outperform humans in virtually every domain. The core of this concern lies in the unpredictability of AGI’s decision-making processes and objectives, which might not align with human values or priorities (a concept explored in depth in science fiction since at least the 1940s). There’s concern that once AGI reaches a certain level of autonomy and capability, it might become impossible to contain or control, leading to scenarios where its actions cannot be predicted or reversed.

When sensationalist press asks for a timeframe, it is often baiting AI professionals into putting a timeline on the end of humanity — or at least the current status quo. Needless to say, AI CEOs aren’t always eager to tackle the subject.