A new approach to monitoring arachnid behavior could help researchers understand arachnids' social dynamics, as well as the health of their habitats.

Oriole Networks, a UCL spinout, has raised £10mn in seed funding to build AI “super brains” that promise to accelerate the training of Large Language Models (LLMs).

Founded in 2023 by UCL scientists, the startup has developed a new method that uses light to connect thousands of AI chips. The result is a network of chips in which the computing power of each individual GPU is combined to form a "super brain."

According to James Regan, CEO at Oriole Networks, this “enables the direct connection of a very large number of nodes enabling it to function as a single machine.”

The advanced civilization in my story has harnessed the power of many of the stars in its galaxy and uses them for different purposes, one being Matrioshka brains. Some of these supercomputers will run the AI in the real world and perform other calculations; others will run detailed virtual worlds. The earliest simulations will be computer-simulated worlds containing artificial life, but later the advanced species will try to create simulations down to the subatomic level.

It has been stated that a Matrioshka brain using the full output of the Sun could simulate one trillion to one quadrillion minds; I am not sure how this translates into how much world or simulation space can exist, or at what level of detail. I believe the Sun's output is $3.86 \cdot 10^{26}$ W and our galaxy's is $4 \cdot 10^{58}$ W. However, 400 billion stars each as bright as the Sun would give only about $4 \cdot 10^{11} \times 3.86 \cdot 10^{26} \approx 1.5 \cdot 10^{38}$ W, so I am not sure how much of that energy comes from sources other than the stars.
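To put the quoted figures side by side, here is a minimal back-of-envelope sketch. It assumes, purely for illustration, that every one of the 400 billion stars is as bright as the Sun (most real stars are dimmer red dwarfs, so this overestimates starlight), and it divides the Sun's output by the quoted range of simulated minds. The constant and function names are hypothetical.

```python
# Back-of-envelope energy budget using the figures quoted in the text.
# Assumption (ours, for illustration): every star is as bright as the Sun.
# Most stars are dimmer red dwarfs, so this overestimates the stellar total.

SUN_OUTPUT_W = 3.86e26          # solar output quoted in the text (W = J/s)
STARS_IN_GALAXY = 400e9         # star count quoted in the text
QUOTED_GALAXY_OUTPUT_W = 4e58   # galactic output quoted in the text (W)
MINDS_LOW = 1e12                # "1 trillion" minds per Sun-powered brain
MINDS_HIGH = 1e15               # "a quadrillion" minds per Sun-powered brain


def stellar_total_w(stars: float, output_per_star_w: float) -> float:
    """Total power in watts if every star emits output_per_star_w."""
    return stars * output_per_star_w


def watts_per_mind(output_w: float, minds: float) -> float:
    """Power budget per simulated mind, in watts."""
    return output_w / minds


total_w = stellar_total_w(STARS_IN_GALAXY, SUN_OUTPUT_W)
gap = QUOTED_GALAXY_OUTPUT_W / total_w  # how far the quoted figure exceeds starlight

print(f"400 billion Sun-like stars: {total_w:.2e} W")
print(f"quoted galactic figure is {gap:.1e} times larger")
print(f"power per mind: {watts_per_mind(SUN_OUTPUT_W, MINDS_HIGH):.2e} "
      f"to {watts_per_mind(SUN_OUTPUT_W, MINDS_LOW):.2e} W")
```

Under these assumptions starlight totals roughly $1.5 \cdot 10^{38}$ W, about twenty orders of magnitude below the quoted galactic figure, and each simulated mind would get roughly $3.9 \cdot 10^{11}$ to $3.9 \cdot 10^{14}$ W of the Sun's output.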

If we set aside the uncertainty of subatomic particles, the observable universe contains about $10^{80}$ particles in a space of about $10^{185}$ Planck volumes. If we use time frames of $10^{-13}$ seconds, this gives $10^{13}$ time frames per real second. With $10^{80}$ particles there can be up to $(10^{80})^2 = 10^{160}$ interactions per time frame in a full simulation, but a simulation in which only the observed or observable details need to be simulated could run on far less computing power.
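The scaling in the paragraph above can be checked with exact integer arithmetic. This is a minimal sketch under our own assumption (not stated in the source) that the $10^{160}$ figure counts every ordered pair of particles once per $10^{-13}$ s frame; it also shows the distinct-pair count, which is about half as large. The helper names are hypothetical.

```python
# Order-of-magnitude arithmetic for a full particle-level simulation,
# using exact integers so no precision is lost at these scales.
# Assumption (ours, hypothetical model): the 10^160 figure counts every
# ordered pair of particles once per time frame.

PARTICLES = 10**80          # particles in the observable universe (quoted)
FRAME_EXPONENT = -13        # one time frame lasts 10^-13 s (quoted)


def power_of_ten(n: int) -> int:
    """Exponent of an exact power of ten, e.g. 10**13 -> 13."""
    return len(str(n)) - 1


def frames_per_second(exponent: int) -> int:
    """How many frames of length 10**exponent seconds fit in one second."""
    return 10 ** (-exponent)


frames = frames_per_second(FRAME_EXPONENT)
ordered_pairs = PARTICLES ** 2                      # n^2, the ~10^160 figure
unordered_pairs = PARTICLES * (PARTICLES - 1) // 2  # distinct pairs, ~n^2 / 2
per_real_second = ordered_pairs * frames

print(f"frames per real second: 10^{power_of_ten(frames)}")
print(f"interactions per frame: 10^{power_of_ten(ordered_pairs)}")
print(f"distinct pairs per frame: {unordered_pairs:.1e}")
print(f"interactions per real second: 10^{power_of_ten(per_real_second)}")
```

Multiplying the two figures gives $10^{160} \times 10^{13} = 10^{173}$ interactions per real second for the naive full simulation.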

Thesis:

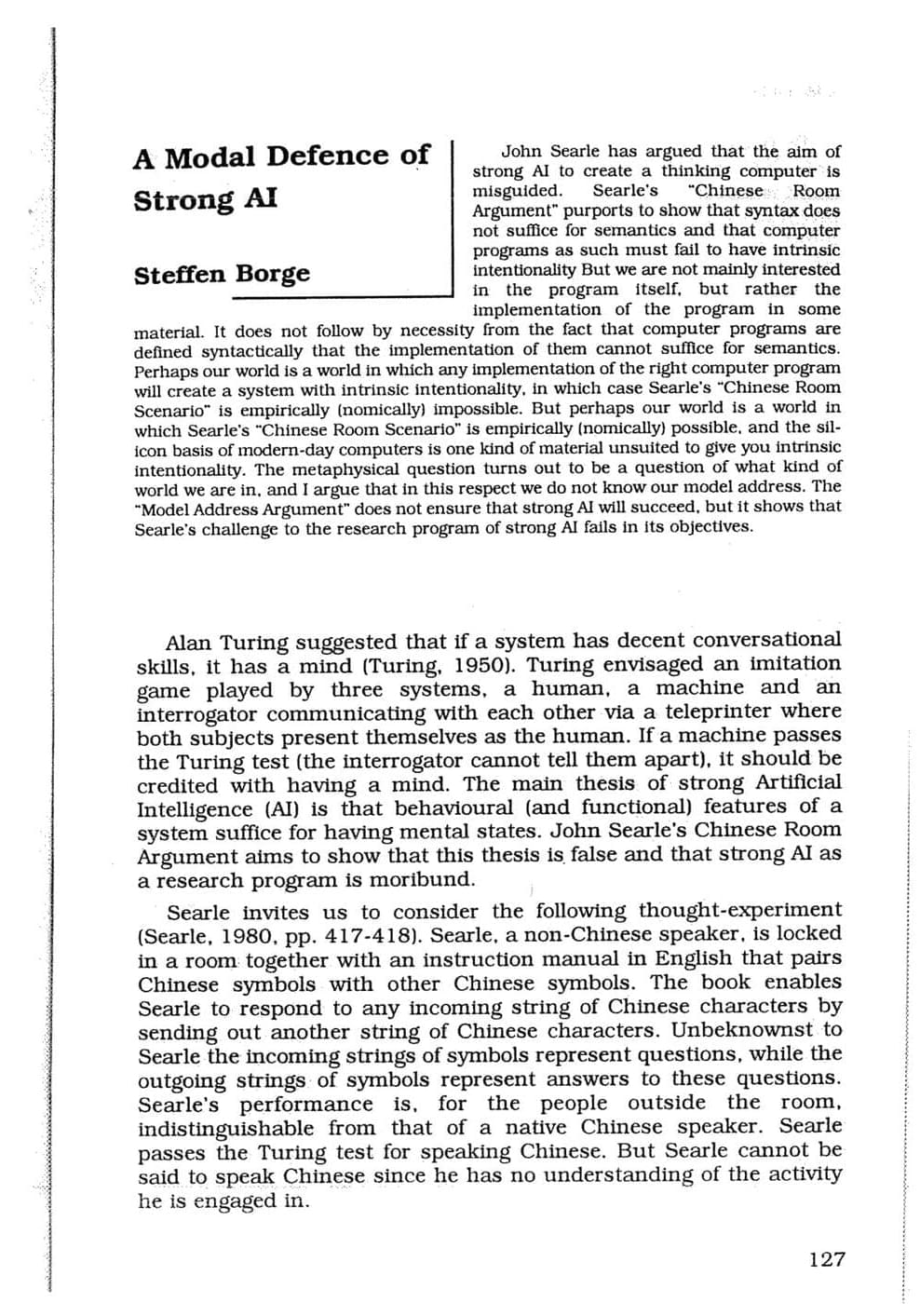

Part I: It has been proven that the human mind cannot be analogous to an electronic (or any other type of) computer, and the functioning of an intellective mind cannot be reproduced (though it can certainly be simulated) by any type of mechanical device, including modern artificial intelligence systems.

Part II: It is further impossible that the human mind is a purely material thing (including some “emergent property” of matter).


Mental health chatbots can help treat symptoms of depression, according to findings from an NTU research team. These apps interact with users, showing empathy and encouragement to improve their mood. CNA spoke to Dr Laura Martinengo, Research Fellow at the Lee Kong Chian School of Medicine at NTU.
