A web-based vibe coding tool that removes barriers and empowers creators worldwide to develop with artificial intelligence. FOR IMMEDIATE RELEASE — Press Release Is Available On PR Newswire https://www.

I’ve just hopped on a video call with the CEO of Retro Biosciences, the Sam Altman-backed longevity company, when I mention it’s quite hot.

Joe Betts-LaCroix takes my passing comment as a cue to muse on the wonders of air conditioning, and how energy and heat were once synonymous — until they weren’t.

As a multi-hyphenate scientist and entrepreneur who once invented the world’s smallest computer, Betts-LaCroix is excited by paradigm change.

At the helm of what is essentially Altman’s playground for experimenting with the limits of the human lifespan, Betts-LaCroix hopes to bring about for your brain and body the same shift that air conditioning brought to hot summer days: ideally, to one day decouple aging from decline and disease.

Retro’s experimental memory pill is designed to clear out “gunk in the cells” linked to Alzheimer’s and Parkinson’s, Betts-LaCroix said. If it works, the pill will restart stalled autophagy, the process by which cells break down and recycle their damaged components, cleaning up damage “especially in the brain cells,” he said.

In contrast, other new Alzheimer’s drugs, like Eisai’s Leqembi and Eli Lilly’s Kisunla, slow down cognitive decline by flushing out sticky amyloid plaques that are a hallmark of the disease.

The body movements of humans and other animals are supported by several intricate biological and neural mechanisms. Although roboticists have spent decades trying to build systems that emulate these mechanisms, the processes that drive robots’ motions remain very different from those in living organisms.

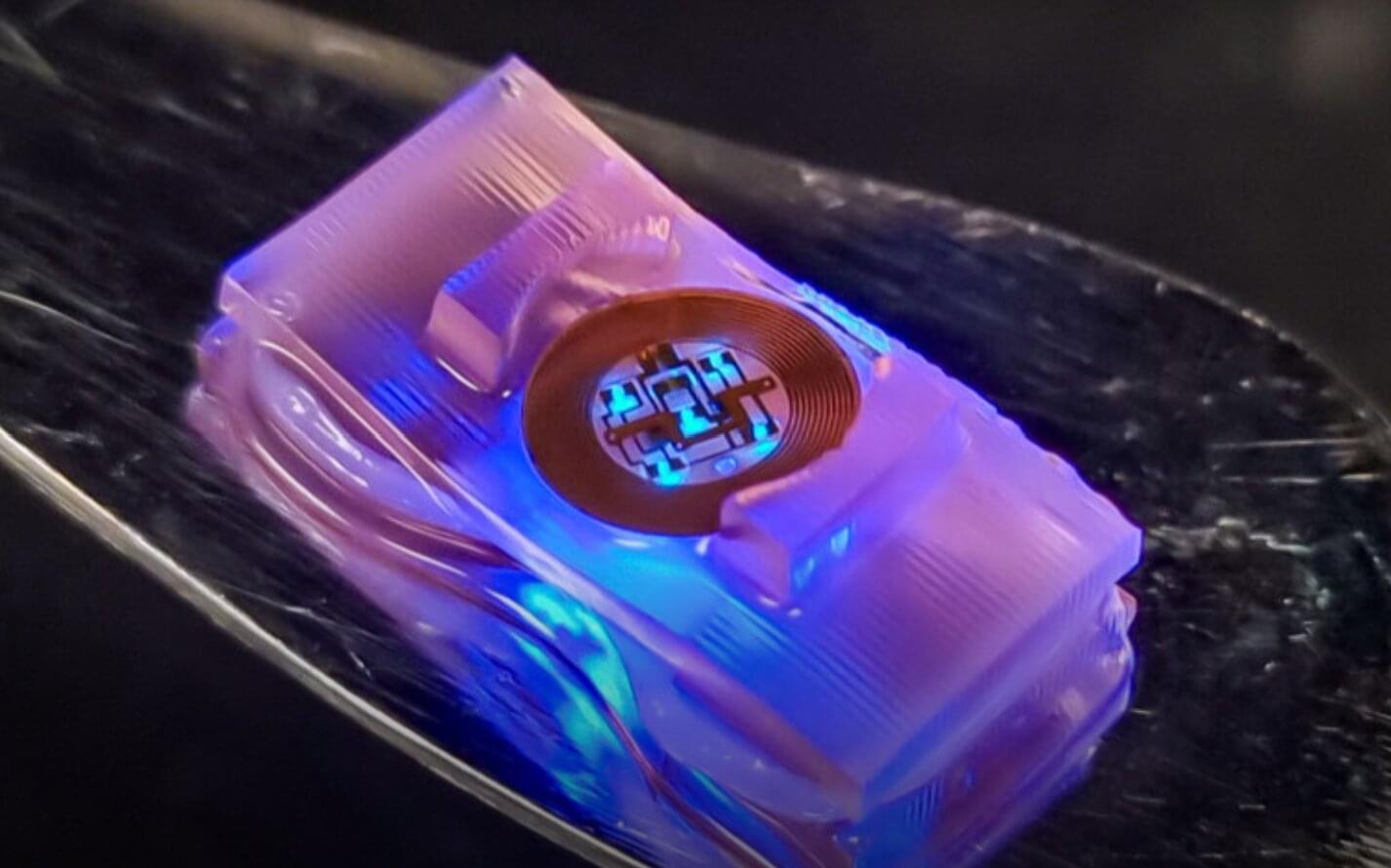

Researchers at the University of Illinois at Urbana-Champaign, Northwestern University and other institutions recently developed new biohybrid robots that combine living mouse cells with 3D-printed hydrogel structures and wireless optoelectronics.

These robots, presented in a paper published in Science Robotics, have neuromuscular junctions where the neurons can be controlled using optogenetic techniques, emulating the neural mechanisms that support human movements.

A new military test has showcased the potential for large drones to serve as motherships for smaller loitering munitions. The concept could get a push following a recent air launch of a Switchblade 600 loitering munition (LM) from a General Atomics Block 5 MQ-9A unmanned aircraft system (UAS).

It marked the first time a Switchblade 600 has ever been launched from an unmanned aircraft.

The flight testing took place July 22–24 at the U.S. Army’s Yuma Proving Ground test range.

Think twice about eliminating those pesky ants at your next family picnic. Their behavior may hold the key to reinventing how engineering materials, traffic-control systems and multi-agent robots are designed and used, thanks to research by recent graduate Matthew Loges and Assistant Professor Tomer Weiss of NJIT’s Ying Wu College of Computing.

The two earned a best presentation award for their research paper titled “Simulating Ant Swarm Aggregations Dynamics” at the ACM SIGGRAPH Symposium on Computer Animation (SCA), and a qualifying poster nomination for the undergraduate research competition at the 2025 ACM SIGGRAPH (Special Interest Group on Computer Graphics and Interactive Techniques) conference.

Their study began with the observation that ant swarms behave like both fluid and elastic materials. The duo began work in the summer of 2024. Loges became interested in research after taking Weiss’s elective class, IT 360 Computer Graphics for Visual Effects, in the Department of Informatics. This was his first research project and paper.
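One textbook way to describe a material that is at once elastic and fluid is the Maxwell viscoelastic model: a spring of stiffness $E$ in series with a damper of viscosity $\eta$, with stress $\sigma$ and strain $\varepsilon$. It is offered here only as an illustrative assumption for what “fluid and elastic” means, not as the model used in Loges and Weiss’s paper:

$$
\dot{\varepsilon}(t) = \frac{\dot{\sigma}(t)}{E} + \frac{\sigma(t)}{\eta},
\qquad
\varepsilon(t) = \frac{\sigma_0}{E} + \frac{\sigma_0}{\eta}\,t \quad \text{for a constant stress } \sigma_0 \text{ applied at } t = 0.
$$

Under a suddenly applied constant stress, the first term is an instant, spring-like elastic strain, while the second keeps growing over time, which is how a fluid flows.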

OpenAI is rolling out the GPT-5-Codex model to all Codex surfaces, including the terminal, the IDE extension, and Codex Web (chatgpt.com/codex).

Codex is an AI agent that lets you automate coding-related tasks. You can delegate complex tasks to Codex and watch it write and execute code for you.

Even if you don’t know programming languages, you can use Codex to “vibe code” your apps and web apps.

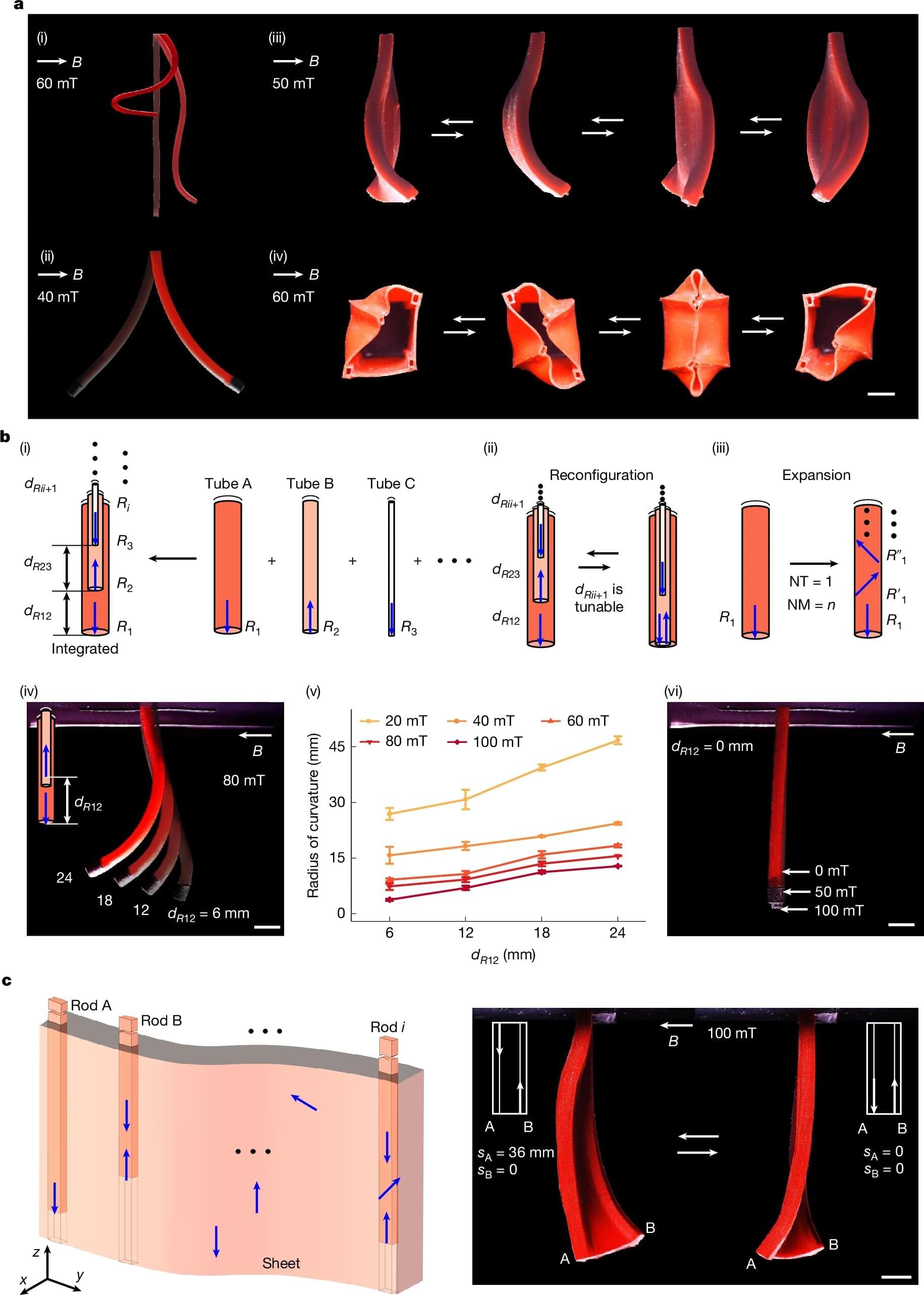

Until now, when scientists created magnetic robots, their magnetization profiles were generally fixed, enabling only a specific type of shape programming capability using applied external magnetic fields. Researchers at the Max Planck Institute for Intelligent Systems (MPI-IS) have now proposed a new magnetization reprogramming method that can drastically expand the complexity and diversity of the shape-programming capabilities of such robots.

They built a soft robot with a magnetization profile that can be altered in real time and in situ. Their findings are published in Nature.
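To see why a fixed magnetization profile limits shape programming, recall that a magnetic moment m in a uniform field B feels a torque τ = m × B. The minimal sketch below, in plain standard-library Python, models a hypothetical two-segment robot. The moments, the 10 mT field, and the function names are illustrative assumptions, and this is not the MPI-IS team’s method. Under the same applied field, flipping one segment’s magnetization reverses the torque on that segment, so the same field drives the body toward a different shape.

```python
from typing import List, Tuple

Vec = Tuple[float, float, float]


def cross(a: Vec, b: Vec) -> Vec:
    """Cross product a x b of two 3-D vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def segment_torques(moments: List[Vec], field: Vec) -> List[Vec]:
    """Torque tau = m x B (N*m) on each segment's moment m (A*m^2) in a uniform field B (T)."""
    return [cross(m, field) for m in moments]


# Hypothetical uniform 10 mT field along +z, identical in both cases.
B: Vec = (0.0, 0.0, 0.01)

# Hypothetical two-segment robot; moments are illustrative, not measured values.
profile_fixed = [(1e-6, 0.0, 0.0), (1e-6, 0.0, 0.0)]          # both segments along +x
profile_reprogrammed = [(1e-6, 0.0, 0.0), (-1e-6, 0.0, 0.0)]  # second segment flipped to -x

for name, profile in [("fixed", profile_fixed), ("reprogrammed", profile_reprogrammed)]:
    print(name, segment_torques(profile, B))
```

With the fixed profile both segments are twisted the same way about the y-axis; after reprogramming, the second segment is twisted the opposite way, roughly the difference between a uniform bend and an S-curve. A real soft robot would also need elasticity and segment geometry to turn these torques into a shape.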

Led by Prof. Dr. Metin Sitti in the Physical Intelligence (PI) Department at MPI-IS in collaboration with Koç University in Istanbul, Turkey, the team stacked several tubes inside each other like Matryoshka dolls.

Tesla continues to advance and solidify its momentum in the electric vehicle market through significant technological innovations, expansions, and achievements in autonomous driving, AI-powered technologies, and overall growth.

## Questions to inspire discussion

### Robotaxi Service Expansion

🚕 Q: How has Tesla’s robotaxi service in California expanded its operations? A: Tesla’s robotaxi service now operates until 2 a.m., leaving only 4 hours of downtime, which suggests operational readiness and confidence in the system’s performance.

🌎 Q: What hiring moves suggest Tesla plans to expand its robotaxi service globally? A: Tesla is hiring a senior software engineer in Fremont to build backend systems for real-time pricing and fees for robotaxi rides worldwide.

🌙 Q: How is Tesla preparing for expanded robotaxi coverage across the US? A: Tesla is hiring Autopilot data collection supervisors for night and afternoon shifts in Arizona, Florida, Texas, and Nevada, suggesting a planned expansion of its services.