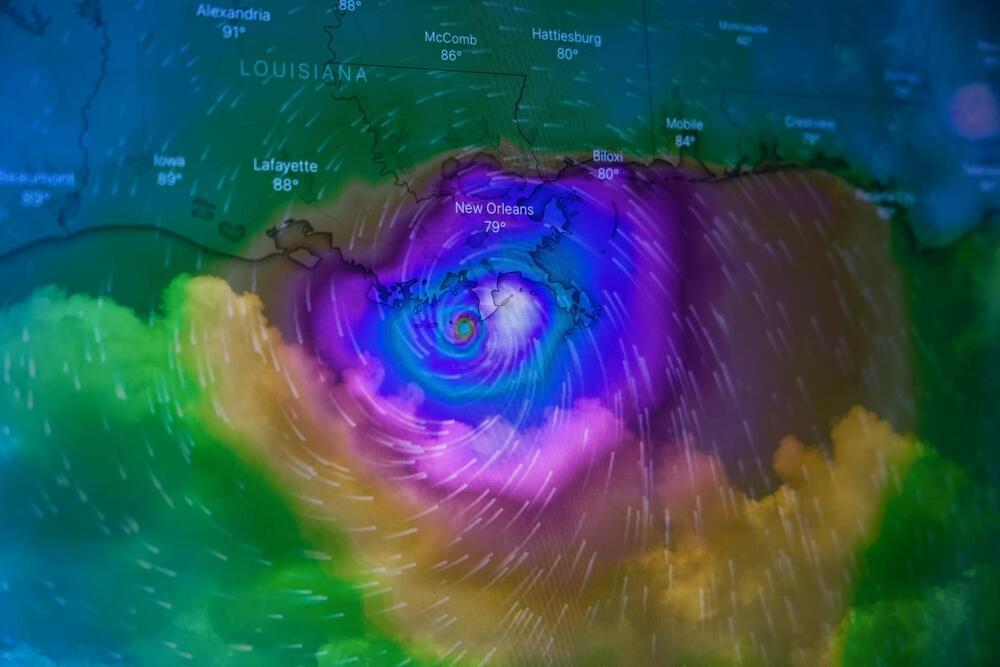

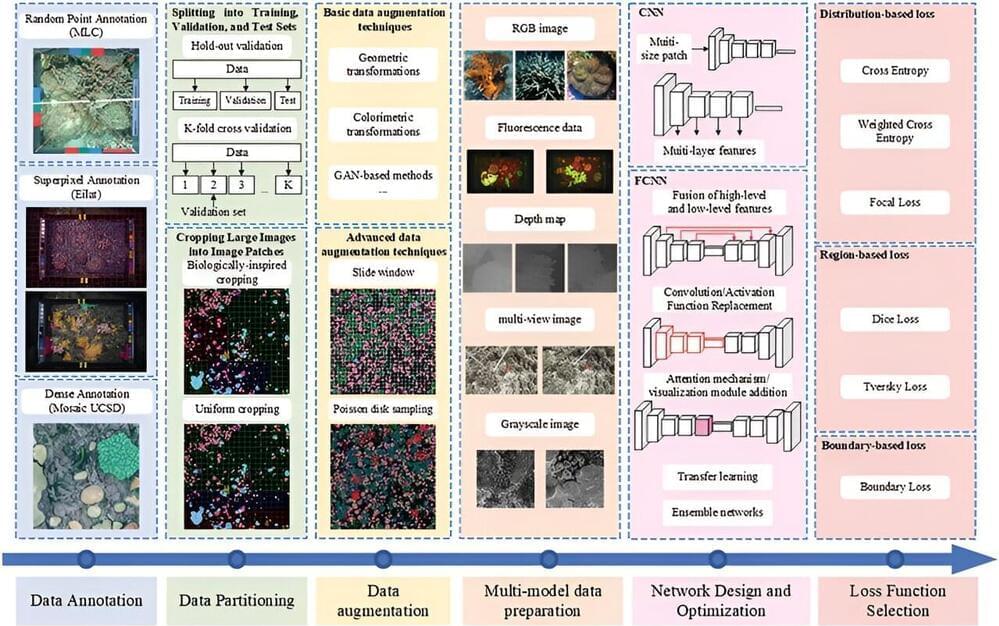

Much like the invigorating passage of a strong cold front, major changes are afoot in the weather forecasting community. And the endgame is nothing short of revolutionary: an entirely new, AI-based way to forecast weather that can run on a desktop computer.

Today’s artificial intelligence systems require one resource more than any other to operate: data. For example, large language models such as ChatGPT voraciously consume data to improve their answers to queries. The more data they have, and the higher its quality, the better their training and the sharper the results.