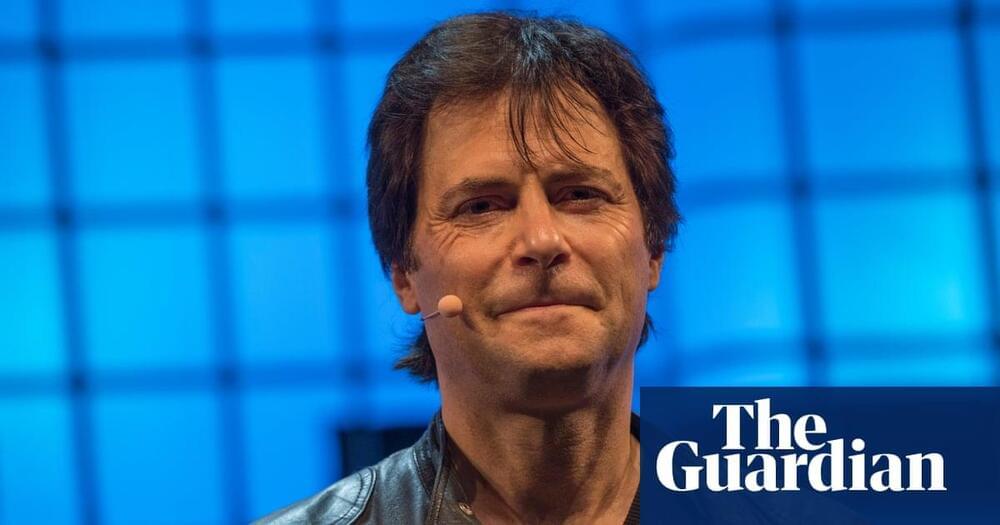

Max Tegmark argues that the downplaying is not accidental and threatens to delay, until it’s too late, the strict regulations needed.

Summary: Researchers developed a drone that flies autonomously using neuromorphic image processing, mimicking animal brains. This method significantly improves data processing speed and energy efficiency compared to traditional GPUs.

The study highlights the potential of tiny, agile drones for a range of applications. The neuromorphic approach lets the drone process data up to 64 times faster while using a third of the energy.
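Neuromorphic processors typically run spiking neural networks, in which neurons accumulate input over time and emit a discrete spike only when a threshold is crossed. Computation is therefore driven by events rather than by dense frame-by-frame arithmetic. The drone's actual network is not described here. The following is a minimal sketch of a generic leaky integrate-and-fire (LIF) neuron, a common building block of such networks. The function name `simulate_lif` and the values of `tau`, `threshold`, `v_reset`, and `dt` are illustrative assumptions, not taken from the study.

```python
def simulate_lif(inputs, tau=10.0, threshold=1.0, v_reset=0.0, dt=1.0):
    """Simulate one leaky integrate-and-fire neuron (hypothetical illustration).

    inputs: sequence of input currents, one per time step.
    Returns the indices of the time steps at which the neuron spiked.
    The default parameter values are arbitrary, chosen for illustration only.
    """
    v = v_reset
    spike_times = []
    decay = 1.0 - dt / tau  # fraction of membrane potential kept each step
    for t, current in enumerate(inputs):
        v = v * decay + current  # leak toward zero, then integrate the input
        if v >= threshold:
            spike_times.append(t)  # emit a spike event
            v = v_reset  # reset the membrane potential after firing
    return spike_times


print(simulate_lif([0.3] * 10))  # → [3, 7]
```

With a weak constant input, the neuron charges for a few steps and then fires periodically. With zero input it never spikes, so downstream neurons receive no events to process. This sparsity is one intuition often given for the efficiency of event-driven neuromorphic hardware.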

Championing an Aerospace Renaissance: Elizabeth Reynolds, Managing Director, US, Starburst Aerospace

Elizabeth Reynolds is Managing Director, US, of Starburst Aerospace (https://starburst.aero/), a global Aerospace and Defense (A&D) startup accelerator and strategic advisory practice championing today’s aerospace renaissance. Starburst aligns early-stage technology innovators with government and commercial stakeholders and investors to modernize infrastructure in space, transportation, communications, and intelligence.

Elizabeth’s team works alongside hundreds of technology startups developing new aircraft, spacecraft, satellites, drones, sensors, autonomy, robotics, and much more.

Elizabeth brings 15 years of experience in deep tech entrepreneurship, growth, business operations, and strategy to the team. Prior to joining Starburst, she served as an executive for biotech, medtech, mobility, and interactive media companies, from founding through IPO. She is also an advisor to a number of startups and nonprofits supporting STEM education.

Starburst has offices in Los Angeles, Washington, D.C., Paris, Munich, Singapore, Seoul, Tel Aviv, and Madrid, and has grown into a global team of 70 people with a portfolio of more than 140 startups, 20 accelerator programs, and 2 active venture funds. These aim to provide growth services for deep tech leaders who are disrupting the industry and working toward a safer, greener, and more connected world.

This video explores the 4th to the 10th dimensions of time. Watch this next video about the 10 stages of AI: The 10 Stages of Artificial Intelligence.

🎁 5 Free ChatGPT Prompts To Become a Superhuman: https://bit.ly/3Oka9FM

🤖 AI for Business Leaders (Udacity Program): https://bit.ly/3Qjxkmu

☕ My Patreon: / futurebusinesstech

➡️ Official Discord Server: / discord

💡 Future Business Tech explores the future of technology and the world.

Examples of topics I cover include:

• Artificial Intelligence & Robotics

• Virtual and Augmented Reality

• Brain-Computer Interfaces

• Transhumanism

• Genetic Engineering

SUBSCRIBE: https://bit.ly/3geLDGO

Disclaimer:

Some links in this description are affiliate links.

As an Amazon Associate, I earn from qualifying purchases.


Please support us on Patreon to get access to the private Discord server, bi-weekly calls, early access, and ad-free listening.

/ mlst.

Aman Bhargava from Caltech and Cameron Witkowski from the University of Toronto join us to discuss their groundbreaking paper, “What’s the Magic Word? A Control Theory of LLM Prompting.” They frame LLM systems as discrete stochastic dynamical systems, which lets them analyze LLMs in a structured way, much as engineers analyze control systems. They explore the “reachable set” of outputs for an LLM: the set of outputs the model can be steered to produce from a given starting point by choosing different prompts. The research highlights that prompt engineering, or optimizing the input tokens, can significantly influence LLM outputs, and shows that even short prompts can drastically alter the likelihood of specific outputs. Aman and Cameron’s work could be a boon for understanding and improving LLMs. They suggest that a deeper exploration of control theory concepts could lead to more reliable and capable language models.
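The idea of a reachable set can be made concrete with a toy example. This is a minimal sketch under stated assumptions, not the authors' code. A tiny hypothetical "language model" over a three-token vocabulary (`VOCAB`, `SUCCESSOR`, and `BIAS` are invented for illustration) is decoded greedily, which removes sampling randomness. A control prompt `u` of at most `k` tokens is prepended to a fixed starting sequence `x0`. The reachable set is then every next token that some such prompt can make the model's top choice.

```python
from itertools import product

# Hypothetical toy "language model": every token in the context adds 1.0 to the
# logit of its successor token, on top of a fixed per-token bias.
VOCAB = ("a", "b", "c")
SUCCESSOR = {"a": "b", "b": "c", "c": "a"}
BIAS = {"a": 1.5, "b": 0.0, "c": 0.0}


def next_token_logits(context):
    """Return unnormalized next-token scores for a tuple of tokens."""
    logits = dict(BIAS)  # copy so the shared bias table is never mutated
    for tok in context:
        logits[SUCCESSOR[tok]] += 1.0
    return logits


def greedy_next_token(context):
    """Greedy decoding: pick the highest-scoring next token."""
    logits = next_token_logits(context)
    return max(VOCAB, key=lambda v: logits[v])


def reachable_set(x0, k):
    """Brute-force every control prompt u with len(u) <= k, prepended to x0."""
    reachable = set()
    for length in range(k + 1):
        for u in product(VOCAB, repeat=length):  # length 0 yields the empty prompt
            reachable.add(greedy_next_token(u + tuple(x0)))
    return reachable


for k in range(3):
    print(k, sorted(reachable_set(("a",), k)))
# 0 ['a']
# 1 ['a', 'b']
# 2 ['a', 'b', 'c']
```

Each extra prompt token enlarges the set of outputs the "controller" can force. Exhaustive enumeration grows as |VOCAB|^k, so it only works for toys like this; for real LLMs, prompts have to be found by search or optimization instead.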

What’s the Magic Word? A Control Theory of LLM Prompting (Aman Bhargava, Cameron Witkowski, Manav Shah, Matt Thomson)

https://arxiv.org/abs/2310.

LLM Control Theory Seminar (April 2024)

Society for the Pursuit of AGI (founded by Cameron)

https://agisociety.mydurable.com/

New research argues that measuring progress in AI must account for the fact that the humans AI is measured against are themselves a variable, noisy benchmark.
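One way to see why a noisy human benchmark matters is to compare a model's score against the spread of human scores rather than against a single "human-level" number. The sketch below is a hypothetical illustration, not the method of the research mentioned above. The function `compare_to_human_baseline` and the score values are invented for illustration, and the code uses only Python's standard `statistics` module.

```python
import statistics


def compare_to_human_baseline(model_score, human_scores):
    """Place a model score within a (hypothetical) distribution of human scores.

    Returns (mean, sample standard deviation, z-score of the model,
    fraction of humans scoring strictly below the model).
    """
    mean = statistics.mean(human_scores)
    sd = statistics.stdev(human_scores)  # sample standard deviation (n - 1)
    z = (model_score - mean) / sd
    frac_below = sum(s < model_score for s in human_scores) / len(human_scores)
    return mean, sd, z, frac_below


# Hypothetical accuracies from five human annotators, not real data.
human_scores = [0.70, 0.75, 0.80, 0.85, 0.90]
print(compare_to_human_baseline(0.82, human_scores))
```

Here a model scoring 0.82 beats the human mean but sits well within one standard deviation of it and outperforms only some of the annotators. Reporting a single averaged "human baseline" would hide that spread.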