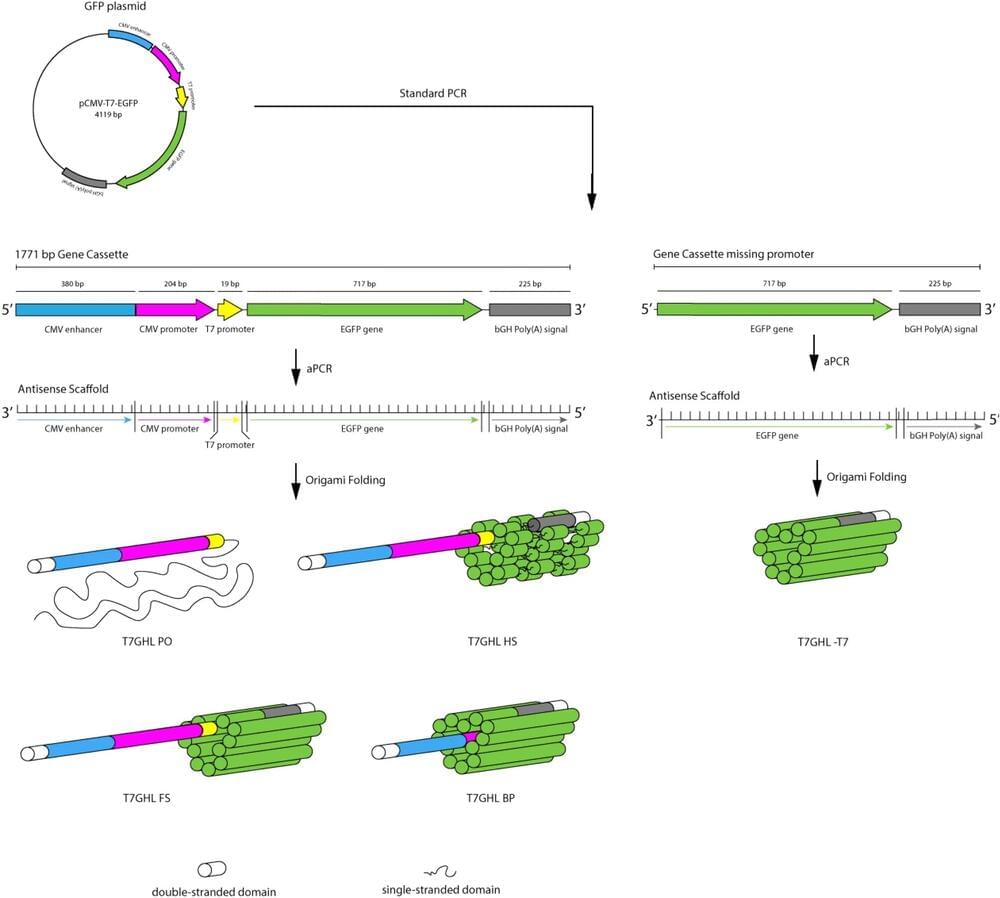

Scientists have been making nanoparticles out of DNA strands for two decades, manipulating the bonds that maintain DNA’s double-helical shape to sculpt self-assembling structures that could someday have jaw-dropping medical applications.

The study of DNA nanoparticles, however, has focused mostly on their architecture, turning the genetic code of life into components for fabricating minuscule robots. Two Iowa State University researchers, professor Eric Henderson and recent doctoral graduate Chang-Yong Oh of the genetics, development, and cell biology department, hope to change that by showing that nanoscale materials made of DNA can convey their built-in genetic instructions.

“So far, most people have been exploring DNA nanoparticles from an engineering perspective. Little attention has been paid to the information held in those DNA strands,” Oh said.