The startup competes against the likes of Google and ChatGPT-maker OpenAI.

Remember to watch part 1: https://youtu.be/tANAl15CCLE

Welcome to the year 2324, where humanity has transcended its Earthly origins to build civilizations across the solar system. Mars, Titan, and even the clouds of Venus are now home to more than 2.5 billion people, thanks to anti-aging technologies and AI-driven advancements. But how did we get here? And what does life look like in this brave new world? In this continuation of my speculative future series, I explore the political structures, societal shifts, and technological innovations that define our interplanetary existence. Get ready for a journey through a transformed solar system!

Like, comment, and subscribe to join me in imagining our cosmic future!

Credit to ‘StolenMadWolf’ for creating the collection that several of the solar system flags in this video are based on: https://www.deviantart.com/stolenmadwolf/gallery/82886572/sol-flag-collection

Besides Pokémon, there might have been no greater media franchise for a child of the 90s than the Transformers: mysterious robots fighting an intergalactic war that can inexplicably change into various Earth-based objects, like trucks and airplanes. It led to a number of toys that can also change shape from fighting robots into various ordinary objects. And, perhaps in a case of life imitating art, plenty of real-life robots have features one might think were inspired by this franchise, like this transforming quadruped robot.

Called the CYOBot, the robot has four articulating arms with a wheel at the end of each. The arms can be placed in a wide array of positions for different operating characteristics, allowing the robot to move in an incredibly diverse way. It’s based on a previous version called the CYOCrawler, using similar articulating arms but with no wheels. The build centers around an ESP32-S3 microcontroller, giving it plenty of compute power for things like machine learning, as well as wireless capabilities for control or access to more computing power.

Both robots are open source and modular as well, allowing a range of people to use and add on to the platform. Another perk here is that most parts are common or 3D printed, which makes for a fairly low barrier to entry for a platform with so many different configurations and options for expansion and development. If you prefer robots without wheels, though, we’d always recommend looking at Strandbeests for inspiration.

A rare species of bee was found on land where Meta was planning to put a nuclear-powered artificial intelligence data center, the Financial Times reported, citing people familiar with the matter. Meta CEO Mark Zuckerberg reportedly told employees during an all-hands meeting that the rare bees would further complicate a deal with an existing nuclear power plant to build the data center.

Even the exact definition of AGI is still heavily debated, making it a murky milestone.

Regardless, the stakes are high: the AI industry has poured untold billions of dollars into building out datacenters to train AI models, an investment that’s likely many years away from paying off.

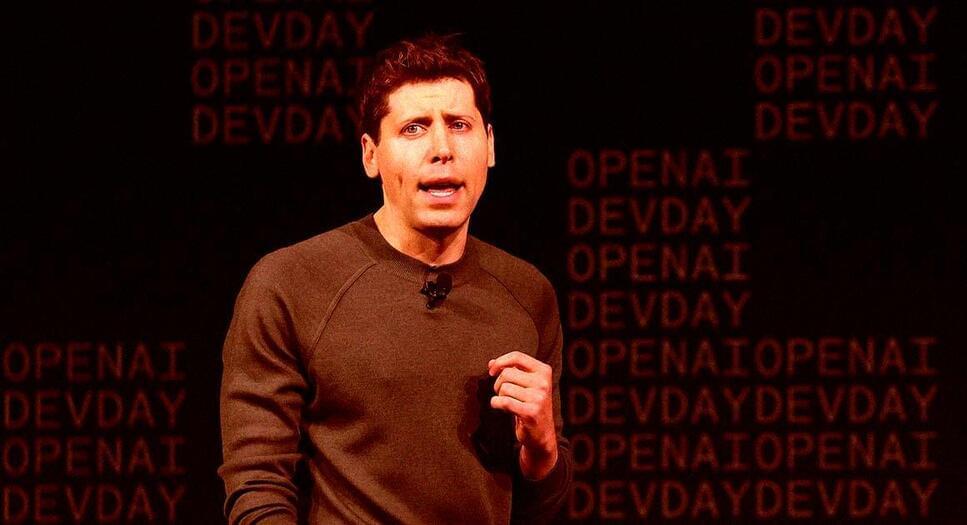

Naturally, OpenAI CEO and hypeman Sam Altman has remained optimistic. During a Reddit AMA this week, he even claimed that AGI is “achievable with current hardware.”