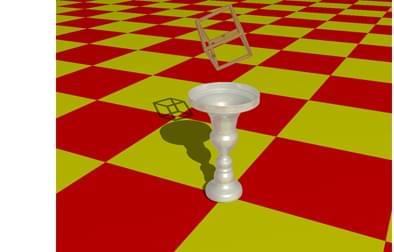

The most observant of our readers might have already noticed a small detail about Oasis, a caveat that only the most eagle-eyed OSINT enthusiasts would catch: it’s basically a 1-to-1 copy of Mojang’s Minecraft.

And those readers would be right. Essentially, the “first AI-generated game” is nothing more than blatant plagiarism of everyone’s favorite sandbox. It was trained on thousands of hours of Minecraft gameplay and recordings of the corresponding user actions, and the result is a nearly identical “game” that is worse in every respect, with a similar visual style, UI, gameplay mechanics, fonts, visual effects, animations, and so on.

One thing that doesn’t exist in the original Minecraft but is front and center in Oasis is, of course, AI hallucination. Those who have tried it confirm that the experience is incredibly unstable: environments often morph into something else once they leave the player’s direct line of sight. That makes the “first AI-generated game” a proof of concept at best, something its creators, to their credit, openly admit by describing the current iteration of Oasis as a “technical demo.”