The ChatGPT maker reveals details of what’s officially known as OpenAI o1, which shows that AI needs more than scale to advance.

The following declassified nuclear test footage has been enhanced using AI with techniques such as slow motion, frame interpolation, upscaling, and colorization. This helps improve the clarity and visual quality of the original recordings, which were often degraded or limited by the technology of the time. Experiencing these shots with enhanced detail brings the devastating power of atomic weapons into focus and offers a clearer perspective on their catastrophic potential and impact.

Music generated with Suno AI.


ChatGPT maker OpenAI is rumored to be imminently releasing a brand-new AI model, internally dubbed “Strawberry,” that has a “human-like” ability to reason.

As Bloomberg reports, a person familiar with the project says it could be released as soon as this week.

Rumors of an OpenAI model capable of reasoning have swirled for many months now. In November, Reuters and The Information reported that the company was working on a shadowy project called Q* (pronounced Q-Star), which was alleged to represent a breakthrough in OpenAI’s efforts to realize artificial general intelligence, the theoretical point at which an AI could outperform a human.

Elon Musk is not smiling: Oracle and Elon Musk’s AI startup xAI recently ended talks on a potential $10 billion cloud computing deal, with xAI opting to build its own data center in Memphis, Tennessee.

At the time, Musk emphasised the need for speed and for xAI to control its own infrastructure. “Our fundamental competitiveness depends on being faster than any other AI company. This is the only way to catch up,” he added.

xAI is constructing its own AI data center with 100,000 NVIDIA chips. The company claims it will be the world’s most powerful AI training cluster, marking a significant shift in strategy from cloud reliance to full infrastructure ownership.

The AI scene is electrified with groundbreaking advancements this month, keeping us all at the edge of our seats. A mind-blowing AI robot with human-like intelligence has the world in shock. Google DeepMind’s JEST AI learns at an astonishing 13x faster pace. OpenAI’s SearchGPT and CriticGPT, the force behind ChatGPT’s prowess, are disrupting industries. STRAWBERRY, their most powerful AI yet, takes center stage. GPT4ALL 3.0 is the AI sensation causing a frenzy, while OpenAI’s AI Health Coach promises personalized wellness solutions. Llama 3.1 emerges as a contender, and NeMo AI boasts a massive 128k context capacity, running locally and free. Microsoft’s new AI Search could redefine how we navigate information, while OpenAI’s latest unnamed model has the tech world buzzing with anticipation.


Timestamps:

00:01:02 AI Robot with Human Brain

00:09:08 Google DeepMind’s JEST AI Learns 13x Faster

00:18:04 OpenAI’s New SearchGPT

00:26:33 OpenAI’s New AI CriticGPT

00:37:05 STRAWBERRY: OpenAI’s MOST POWERFUL AI Ever!

00:45:40 AGI Levels by OpenAI

00:47:11 Grok 2

00:55:01 GPT4ALL 3.0

01:02:52 Humanoid Robots Now Taking Human Jobs

01:12:04 OpenAI Launching the AI Health Coach

01:20:17 New Llama 3.1 is The Most Powerful AI Model Ever!

01:29:26 This AI Reads Your Mind And Shows You Images!

01:37:15 New AI DESTROYS GPT-4o.

01:45:19 GPT-4o Mini

01:54:09 NeMo AI

02:02:28 Microsoft New AI Search

02:05:10 New Mistral Large 2 Model

Researchers at the Rolls-Royce University Technology Centre (UTC) in Manufacturing and On-Wing Technology at the University of Nottingham have developed ultra-thin soft robots designed for exploring narrow spaces in challenging built environments. The research is published in the journal Nature Communications.

These robots, which feature multimodal locomotion capabilities, are set to transform how inspections and maintenance are carried out on assets such as power plants, bridges and aero engines.

The robots, known as Thin Soft Robots (TS-Robots), are just 1.7 mm thick, enabling them to access and navigate confined spaces, such as millimeter-wide gaps beneath doors or within complex machinery.

Google researchers have created an innovative AI model called Health Acoustic Representations (HeAR), designed to identify acoustic biomarkers for diseases like tuberculosis.

It can listen to human sounds and flag early signs of disease.

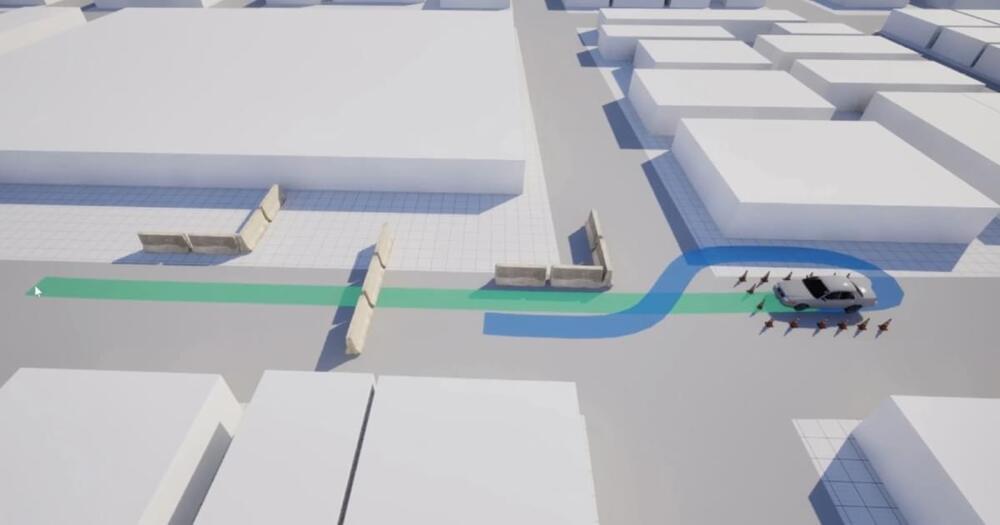

Game developer jourverse, who is currently working on a tutorial series on building a traffic system in Unreal Engine 5, shared a demo project file for this procedural road network, which is integrated with vehicle AI that avoids obstacles and uses A* for pathfinding.

The developer explained that both the A* algorithm and the road editor mode are implemented in C++, with no use of neural networks. Vehicle AI operations like spline following, reversing, and performing 3-point turns are handled through Blueprints. The vehicle AI navigates using two paths: the green spline for the main route and the blue spline for obstacle avoidance. The main spline leverages road network nodes to determine the path to the target via A* on FPathNode, which includes adjacent road nodes.
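To make the main-route search concrete, here is a minimal Python sketch of A* over a small road graph. It is not the developer's code, which is written in C++ around FPathNode; the `positions` and `neighbors` dictionaries, the node names, and the straight-line heuristic are all assumptions chosen for illustration.

```python
import heapq
import math


def a_star(positions, neighbors, start, goal):
    """Return the list of node ids from start to goal, or None if unreachable."""

    def dist(a, b):
        # Straight-line distance, used both as edge cost and as heuristic.
        (ax, ay), (bx, by) = positions[a], positions[b]
        return math.hypot(ax - bx, ay - by)

    open_heap = [(dist(start, goal), start)]  # entries are (f = g + h, node)
    came_from = {}
    g_cost = {start: 0.0}
    closed = set()

    while open_heap:
        _, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if current in closed:
            continue  # stale heap entry for an already expanded node
        closed.add(current)
        for nxt in neighbors.get(current, ()):
            tentative = g_cost[current] + dist(current, nxt)
            if tentative < g_cost.get(nxt, math.inf):
                g_cost[nxt] = tentative
                came_from[nxt] = current
                heapq.heappush(open_heap, (tentative + dist(nxt, goal), nxt))
    return None


# Hypothetical road nodes (id -> 2D position) and their adjacent nodes.
positions = {"A": (0, 0), "B": (10, 0), "C": (10, 10), "D": (0, 15), "E": (20, 10)}
neighbors = {
    "A": ["B", "D"],
    "B": ["A", "C"],
    "C": ["B", "D", "E"],
    "D": ["A", "C"],
    "E": ["C"],
}
route = a_star(positions, neighbors, "A", "E")
print(route)
```

In a setup like the one described, the returned node sequence would then be turned into the spline the vehicle follows; that conversion is left out here.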

For obstacle detection, the vehicle uses polynomial regression to predict its future position. When it detects an obstacle, it generates a grid of sphere traces to map the obstacle’s location, then runs a second A* search to plan a path around it.
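The position-prediction step can be illustrated with a short Python sketch: fit a low-degree polynomial to recent (time, x, y) samples and evaluate it at a future time. The source does not state the polynomial degree, the sample window, or how the fit is computed, so the degree of 2, the sample data, and the helper names `polyfit` and `predict_position` are assumptions for illustration only.

```python
def polyfit(ts, ys, degree):
    """Least-squares polynomial coefficients, lowest order first.

    Solves the normal equations directly; needs at least degree + 1
    samples with distinct time values.
    """
    n = degree + 1
    # Augmented normal-equation matrix [A^T A | A^T y].
    m = [
        [sum(t ** (i + j) for t in ts) for j in range(n)]
        + [sum(y * t ** i for t, y in zip(ts, ys))]
        for i in range(n)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def predict_position(samples, t_future, degree=2):
    """Extrapolate (x, y) at t_future from (t, x, y) samples."""
    ts = [t for t, _, _ in samples]
    coeffs_x = polyfit(ts, [x for _, x, _ in samples], degree)
    coeffs_y = polyfit(ts, [y for _, _, y in samples], degree)

    def evaluate(coeffs):
        return sum(c * t_future ** i for i, c in enumerate(coeffs))

    return evaluate(coeffs_x), evaluate(coeffs_y)


# Hypothetical recent samples: constant speed in x, accelerating in y.
samples = [(t, 2.0 * t, 0.5 * t * t) for t in (0.0, 0.5, 1.0, 1.5, 2.0)]
predicted = predict_position(samples, t_future=3.0)
print(predicted)
```

A predicted point like this could be tested against nearby obstacles before the vehicle reaches it, which is the role the prediction plays in the description above.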