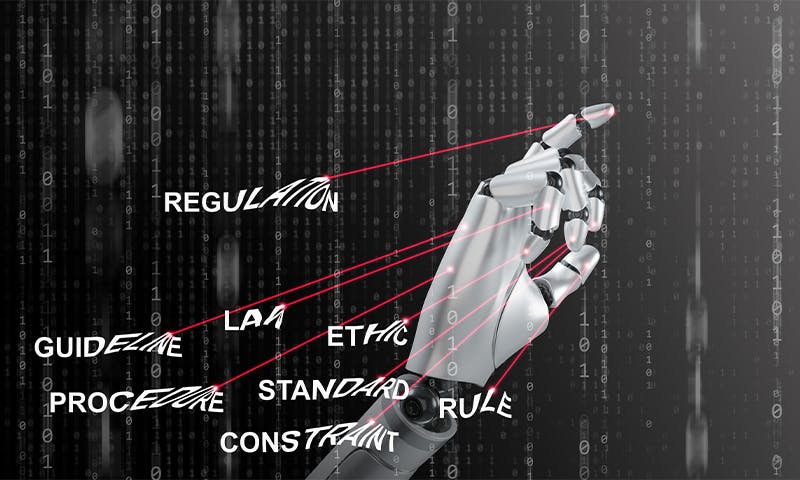

Why the “hard problem” of AI—controlling our superintelligent creations—is impossible.

This ultrathin device safely delivers gene therapies to the inner ear, offering hope for hearing restoration.

Elon Musk’s xAI announced Monday it raised $6 billion in a Series C funding round, putting the company’s value at more than $40 billion as it continues to strengthen its AI products and infrastructure.

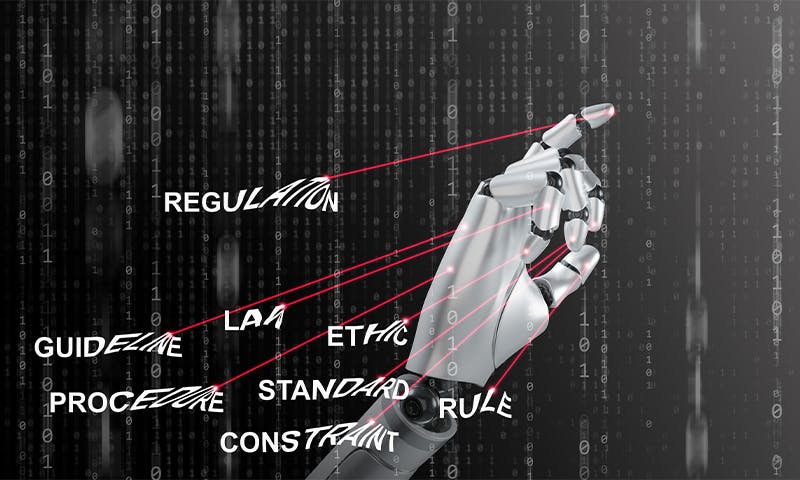

Urban construction land expansion damages natural ecological patches and changes the relationship between residents and ecological land, a problem made widespread by global urbanization. To account for both nature and society in urban planning, we established an evaluation system for urban green space construction that meets residents’ needs during urban development while considering the distribution of natural resources. The aim is to ease conflicts over urban land use and achieve sustainable urban development. Taking Fengdong New City in the Xixian New Area as an example, the study used seven indicators to construct an ecological source evaluation system, used four types of factors with the minimum cumulative resistance (MCR) model to identify ecological corridors and ecological nodes, and used a Back Propagation (BP) neural network to determine the weights of the evaluation system, thereby constructing an urban green space ecological network. After comprehensive analysis, we retained 11 ecological source areas, identified 18 ecological corridors, and integrated and selected 13 ecological nodes. We found that the area under the influence of ecosystem functions is 12.56 km², the area under the influence of ecological demands is 1.40 km², and the area after comprehensive consideration is 22.88 km². Based on these results, this paper concludes that protecting, tapping, and developing various urban greening factors do not conflict with meeting residents’ ecological needs, and that by considering urban greening factors, cities can achieve green and sustainable development. We also found that the BP neural network objectively calculates and analyzes the evaluation factors, corrects the distribution value of each factor, and ensures the validity and practicability of the weights. The main innovation of this study lies in the quantitative analysis and spatial expression of residents’ demand for ecological land and of the positive and negative aspects of disturbance.
The research results improve the credibility and scientific rigor of green space construction so that urban planning can better adapt to and serve the city and its residents.
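The minimum cumulative resistance (MCR) model is commonly written as MCR = f min Σ (D_ij × R_i): along the cheapest path from an ecological source j to a landscape cell i, it accumulates the distance crossed multiplied by the resistance R_i of each cell. The Python sketch below computes such a cumulative resistance surface on a small raster using Dijkstra's algorithm. It is a minimal illustration under stated assumptions, not the study's workflow: the resistance values, the 4-neighbour movement rule, the mean-of-two-cells step cost, and the function name `min_cumulative_resistance` are all hypothetical choices.

```python
import heapq
from typing import List, Sequence, Tuple


def min_cumulative_resistance(
    resistance: Sequence[Sequence[float]],
    sources: Sequence[Tuple[int, int]],
) -> List[List[float]]:
    """Minimum cumulative resistance from the nearest source to every cell.

    Assumption (hypothetical): moving between 4-connected neighbours costs the
    mean of the two cells' resistance values times a step length of 1 cell.
    """
    rows, cols = len(resistance), len(resistance[0])
    cost = [[float("inf")] * cols for _ in range(rows)]
    heap: List[Tuple[float, int, int]] = []
    for r, c in sources:
        cost[r][c] = 0.0  # ecological sources start with zero accumulated resistance
        heapq.heappush(heap, (0.0, r, c))

    while heap:
        d, r, c = heapq.heappop(heap)
        if d > cost[r][c]:
            continue  # stale entry: a cheaper route to this cell was already found
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                nd = d + (resistance[r][c] + resistance[nr][nc]) / 2.0
                if nd < cost[nr][nc]:
                    cost[nr][nc] = nd
                    heapq.heappush(heap, (nd, nr, nc))
    return cost


if __name__ == "__main__":
    # Hypothetical 3x3 resistance raster with a high-resistance cell (9) in the middle.
    grid = [[1, 1, 1],
            [1, 9, 1],
            [1, 1, 1]]
    for row in min_cumulative_resistance(grid, sources=[(0, 0)]):
        print(row)
```

Low-cost paths through the resulting surface between pairs of sources are where corridors would typically be traced; the study's actual resistance factors and their BP-derived weights are not reproduced here.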

The field of artificial intelligence (AI) has made remarkable strides in recent years, particularly in understanding human language. At the heart of this revolution is the Transformer, the architecture that allows large language models (LLMs) to process language far more efficiently than earlier designs such as recurrent neural networks, largely because it handles all the tokens in a sequence in parallel rather than one at a time. But how do Transformers work? To explain, let’s take a journey through their inner workings, using stories and analogies to make the complex concepts easier to grasp.
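The core operation inside a Transformer is scaled dot-product attention, softmax(QKᵀ/√d_k)V, introduced in the paper "Attention Is All You Need". The sketch below is a minimal single-head version in plain Python, assuming no masking, no learned projection matrices, and tiny hand-written inputs; real models add multiple heads and learned weights and run on tensor libraries.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max so exp() cannot overflow
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    out: Matrix = []
    for q_row in q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in k]
        weights = softmax(scores)
        # Output row: weighted average of the value rows.
        out.append([sum(w * v_row[j] for w, v_row in zip(weights, v)) for j in range(len(v[0]))])
    return out


if __name__ == "__main__":
    # Hypothetical toy inputs: two tokens with 2-dimensional vectors.
    q = [[1.0, 0.0], [0.0, 1.0]]
    k = [[1.0, 0.0], [0.0, 1.0]]
    v = [[10.0, 0.0], [0.0, 10.0]]
    print(attention(q, k, v))  # each query leans toward the value of its matching key
```

Each output row is a weighted average of the value rows, with the weights set by how closely the query matches each key; when all keys are identical, the weights are uniform and the output is the plain mean of the values.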

Apple’s latest machine learning research could make creating models for Apple Intelligence faster, thanks to a technique that almost triples the rate of token generation on Nvidia GPUs.

One of the problems in creating large language models (LLMs) for tools and apps that offer AI-based functionality, such as Apple Intelligence, is the inefficiency of producing the LLMs in the first place. Training machine learning models is a slow, resource-intensive process, often addressed by buying more hardware and accepting higher energy costs.

Earlier in 2024, Apple published and open-sourced Recurrent Drafter (ReDrafter), a speculative decoding method that speeds up token generation. It uses a recurrent neural network (RNN) draft model that combines beam search with dynamic tree attention to predict and verify draft tokens from multiple paths.
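Speculative decoding rests on a draft-then-verify loop: a cheap draft model proposes several tokens, and the large target model keeps only the prefix it agrees with. The Python sketch below shows that loop in its simplest greedy form. It is not ReDrafter: it uses a single draft sequence rather than an RNN drafter with beam search and dynamic tree attention, and `target` and `draft` are hypothetical next-token functions standing in for real models.

```python
from typing import Callable, List

# Hypothetical model interface: given the tokens so far, return the greedy next token.
NextToken = Callable[[List[int]], int]


def speculative_decode(target: NextToken, draft: NextToken,
                       prompt: List[int], max_new: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding with a single draft sequence of length k."""
    out = list(prompt)
    while len(out) - len(prompt) < max_new:
        # 1. Draft: the cheap model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))

        # 2. Verify: keep drafted tokens while they match the target's choice.
        #    (A real system scores all k positions in one batched target pass.)
        accepted: List[int] = []
        for tok in proposal:
            expected = target(out + accepted)
            accepted.append(expected)
            if expected != tok:
                break  # the target's correction replaces the first mismatch
        else:
            accepted.append(target(out + accepted))  # bonus token: all k matched

        out.extend(accepted)
    return out[: len(prompt) + max_new]


if __name__ == "__main__":
    def target(seq: List[int]) -> int:
        return (seq[-1] + 1) % 10  # toy target: count up mod 10

    def draft(seq: List[int]) -> int:
        # Toy draft: agrees with the target except when len(seq) is a multiple of 3.
        return (seq[-1] + 1) % 10 if len(seq) % 3 else 0

    print(speculative_decode(target, draft, prompt=[0], max_new=12))
```

Because every kept token is one the target model would have chosen anyway, the output matches plain greedy decoding with the target model; in practice the speedup comes from scoring all k drafted positions in a single batched forward pass, which this sketch does not model.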

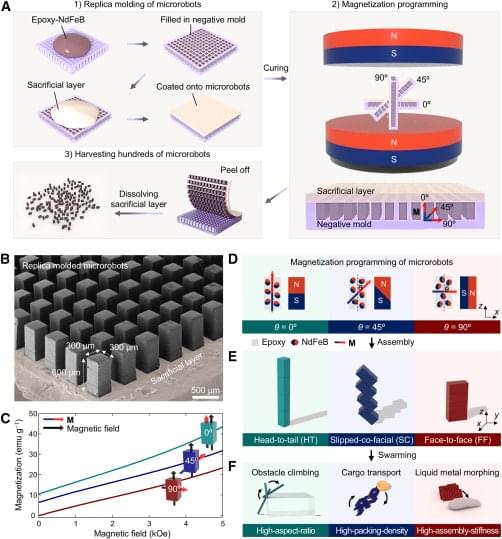

What if robots could work together like ants to move objects, clear blockages, and guide living creatures?

Scientists at Hanyang University in Seoul, South Korea, have developed small magnetic robots that work together in swarms to perform complex tasks, such as moving and lifting objects much larger than themselves. These microrobot swarms, controlled by a rotating magnetic field, can be used in challenging environments, offering solutions for tasks like minimally invasive treatments for clogged arteries and guiding small organisms.

The researchers tested how microrobot swarms with different configurations performed various tasks. They discovered that swarms with a high aspect ratio could climb obstacles five times higher than a single robot’s body length and throw themselves over them. In another demonstration, a swarm of 1,000 microrobots formed a raft on water, surrounding a pill 2,000 times heavier than a single robot, allowing the swarm to transport the drug through the liquid. On land, a swarm moved cargo 350 times heavier than each robot, while another swarm unclogged tubes resembling blocked blood vessels. Using spinning and orbital dragging motions, the team also developed a system where robot swarms could guide the movements of small organisms.

Researchers at the Tokyo-based startup Sakana AI have developed a new technique that enables language models to use memory more efficiently, helping enterprises cut the costs of building applications on top of large language models (LLMs) and other Transformer-based models.

The technique, called ‘universal transformer memory,’ uses special neural networks to optimize LLMs to keep bits of information that matter and discard redundant details from their context.
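The general idea of scoring context tokens and discarding low-value ones can be sketched as follows. This is not Sakana AI's method: where the article describes learned neural networks deciding what to keep, this Python sketch substitutes a fixed heuristic (the total attention each token receives, summed over queries) and a hypothetical token `budget`. Both function names are illustrative.

```python
from typing import List, Sequence


def attention_received(attn: Sequence[Sequence[float]]) -> List[float]:
    """Heuristic score: total attention each key position receives over all queries."""
    return [sum(row[j] for row in attn) for j in range(len(attn[0]))]


def prune_context(tokens: Sequence[str], scores: Sequence[float], budget: int) -> List[str]:
    """Keep the `budget` highest-scoring tokens, preserving their original order."""
    if budget <= 0:
        return []
    if budget >= len(tokens):
        return list(tokens)
    # Rank positions by descending score (ties go to the earlier position).
    ranked = sorted(range(len(tokens)), key=lambda i: (-scores[i], i))
    keep = sorted(ranked[:budget])  # restore original order among the survivors
    return [tokens[i] for i in keep]


if __name__ == "__main__":
    tokens = ["The", "report", "says", "um", "revenue", "rose"]
    # Hypothetical attention matrix (rows: queries, columns: keys); each row sums to 1.
    attn = [
        [0.10, 0.30, 0.05, 0.05, 0.30, 0.20],
        [0.05, 0.25, 0.10, 0.00, 0.40, 0.20],
    ]
    scores = attention_received(attn)
    print(prune_context(tokens, scores, budget=3))
```

Survivors stay in their original order because token position carries meaning for the model; a learned scorer would replace `attention_received` while the pruning step could stay the same.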

From VentureBeat.
