Biannual publication spotlights ‘visual experiments and conceptually refined pieces’

Introduction to O1 Models: The O1 series represents a shift from quick, intuitive thinking to slower, more deliberate reasoning. These models are designed to handle complex problems by thoroughly analyzing multiple data sets and reasoning through various dimensions of a problem.


Stanford and Seoul National University researchers have developed an artificial sensory nerve system that can activate the twitch reflex in a cockroach and identify letters in the Braille alphabet.

The work, reported May 31 in Science, is a step toward creating artificial skin for prosthetic limbs, to restore sensation to amputees and, perhaps, one day give robots some type of reflex capability.

“We take skin for granted but it’s a complex sensing, signaling and decision-making system,” said Zhenan Bao, a professor of chemical engineering and one of the senior authors. “This artificial sensory nerve system is a step toward making skin-like sensory neural networks for all sorts of applications.”

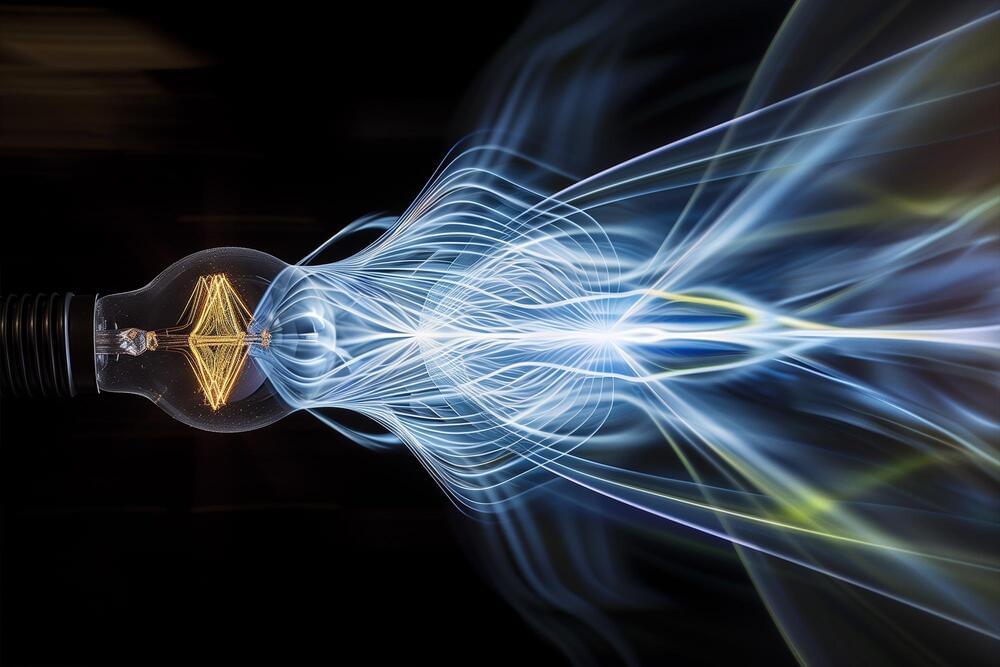

Researchers at the University of Michigan discovered a way to produce bright, twisted light using technology akin to an Edison bulb.

This breakthrough revisits the principles of blackbody radiation. It could enable advanced robotic vision systems that distinguish subtle variations in the properties of light, such as light emitted by living organisms or objects.

Bright, twisted light: a surprising innovation.

Although each rare disease occurs in a small number of individuals, collectively these diseases exact a staggering human and economic toll because they affect some 300 million people worldwide. Yet only 5 to 7 percent of these conditions have an FDA-approved drug, so most remain untreated or undertreated.

Developing new medicines represents a daunting challenge, but a new artificial intelligence tool can propel the discovery of new therapies from existing medicines, offering hope for patients with rare and neglected conditions and for the clinicians who treat them.

The AI model, called TxGNN, is the first developed specifically to identify drug candidates for rare diseases and for conditions with no treatments.

TxGNN identifies possible therapies for thousands of diseases, including ones with no current treatments.

Check out the free AMD loaner offer. Test the Ryzen PRO laptops yourself and experience the benefits they can bring to your business:

https://tinyurl.com/222dzww9

The Paper:

Indium Selenide breakthrough ➜ https://www.nature.com/articles/s41586-024-08156-8

Timestamps:

00:00 New Semiconductor

04:26 How It Works

07:23 Outlook and Alternatives

11:30 Top 5 Technologies of 2024

The videos I mentioned:

1. Probabilistic Computing: https://youtu.be/hJUHrrihzOQ

2. First Functional Graphene Chip: https://youtu.be/wGzBuspS9JI?si=saNoFiCw63B5BhPr

3. Photonic Chip: https://youtu.be/TJ8vywX9asU

4. Quantum Computing: https://youtu.be/eINcrZGDQD0

5. AI: https://youtu.be/WeYM3dn_XvM

Thumbnail image credit: Akanksha Jain.

My course on Technology and Investing ➜ https://www.anastasiintech.com/course

“Facial recognition is essential to human interaction, and we were curious about how the brain processes ambiguous or incomplete facial images—especially when they’re hidden from conscious awareness. We believe understanding these mechanisms can shed light on subconscious visual processing,” said study author Makoto Michael Martinsen, a PhD student conducting research under the Visual Perception and Cognition Laboratory and the Cognitive Neurotechnology Laboratory at the Toyohashi University of Technology.

To investigate how the brain processes face-like stimuli unconsciously, the researchers used a method called Continuous Flash Suppression (CFS). In this technique, participants were shown a dynamic series of high-contrast masking images in one eye while a target image, such as a face-like stimulus, was shown to the other eye. The rapid flashing of the mask suppressed perception of the target image, rendering it temporarily invisible to the participant. By measuring how long it took the target image to "break through" the suppression and reach conscious awareness, the researchers could infer how efficiently the brain processed the image.

The study included 24 participants, all university students aged 20 to 24, with normal or corrected-to-normal vision. They were exposed to two types of visual stimuli: grayscale images of faces and binary images resembling faces. These binary images were created using black-and-white contrasts to simulate minimal facial features, such as contours and the general arrangement of facial elements. Each image was presented in both upright and inverted orientations to assess the impact of orientation on recognition.