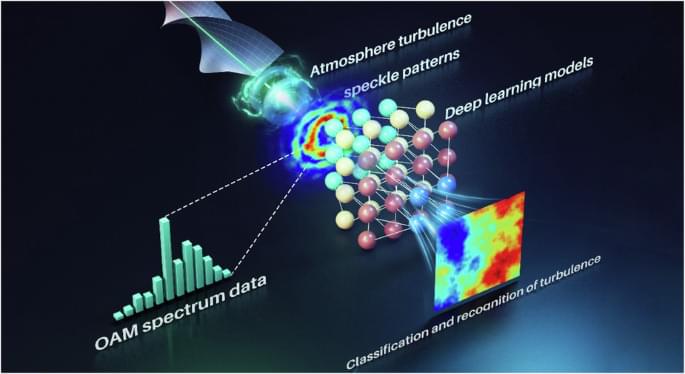

AI algorithms analyze the orbital angular momentum (OAM) spectrum and intensity patterns of twisted light as it propagates through a turbulent medium, enabling detection of the medium's key features.
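The caption does not say how the OAM spectrum is obtained. As a minimal sketch, assuming the complex field has already been sampled at N evenly spaced points on a ring around the beam axis, the spectrum can be estimated by projecting the field onto helical phase terms exp(iℓφ), a standard azimuthal Fourier decomposition. The function name `oam_spectrum`, the ring sampling and the distorted test field are illustrative assumptions, and the AI analysis itself is not shown.

```python
import cmath
import math


def oam_spectrum(field_on_ring, l_max):
    """Azimuthal Fourier decomposition of a complex field sampled on a ring.

    Returns {l: power} for topological charges -l_max..l_max, normalized so the
    powers in that window sum to 1 (assumed sampling: N equally spaced angles).
    """
    n = len(field_on_ring)
    phis = [2 * math.pi * k / n for k in range(n)]
    powers = {}
    for l in range(-l_max, l_max + 1):
        # Projection coefficient onto the helical phase exp(i * l * phi)
        c = sum(e * cmath.exp(-1j * l * phi) for e, phi in zip(field_on_ring, phis)) / n
        powers[l] = abs(c) ** 2
    total = sum(powers.values())
    return {l: p / total for l, p in powers.items()} if total > 0 else powers


if __name__ == "__main__":
    n = 64
    ring = [2 * math.pi * k / n for k in range(n)]
    pure = [cmath.exp(3j * phi) for phi in ring]
    # Hypothetical distortion: a weaker l = 2 component mixed into the l = 3 beam
    distorted = [cmath.exp(3j * phi) + 0.3 * cmath.exp(2j * phi) for phi in ring]
    print({l: round(p, 3) for l, p in oam_spectrum(pure, 4).items()})
    print({l: round(p, 3) for l, p in oam_spectrum(distorted, 4).items()})
```

A pure ℓ = 3 mode puts essentially all of its power at ℓ = 3; mixing in a weaker ℓ = 2 component, a crude stand-in for turbulence-induced mode crosstalk, spreads power into the neighboring mode. Spectra like these are the kind of features a learning model could take as input.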

A breakthrough in artificial intelligence.

Artificial Intelligence (AI) is a branch of computer science focused on creating systems that can perform tasks typically requiring human intelligence. These tasks include understanding natural language, recognizing patterns, solving problems, and learning from experience. AI technologies use algorithms and massive amounts of data to train models that can make decisions, automate processes, and improve over time through machine learning. The applications of AI are diverse, impacting fields such as healthcare, finance, automotive, and entertainment, fundamentally changing the way we interact with technology.
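The idea that models "learn" from data can be made concrete with the smallest possible case: fitting a straight line by gradient descent on the mean squared error. This is a generic textbook illustration, not any particular AI system; the function names, learning rate and epoch count are arbitrary choices made for the sketch.

```python
def train_linear(xs, ys, lr=0.01, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors for the current parameters
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient: the model "improves" a little each epoch
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(xs, ys, w, b):
    """Mean squared error of the line y = w * x + b on the data."""
    return sum(((w * x + b) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


if __name__ == "__main__":
    xs = [0, 1, 2, 3, 4]
    ys = [1, 3, 5, 7, 9]  # toy data generated by y = 2x + 1
    w, b = train_linear(xs, ys)
    print(round(w, 2), round(b, 2), mse(xs, ys, w, b))
```

With these settings the loop should recover parameters very close to w = 2 and b = 1; the same "measure error, adjust parameters, repeat" cycle underlies far larger models.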

Diverse Applications Beyond Elderly Care

While companion robots have traditionally been used to support the elderly, their utility is expanding to other demographics prone to loneliness, such as office workers and university students. Dr. Li’s research in China reveals that these groups often experience social isolation and lack the resources for meaningful companionship. The physical presence of robots like Moflin and LOVOT, which offer tactile interactions, differentiates them from virtual assistants and enhances their effectiveness in providing emotional support.

Summary: Researchers have developed a Genetic Progression Score (GPS) using artificial intelligence to predict the progression of autoimmune diseases from preclinical symptoms to full disease. The GPS model integrates genetic data and electronic health records to provide personalized risk scores, improving prediction accuracy by 25% to 1,000% over existing models.

This method identifies individuals at higher risk earlier, enabling timely interventions and better disease management. The framework could also be adapted to study other underrepresented diseases, offering a breakthrough in personalized medicine and health equity.
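The summary does not describe the GPS model's internals. Purely to illustrate what combining genetic data and electronic health records into a personalized risk score can look like, here is a hypothetical logistic combination of one genetic feature and several EHR-derived features, followed by ranking patients so the highest-risk ones are flagged first. This is not the researchers' GPS method; the `Patient` fields, weights and example values are made-up placeholders, and a real model would learn its weights from data.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Patient:
    patient_id: str
    genetic_score: float  # hypothetical standardized genetic risk feature
    ehr_features: list[float] = field(default_factory=list)  # hypothetical EHR-derived features


def progression_risk(p: Patient, w_genetic: float, w_ehr: list[float], bias: float) -> float:
    """Logistic combination of genetic and EHR features into a probability in (0, 1)."""
    z = bias + w_genetic * p.genetic_score
    z += sum(w * x for w, x in zip(w_ehr, p.ehr_features))
    return 1.0 / (1.0 + math.exp(-z))


def rank_by_risk(patients, w_genetic, w_ehr, bias):
    """Return (risk, patient_id) pairs, highest risk first, to prioritize early follow-up."""
    scored = [(progression_risk(p, w_genetic, w_ehr, bias), p.patient_id) for p in patients]
    return sorted(scored, reverse=True)


if __name__ == "__main__":
    # Illustrative inputs only: not real patient data or fitted weights.
    cohort = [
        Patient("A", genetic_score=1.2, ehr_features=[0.5, 1.0]),
        Patient("B", genetic_score=-0.4, ehr_features=[0.1, 0.0]),
        Patient("C", genetic_score=0.3, ehr_features=[1.5, 0.8]),
    ]
    for risk, pid in rank_by_risk(cohort, w_genetic=0.8, w_ehr=[0.6, 0.4], bias=-1.0):
        print(pid, round(risk, 3))
```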

Farewell, hydraulic Atlas. Hello, fully electric Atlas. Boston Dynamics just showed off the next generation of Atlas and it’s… electric.

New NVIDIA AI Blueprints for building agentic AI applications are poised to help enterprises everywhere automate work.

With the blueprints, developers can now build and deploy custom AI agents. These AI agents act like “knowledge robots” that can reason, plan and take action to quickly analyze large quantities of data and summarize and distill real-time insights from video, PDFs and other images.
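The "reason, plan and take action" pattern can be sketched as a plan-then-act loop over a registry of tools. This is a hypothetical illustration and does not use the NVIDIA AI Blueprints or any NVIDIA API: the rule-based `plan` function stands in for the reasoning a language model would perform, and the `summarize` and `word_count` tools are toy placeholders.

```python
from typing import Callable

Tool = Callable[[str], str]


def summarize(text: str) -> str:
    """Toy summarizer: keep only the first sentence (a stand-in for a model call)."""
    first = text.split(". ")[0].rstrip(".")
    return first + "."


def word_count(text: str) -> str:
    """Toy analysis tool: count whitespace-separated words."""
    return str(len(text.split()))


TOOLS: dict[str, Tool] = {"summarize": summarize, "word_count": word_count}


def plan(goal: str) -> list[str]:
    """Hypothetical rule-based planner standing in for an LLM's reasoning step."""
    goal = goal.lower()
    steps = []
    if "summar" in goal:
        steps.append("summarize")
    if "count" in goal or "length" in goal:
        steps.append("word_count")
    return steps


def run_agent(goal: str, document: str) -> dict[str, str]:
    """Plan once, then act by calling each chosen tool and collecting its result."""
    results: dict[str, str] = {}
    for tool_name in plan(goal):
        results[tool_name] = TOOLS[tool_name](document)
    return results


if __name__ == "__main__":
    doc = "Agents analyze large quantities of data. They then report insights."
    print(run_agent("Summarize this and count its words", doc))
```

Keeping tools in a name-to-function registry means the planner only has to output tool names, which is what makes it easy to swap the rule-based stub for a model-driven planner.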

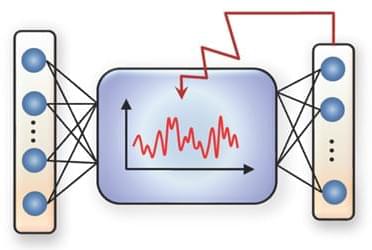

Multimodal retrieval-augmented generation (RAG) is transforming how AI applications handle complex information by merging retrieval and generation capabilities across diverse data types, such as text, images, and video.

Unlike traditional RAG, which typically focuses on text-based retrieval and generation, multimodal RAG systems can pull in relevant content from both text and visual sources to generate more contextually rich, comprehensive responses.
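The retrieval half of multimodal RAG can be sketched by placing text, image and video items in one shared embedding space and ranking them by cosine similarity to the query, then packing the top hits into a prompt. This minimal sketch assumes the embeddings are already computed (in practice by a joint text-image encoder such as CLIP); the 3-dimensional vectors, the `Item` type and the prompt format are illustrative assumptions, and the generation step is left to whatever model consumes the prompt.

```python
import math
from dataclasses import dataclass


@dataclass
class Item:
    modality: str           # "text", "image" or "video"
    content: str            # the text itself, or a caption/reference for visual items
    embedding: list[float]  # vector in a shared embedding space (assumed precomputed)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_embedding: list[float], corpus: list[Item], k: int = 2) -> list[Item]:
    """Rank items of every modality by similarity to the query and keep the top k."""
    ranked = sorted(corpus, key=lambda it: cosine(query_embedding, it.embedding), reverse=True)
    return ranked[:k]


def build_prompt(question: str, hits: list[Item]) -> str:
    """Assemble retrieved text and visual context into one prompt for a generator model."""
    context = "\n".join(f"[{it.modality}] {it.content}" for it in hits)
    return f"Context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    # Toy 3-d embeddings; a real system would use a multimodal encoder.
    corpus = [
        Item("text", "Quarterly report: revenue rose in Q3.", [0.9, 0.1, 0.0]),
        Item("image", "chart.png: bar chart of revenue by quarter", [0.8, 0.2, 0.1]),
        Item("video", "keynote.mp4: product demo", [0.0, 0.2, 0.9]),
    ]
    hits = retrieve([1.0, 0.1, 0.0], corpus, k=2)
    print(build_prompt("How did revenue change in Q3?", hits))
```

Because every modality lives in the same vector space, a single similarity ranking can surface a chart image alongside a text passage, which is the difference from text-only RAG described above.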