Mondal, S., Maity, R. & Nag, A. Sci Rep 15, 4827 (2025). https://doi.org/10.1038/s41598-025-85765-x.

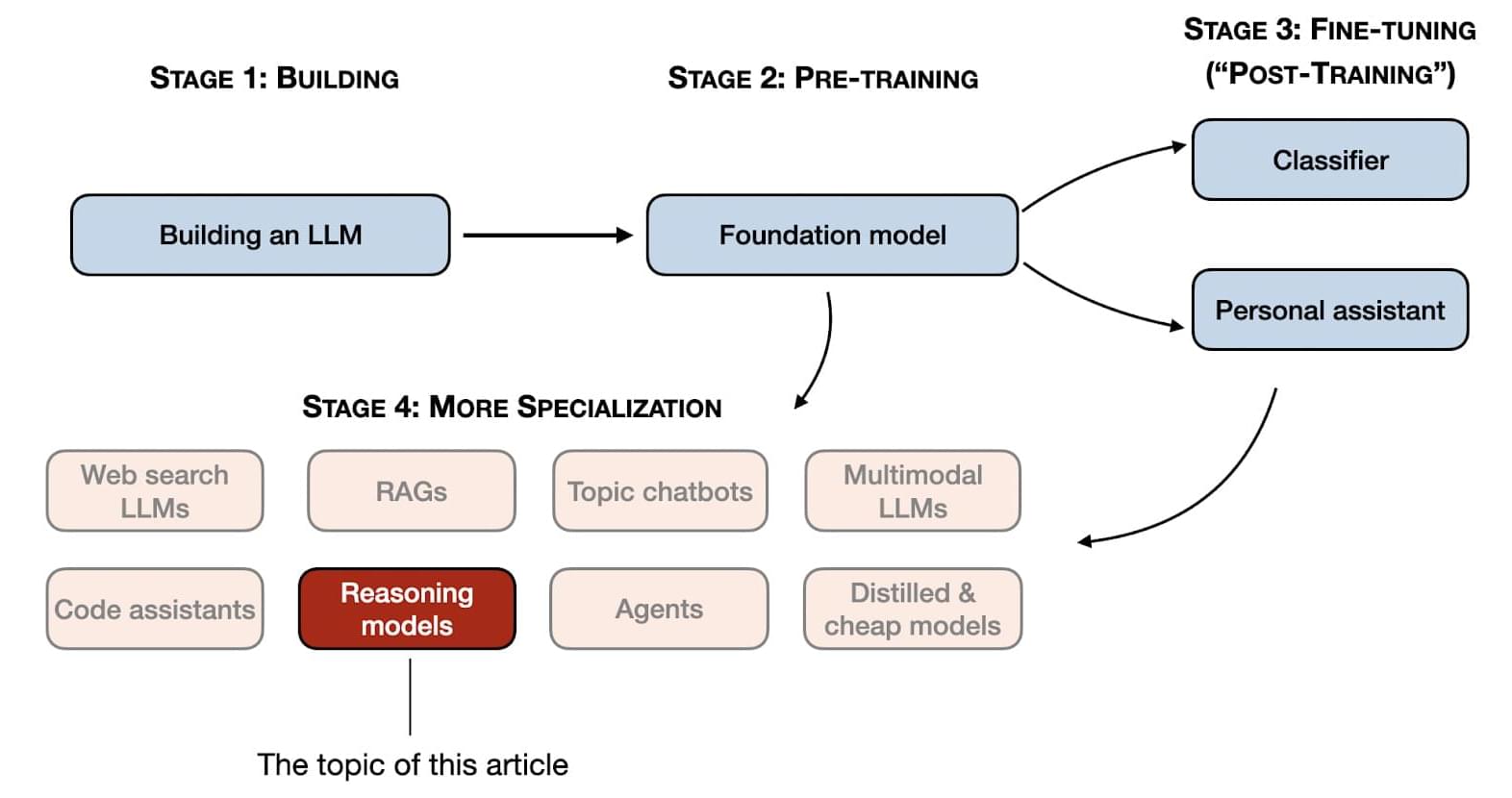

A small team of AI researchers from Stanford University and the University of Washington has found a way to train an AI reasoning model for a fraction of the price paid by big corporations that produce widely known products such as ChatGPT. The group has posted a paper on the arXiv preprint server describing their efforts to inexpensively train chatbots and other AI reasoning models.

Corporations such as Google and Microsoft have made clear their intentions to be leaders in the development of chatbots with ever-improving skills. These efforts are notoriously expensive and tend to involve the use of energy-intensive server farms.

More recently, a Chinese company called DeepSeek released an LLM that matches the capabilities of those being produced in the West but was developed at far lower cost. That announcement sent stock prices for many tech companies into a nosedive.

Extraterrestrial landers sent to gather samples from the surface of distant moons and planets have limited time and battery power to complete their mission. Aerospace and computer science engineering researchers at The Grainger College of Engineering, University of Illinois Urbana-Champaign trained a model to autonomously assess and scoop quickly, then watched it demonstrate its skill on a robot at a NASA facility.

Aerospace Ph.D. student Pranay Thangeda said they trained their robotic lander arm to collect scooping data on a variety of materials, from sand to rocks, resulting in a database of 6,700 points of knowledge. The two terrains in NASA’s Ocean World Lander Autonomy Testbed at the Jet Propulsion Laboratory were brand new to the model that operated the JPL robotic arm remotely.

The study, “Learning and Autonomy for Extraterrestrial Terrain Sampling: An Experience Report from OWLAT Deployment,” was published in the AIAA Scitech Forum.

In today’s AI news, OpenAI released its o3-mini model one week ago, offering both free and paid users a more accurate, faster, and cheaper alternative to o1-mini. Now, OpenAI has revised o3-mini to include an updated chain of thought.

In other advancements, Hugging Face and Physical Intelligence have quietly launched Pi0 (Pi-Zero) this week, the first foundational model for robots that translates natural language commands directly into physical actions. “Pi0 is the most advanced vision language action model,” said Remi Cadene, a research scientist at Hugging Face.

And, one year later, the Rabbit R1 is actually good now. It launched to reviews like “avoid this AI gadget”, but 12 months have passed. Where is the Rabbit R1 now? Well, with a relentless pipeline of updates and novel AI ideas… it’s actually pretty good now!?

In videos, moderator Shirin Ghaffary (Reporter, Bloomberg News) leads an expert panel that includes Chase Lochmiller (Crusoe, CEO), Costi Perricos (Deloitte, Global GenAI Business Leader), and Varun Mohan (Codeium, Co-Founder and CEO). The panel asks: how are we building the infrastructure to support this massive global technological revolution?

Meanwhile, humans are terrible at detecting lies, says psychologist Riccardo Loconte… but what if we had an AI-powered tool to help? He introduces his team’s work successfully training an AI to recognize falsehoods.

And, Mo Gawdat, the former Chief Business Officer for Google X, bestselling author, the founder of ‘One Billion Happy’ foundation, and co-founder of ‘Unstressable,’ joins Professor Scott Galloway to discuss the state of AI — where it stands today, how it’s evolving, and what that means for our future.

We close out with Dialogue at UTokyo GlobE, which held an event with OpenAI CEO Sam Altman and CPO Kevin Weil. President Teruo Fujii and Executive Vice President Kaori Hayashi welcomed the two guests, along with 36 students whose majors ranged from engineering to medicine to philosophy.

That’s all for today, but AI is moving fast, so like, comment, and subscribe for more AI news! Please vote for me in the Entrepreneur of Impact Competition today! Thank you for supporting my partners and me; it’s how I keep Neural News Network free.

This OpenAI update is available to free and paid users and could make getting the results you want easier.

A Canadian startup called Xanadu has built a new quantum computer it says can be easily scaled up to achieve the computational power needed to tackle scientific challenges ranging from drug discovery to more energy-efficient machine learning.

Aurora is a “photonic” quantum computer, which means it crunches numbers using photonic qubits—information encoded in light. In practice, this means combining and recombining laser beams on multiple chips using lenses, fibers, and other optics according to an algorithm. Xanadu’s computer is designed in such a way that the answer to an algorithm it executes corresponds to the final number of photons in each laser beam. This approach differs from one used by Google and IBM, which involves encoding information in properties of superconducting circuits.

Japanese researchers have crafted a revolutionary device that can record and play back your dreams for you to watch. Yes, you read that right! Imagine being able to watch your dreams as if they were movies… This machine enables you to do something similar. This incredible technology employs advancements in brain imaging and artificial intelligence (AI), allowing one to delve into the mysterious realms of dreams in exceptional ways.

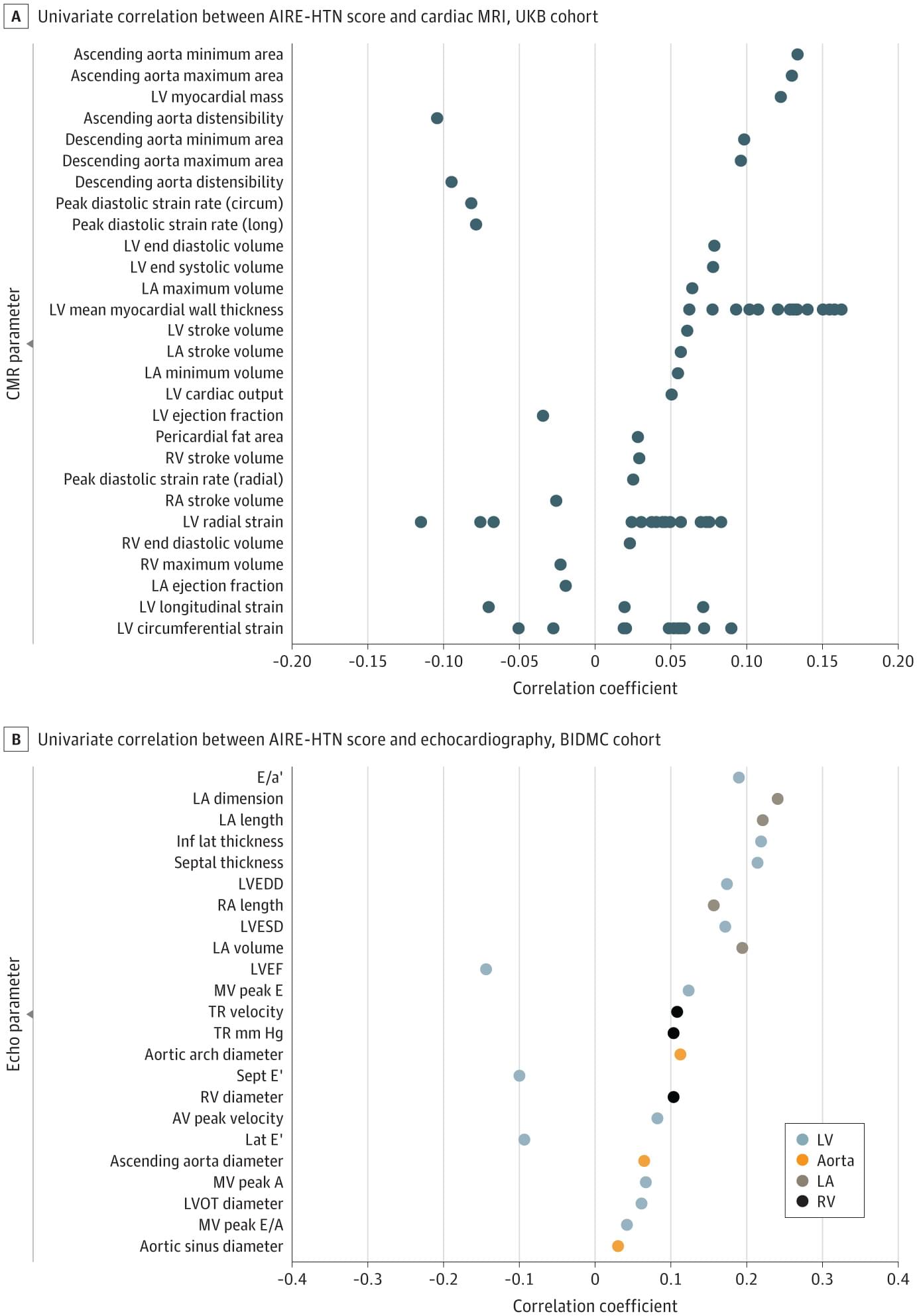

Question: Can an electrocardiography (ECG)–based artificial intelligence risk estimator for hypertension (AIRE-HTN) predict incident hypertension and stratify risk for incident hypertension-associated adverse events?

Findings: In this prognostic study including an ECG algorithm trained on 189 539 patients at Beth Israel Deaconess Medical Center and externally validated on 65 610 patients from UK Biobank, AIRE-HTN predicted incident hypertension and stratified risk for cardiovascular death, heart failure, myocardial infarction, ischemic stroke, and chronic kidney disease.

Meaning: Results suggest that AIRE-HTN can predict the development of hypertension and may identify at-risk patients for enhanced surveillance.