Forget DJI drones – the Falcon Mini looks like way more fun to fly.

AUSTIN (KXAN) — Beep beep — Uber rideshare users can now book a driverless ride around Austin, as the ride-hailing company officially launches its partnership with Waymo.

In September, Waymo and Uber announced their planned collaboration, with rollouts slated for both Austin and Atlanta in 2025. Beginning Tuesday, Uber users can be matched with one of Waymo’s Jaguar I-PACE vehicles when booking an UberX, Uber Green, Uber Comfort or Uber Comfort Electric ride.

“Starting today, Austin riders can be matched with a Waymo autonomous vehicle on the Uber app, making their next trip even more special,” Uber CEO Dara Khosrowshahi said in the announcement. “With Waymo’s technology and Uber’s proven platform, we’re excited to introduce our customers to a future of transportation that is increasingly electric and autonomous.”

REDMOND, Wash. — March 3, 2025 — On Monday, Microsoft Corp. is unveiling Microsoft Dragon Copilot, the first AI assistant for clinical workflow that brings together the trusted natural language voice dictation capabilities of Dragon Medical One (DMO) with the ambient listening capabilities of Dragon Ambient eXperience (DAX), fine-tuned generative AI and healthcare-adapted safeguards. Part of Microsoft Cloud for Healthcare, Dragon Copilot is built on a secure modern architecture that enables organizations to deliver enhanced experiences and outcomes across care settings for providers and patients alike.

Clinician burnout in the U.S. dropped from 53% in 2023 to 48% in 2024, in part due to technology advancements. However, with an aging population, and persistent burnout felt across the profession, a significant U.S. workforce shortage is projected. In response, health systems are adopting AI to streamline administrative tasks, enhance care access, and enable faster clinical insights to improve healthcare globally.

“At Microsoft, we have long believed that AI has the incredible potential to free clinicians from much of the administrative burden in healthcare and enable them to refocus on taking care of patients,” said Joe Petro, corporate vice president of Microsoft Health and Life Sciences Solutions and Platforms. “With the launch of our new Dragon Copilot, we are introducing the first unified voice AI experience to the market, drawing on our trusted, decades-long expertise that has consistently enhanced provider wellness and improved clinical and financial outcomes for provider organizations and the patients they serve.”

Anthropic closed a $3.5 billion series E funding round, valuing the AI company at $61.5 billion post-money, the firm announced today. Lightspeed Venture Partners led the round with a $1 billion contribution, cementing Anthropic’s status as one of the world’s most valuable private companies and demonstrating investors’ unwavering appetite for leading AI developers despite already astronomical valuations.
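The valuation figures above imply a few numbers that the paragraph leaves implicit. The sketch below works them out under simplifying assumptions that the article does not confirm: the whole $3.5 billion is treated as new primary equity priced at the $61.5 billion post-money valuation, with no secondary sales, convertible notes or other instruments. The helper names are illustrative only.

```python
# Figures from the article, in billions of US dollars.
POST_MONEY = 61.5       # post-money valuation
ROUND_SIZE = 3.5        # size of the series E round
LEAD_INVESTMENT = 1.0   # Lightspeed Venture Partners' contribution


def pre_money(post_money: float, new_money: float) -> float:
    """Pre-money valuation = post-money valuation minus the new capital raised."""
    return post_money - new_money


def ownership(investment: float, post_money: float) -> float:
    """Fraction of the company bought, assuming shares are priced at the post-money value."""
    return investment / post_money


pre = pre_money(POST_MONEY, ROUND_SIZE)
round_stake = ownership(ROUND_SIZE, POST_MONEY)
lead_stake = ownership(LEAD_INVESTMENT, POST_MONEY)

print(f"Implied pre-money valuation: ${pre:.1f}B")     # $58.0B
print(f"Stake sold in the round: {round_stake:.1%}")   # 5.7%
print(f"Lightspeed's implied stake: {lead_stake:.1%}") # 1.6%
```

Real term sheets often include option-pool adjustments and mixed instruments, so treat these as back-of-the-envelope figures rather than reported ownership.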

The financing attracted participation from an impressive roster of investors including Salesforce Ventures, Cisco Investments, Fidelity Management & Research Company, General Catalyst, D1 Capital Partners, Jane Street, Menlo Ventures and Bessemer Venture Partners.

With this investment, Anthropic will advance its development of next-generation AI systems, expand its compute capacity, deepen its research in mechanistic interpretability and alignment, and accelerate its international expansion, the company said in its announcement.

Anthropic’s dramatic valuation reflects its exceptional commercial momentum. The company’s annualized revenue reached $1 billion by December 2024, representing a tenfold increase year-over-year, according to people familiar with the company’s finances. That growth has accelerated further, with revenue reportedly increasing by 30% in just the first two months of 2025, according to a Bloomberg report.

Founded in 2021 by former OpenAI researchers including siblings Dario and Daniela Amodei, Anthropic has positioned itself as a more research-focused and safety-oriented alternative to its chief rival. The company’s Claude chatbot has gained significant market share since its public launch in March 2023, particularly in enterprise applications.

Krishna Rao, Anthropic’s CFO, said in a statement that the investment “fuels our development of more intelligent and capable AI systems that expand what humans can achieve,” adding that continued advances in scaling across all aspects of model training are powering breakthroughs in intelligence and expertise.

A new high-performance quantum processor boasts 105 superconducting qubits and rivals Google’s acclaimed Willow processor.

In the quest for useful quantum computers, processors based on superconducting qubits are especially promising. These devices are both programmable and capable of error correction. In December 2024, researchers at Google Quantum AI in California reported a 105-qubit superconducting processor known as Willow (see Research News: Cracking the Challenge of Quantum Error Correction) [1]. Now Jian-Wei Pan at the University of Science and Technology of China and colleagues have demonstrated their own 105-qubit processor, Zuchongzhi 3.0 (Fig. 1) [2]. The two processors have similar performances, indicating a neck-and-neck race between the two groups.

Quantum advantage is the claim that a quantum computer can perform a specific task faster than the most powerful nonquantum, or classical, computer. A standard task for this purpose is called random circuit sampling, and it works as follows. The quantum computer applies a sequence of randomly ordered operations, known as a random circuit, to a set of qubits. This circuit transforms the qubits in a unique and complex way. The computer then measures the final states of the qubits. By repeating this process many times with different random circuits, the quantum computer records a probability distribution of final qubit states.
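The sampling procedure described above can be imitated at toy scale. The sketch below simulates one random circuit exactly on a classical computer (feasible only because it uses 4 qubits) and then draws repeated "measurements" from the resulting distribution. The gate set (random Euler-angle rotations plus CZ gates on alternating neighbor pairs of a 1D chain) and all function names are illustrative assumptions, not the actual gate sets or 2D qubit layouts of Zuchongzhi 3.0 or Willow.

```python
import cmath
import math
import random
from collections import Counter


def random_1q_gate(rng):
    """Random single-qubit rotation Rz(a) Ry(b) Rz(c) with uniformly drawn angles.

    Uniform Euler angles are not Haar-random; this is only an illustration.
    """
    a, b, c = (rng.uniform(0.0, 2.0 * math.pi) for _ in range(3))
    cos_b, sin_b = math.cos(b / 2), math.sin(b / 2)
    return [
        [cmath.exp(-0.5j * (a + c)) * cos_b, -cmath.exp(-0.5j * (a - c)) * sin_b],
        [cmath.exp(0.5j * (a - c)) * sin_b, cmath.exp(0.5j * (a + c)) * cos_b],
    ]


def apply_1q(state, q, gate):
    """Apply a 2x2 gate to qubit q of a statevector (qubit 0 = least significant bit)."""
    new = state[:]
    bit = 1 << q
    for i in range(len(state)):
        if not i & bit:  # i has qubit q = 0; j is its partner with qubit q = 1
            j = i | bit
            a0, a1 = state[i], state[j]
            new[i] = gate[0][0] * a0 + gate[0][1] * a1
            new[j] = gate[1][0] * a0 + gate[1][1] * a1
    return new


def apply_cz(state, q1, q2):
    """Controlled-Z: flip the sign of every amplitude where both qubits are 1."""
    mask = (1 << q1) | (1 << q2)
    return [-amp if (i & mask) == mask else amp for i, amp in enumerate(state)]


def random_circuit(n, depth, rng):
    """Each layer: a random 1-qubit gate on every qubit, then CZs on alternating neighbor pairs."""
    layers = []
    for d in range(depth):
        gates = [random_1q_gate(rng) for _ in range(n)]
        pairs = [(q, q + 1) for q in range(d % 2, n - 1, 2)]
        layers.append((gates, pairs))
    return layers


def simulate(n, layers):
    """Exact statevector simulation starting from |00...0>."""
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j
    for gates, pairs in layers:
        for q, gate in enumerate(gates):
            state = apply_1q(state, q, gate)
        for q1, q2 in pairs:
            state = apply_cz(state, q1, q2)
    return state


def sample(state, shots, rng):
    """'Measure' all qubits `shots` times: draw basis states with probability |amplitude|^2."""
    probs = [abs(amp) ** 2 for amp in state]
    return rng.choices(range(len(state)), weights=probs, k=shots)


rng = random.Random(2025)
n_qubits, depth = 4, 8
circuit = random_circuit(n_qubits, depth, rng)
state = simulate(n_qubits, circuit)
shots = sample(state, 2000, rng)
# Bitstrings are printed with qubit 0 as the rightmost character.
counts = Counter(format(x, f"0{n_qubits}b") for x in shots)
print(counts.most_common(3))
```

With 4 qubits the statevector holds only 16 amplitudes. It doubles with every added qubit, so a 105-qubit processor would need 2^105 amplitudes, which is why exact classical simulation of such circuits is out of reach and why random circuit sampling is used as a benchmark.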

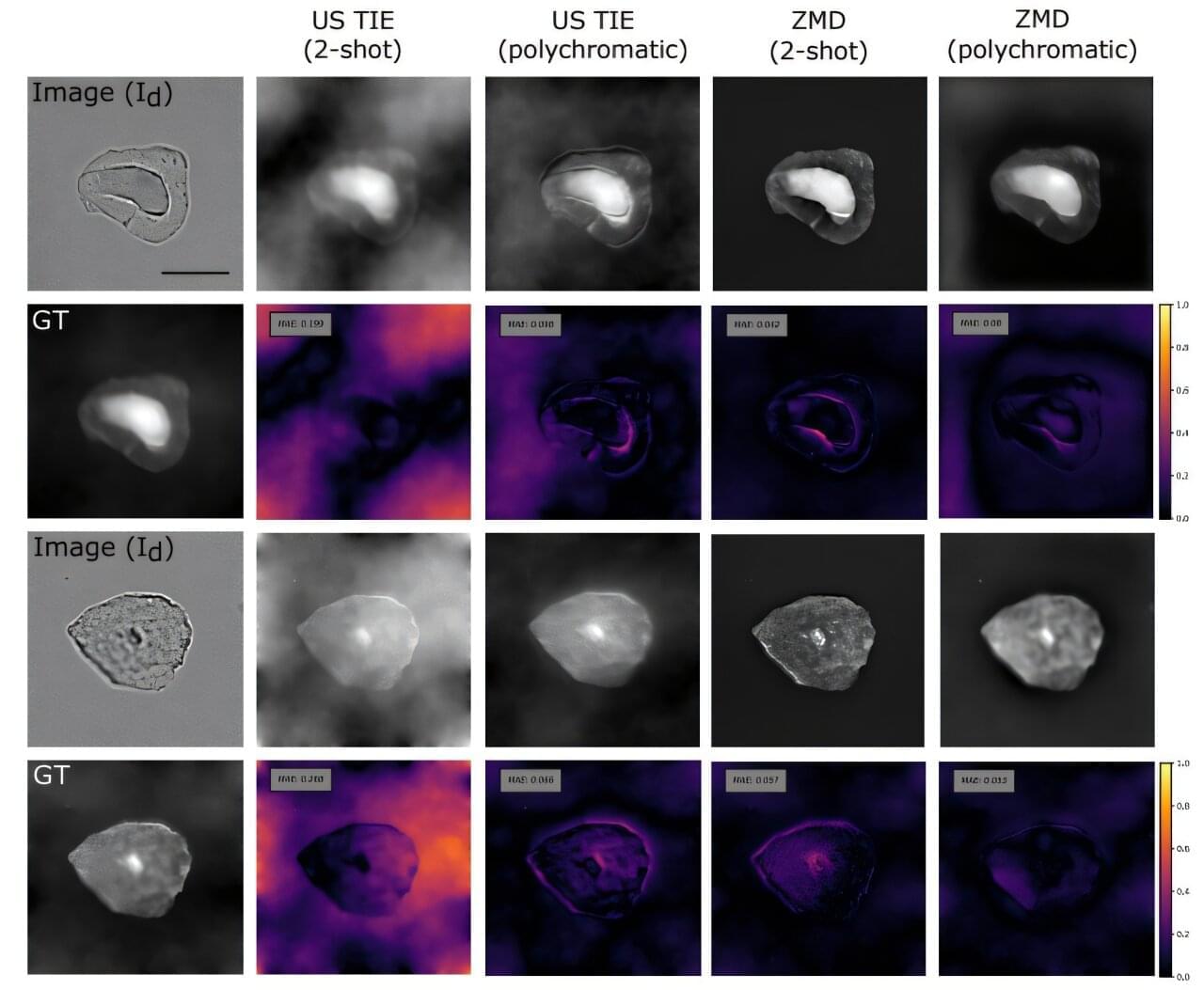

Quantitative phase imaging (QPI) is a microscopy technique widely used to investigate cells. Although biomedical applications of QPI have already been developed, both acquisition speed and image quality must improve before the technique can be widely adopted.

Scientists from the Görlitz-based Center for Advanced Systems Understanding (CASUS) at Helmholtz-Zentrum Dresden-Rossendorf (HZDR), together with Imperial College London and University College London, suggest leveraging an optical phenomenon called chromatic aberration, which usually degrades image quality, to produce suitable images with standard microscopes.

From connectome to computation: predicting neural function with machine learning

Janne Lappalainen, University of Tübingen & Tübingen AI Center

Presentation and Q&A

At the Carboncopies Foundation February 2025 workshop:

The brain emulation challenge: functionalizing brain data, ground-truthing and the role of artificial data in advancing neuroscience.

*This video was recorded at Foresight’s Whole Brain Emulation Workshop 2023.*

https://foresight.org/whole-brain-emulation-workshop-2023/

*Niccolò Zanichelli, Università degli Studi di Parma*

What can AI do for Whole Brain Emulation?

https://www.linkedin.com/in/niccol%C3%B2-zanichelli-99a7881a3/

WBE is a potential technology for creating software intelligence that is human-aligned simply because it is based directly on human brains. Past discussions have generally assumed a fairly long timeline to WBE, while AGI timelines carried broad uncertainty. There were also concerns that the neuroscience of WBE might boost AGI capability development without helping safety, although no consensus developed. Recently, many people have updated their AGI timelines toward earlier development, raising safety concerns. This has led some to consider whether WBE development could be significantly sped up, re-ordering the arrival of technologies (differential technology development) so that the presence of aligned software intelligence might lessen the risk of unaligned AGI.

Whether this is a viable strategy depends on:

(1) AGI timelines not being ultra-short;

(2) whether WBE development can be sped up significantly by a concerted effort; and

(3) whether this speedup can avoid introducing other risks or ethical concerns.

The goals of this workshop are to:

(A) review the current state of the art in WBE-related technology;

(B) outline plausible development paths and the steps necessary for full WBE;

(C) determine whether there is potential for speeding up WBE development; and

(D) assess whether there are strategic, risk, or ethical issues that argue against doing so.

This two-day event invites leading researchers, entrepreneurs, and funders to drive progress. Explore new opportunities, form lasting collaborations, and join us in fostering cooperation toward shared long-term goals. The program includes mentorship hours, breakouts, and a speaker and sponsor gathering.

“A good ratio of oxygen to methane is key to combustion,” said Justin Long.

Can methane flare burners be improved so that less methane escapes unburned? A recent study published in Industrial & Engineering Chemistry Research addresses this question: a team of researchers from the University of Michigan (U-M) and the Southwest Research Institute (SwRI) developed a methane flare burner with greater combustion stability and efficiency than traditional flare burners. The work could lead to more environmentally friendly burners that help combat human-caused climate change, since methane is a far more potent greenhouse gas than carbon dioxide.

For the study, the researchers combined machine learning with novel manufacturing methods to test several designs of a methane flare burner under crosswinds that simulate real-world environments. The burner splits the methane flow in three directions while letting oxygen carried by the crosswind mix with the methane, enabling much cleaner combustion. The researchers found that their design achieves 98 percent combustion efficiency, meaning that roughly 98 percent of the methane is burned and only about 2 percent escapes unburned.
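A combustion efficiency is easiest to interpret through the methane that slips through unburned. The sketch below shows that arithmetic; the 1,000 kg of methane and the 92 percent "conventional flare" efficiency are hypothetical numbers chosen for illustration, not values from the study, and efficiency is simplified to the fraction of incoming methane that is burned.

```python
def unburned_methane(methane_in: float, efficiency: float) -> float:
    """Methane leaving the flare unburned, for an efficiency between 0 and 1."""
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must be between 0 and 1")
    return methane_in * (1.0 - efficiency)


def relative_reduction(old_efficiency: float, new_efficiency: float) -> float:
    """Fractional cut in unburned methane when efficiency rises from old to new."""
    old_slip = 1.0 - old_efficiency
    new_slip = 1.0 - new_efficiency
    return (old_slip - new_slip) / old_slip


methane_in = 1000.0  # kg of methane routed to the flare (hypothetical)
baseline = 0.92      # hypothetical efficiency of a conventional flare in a crosswind
improved = 0.98      # efficiency reported for the new design

print(f"Unburned at {baseline:.0%}: {unburned_methane(methane_in, baseline):.1f} kg")  # 80.0 kg
print(f"Unburned at {improved:.0%}: {unburned_methane(methane_in, improved):.1f} kg")  # 20.0 kg
print(f"Reduction in unburned methane: {relative_reduction(baseline, improved):.0%}")  # 75%
```

The comparison shows why a few points of efficiency matter: moving from 92 to 98 percent cuts methane slip by three quarters, even though the efficiency figure itself changes by only six points.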

“A good ratio of oxygen to methane is key to combustion,” said Justin Long, who is a Senior Research Engineer at SwRI. “The surrounding air needs to be captured and incorporated to mix with the methane, but too much can dilute it. U-M researchers conducted a lot of computational fluid dynamics work to find a design with an optimal air-methane balance, even when subjected to high-crosswind conditions.”
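The balance Long describes can be illustrated with textbook combustion stoichiometry: CH4 + 2 O2 → CO2 + 2 H2O, so each mole of methane needs about 9.5 moles of air. The sketch below computes the equivalence ratio (actual fuel-to-air ratio divided by the stoichiometric one) and checks a mixture against the commonly cited flammability limits of methane in air (about 5 to 15 percent by volume). It treats the mixture as premixed, which is a simplification; a real flare is a diffusion flame, and this is not the CFD method the U-M researchers used. The function names are illustrative.

```python
O2_MOLE_FRACTION_AIR = 0.2095  # O2 in dry air
O2_PER_CH4 = 2.0               # CH4 + 2 O2 -> CO2 + 2 H2O
STOICH_AIR_PER_CH4 = O2_PER_CH4 / O2_MOLE_FRACTION_AIR  # about 9.5 mol air per mol CH4

# Commonly cited flammability limits of methane in air (volume fraction, ambient conditions).
LOWER_LIMIT = 0.05
UPPER_LIMIT = 0.15


def equivalence_ratio(ch4_mol: float, air_mol: float) -> float:
    """phi = actual fuel/air ratio divided by the stoichiometric fuel/air ratio."""
    return (ch4_mol / air_mol) * STOICH_AIR_PER_CH4


def classify_mixture(ch4_mol: float, air_mol: float) -> str:
    """Compare the methane volume fraction with the flammability limits."""
    x_ch4 = ch4_mol / (ch4_mol + air_mol)
    if x_ch4 < LOWER_LIMIT:
        return "too lean: excess air dilutes the methane"
    if x_ch4 > UPPER_LIMIT:
        return "too rich: not enough air"
    return "within flammability limits"


for air in (4.0, STOICH_AIR_PER_CH4, 30.0):
    phi = equivalence_ratio(1.0, air)
    print(f"air/CH4 = {air:5.2f} mol/mol  phi = {phi:4.2f}  -> {classify_mixture(1.0, air)}")
```

The third case mirrors the quote's warning: capturing too much crosswind air pushes the local mixture below the lean limit, even though more oxygen is available.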

Technology may one day grant us a Utopia in which virtually all tasks are performed by robots and artificial intelligence. In such a post-scarcity civilization, people may have difficulty finding a purpose to existence. Today we will explore how this may come about, what the consequences of this existential threat might be, and what purposes people may find for themselves in such a future.

Join this channel to get access to perks: / @isaacarthursfia

Visit our Website: http://www.isaacarthur.net

Join Nebula: https://go.nebula.tv/isaacarthur

Support us on Patreon: / isaacarthur

Support us on Subscribestar: https://www.subscribestar.com/isaac-a…

Facebook Group: / 1583992725237264

Reddit: / isaacarthur

Twitter: / isaac_a_arthur on Twitter and RT our future content.

SFIA Discord Server: / discord

Listen or Download the audio of this episode from SoundCloud: Episode’s Audio-only version: / purpose

Episode’s Narration-only version: / purpose-narration-only

Credits: Post-Scarcity Civilizations: Purpose, ep 140, Season 4, Episode 26

Writers: Isaac Arthur

Editors: A.T. Long, Darius Said, Dillon Olander, Jerry Guern, Justin Dixon, Mark Warburton, Matthew Acker, Matthew Campbell

Producer: Isaac Arthur

Cover Artist: Jakub Grygier https://www.artstation.com/jakub_grygier

Graphics Team: Jeremy Jozwik, Justin Dixon, Ken York, Kristijan Tavcar

Narrator: Isaac Arthur

Music Manager: Luca De Rosa

Music: Dracovallis, “Cynthia” https://dracovallis.bandcamp.com/; Stellardrone, “Breathe In The Light” https://stellardrone.bandcamp.com; Kai Engel, “Endless Story About Sun and Moon” https://www.kai-engel.com/; Chris Zabriskie, “A New Day in a New Sector” http://chriszabriskie.com; Kai Engel, “Morbid_Imagination” https://www.kai-engel.com/; Aerium, “The Islands moved while I was asleep” / @officialaerium; Lombus, “Cosmic Soup” https://lombus.bandcamp.com; Brandon Liew, “Into the Storm”
