The Oxyde would carry robots to the asteroid belt between Mars and Jupiter, opening up access to rare space metals.

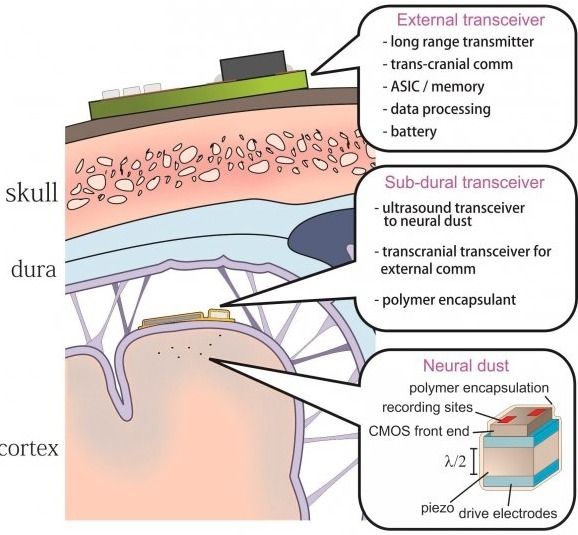

It’s the year 2021. A quadriplegic patient has just had one million “neural lace” microparticles injected into her brain, making her the world’s first human with an internet communication system that uses a wireless implanted brain-mind interface and empowering her as the first superhuman cyborg. …

No, this is not a science-fiction movie plot. It’s the actual first public step, just four years from now, in Tesla CEO Elon Musk’s business plan for his latest venture, Neuralink. The plan is now explained for the first time on Tim Urban’s WaitButWhy blog.

The fledgling “flying car” industry just received a major boost as Google cofounder Larry Page officially launched his new startup out of stealth.

Founded in 2015, Kitty Hawk has been known to exist for some time already, but until now we’ve had no real idea what it was about, beyond it having something to do with flying cars. But earlier this morning, company CEO Sebastian Thrun, who once headed up Google’s self-driving car efforts and later went on to found online learning platform Udacity, tweeted out a link to the company’s website and Twitter page. Kitty Hawk’s website now offers some clue as to what we can expect.

It transpires that Kitty Hawk isn’t working on a “flying car” as such; instead, it’s working on a personal “ultralight aircraft” designed to be flown in “uncongested” areas, particularly over fresh water. It can’t be flown in cities. And, crucially, it doesn’t require a pilot’s license, which opens things up to widespread recreational use, though this will naturally raise some safety concerns.

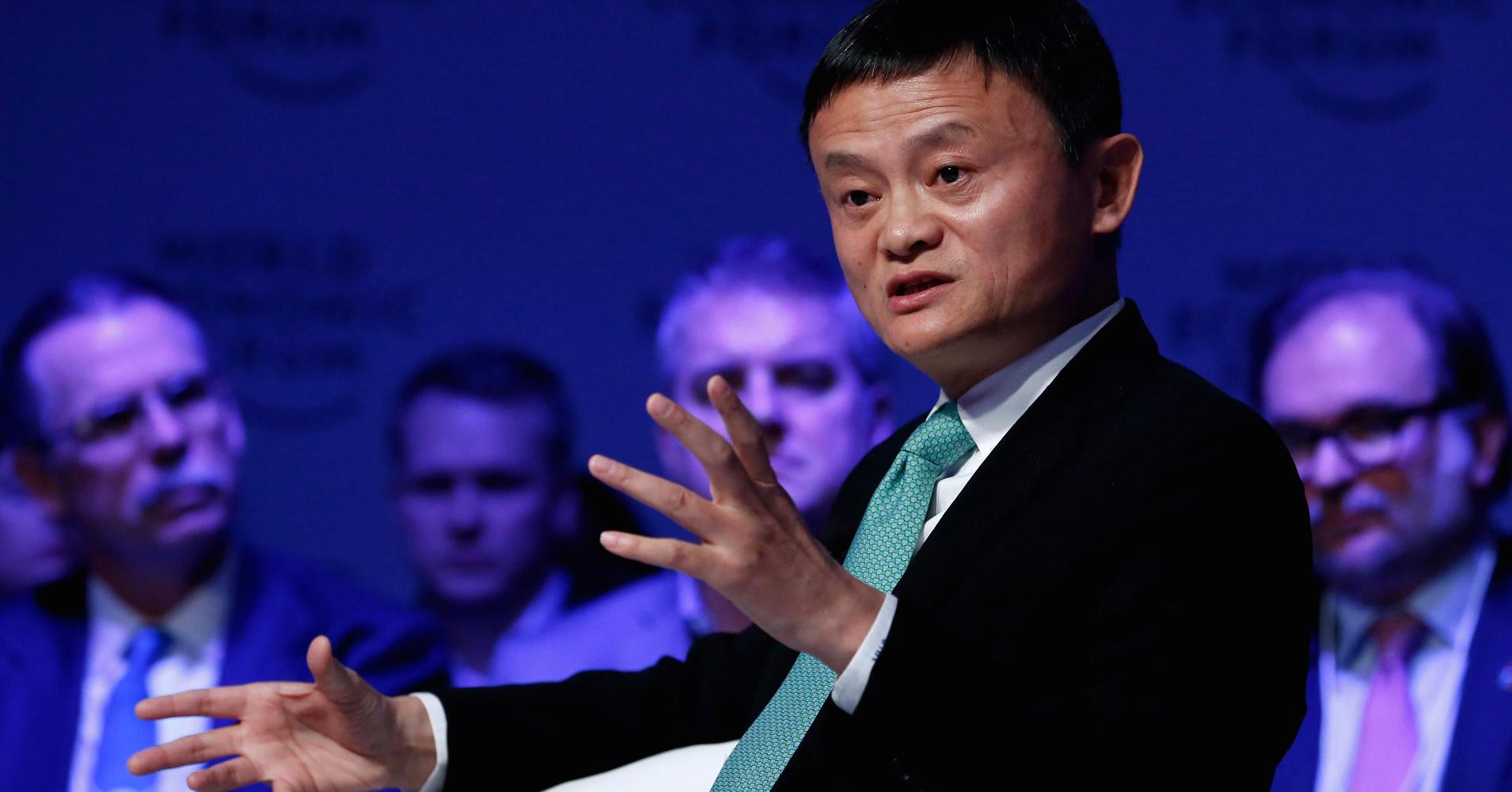

Alibaba Chairman Jack Ma warned on Monday that society could see decades of pain thanks to disruption caused by the internet and new technologies to different areas of the economy.

In a speech at a China Entrepreneur Club event, the billionaire urged governments to bring in education reform and outlined how humans need to work with machines.

“In the coming 30 years, the world’s pain will be much more than happiness, because there are many more problems that we have come across,” Ma said in Chinese, speaking about potential job disruptions caused by technology.

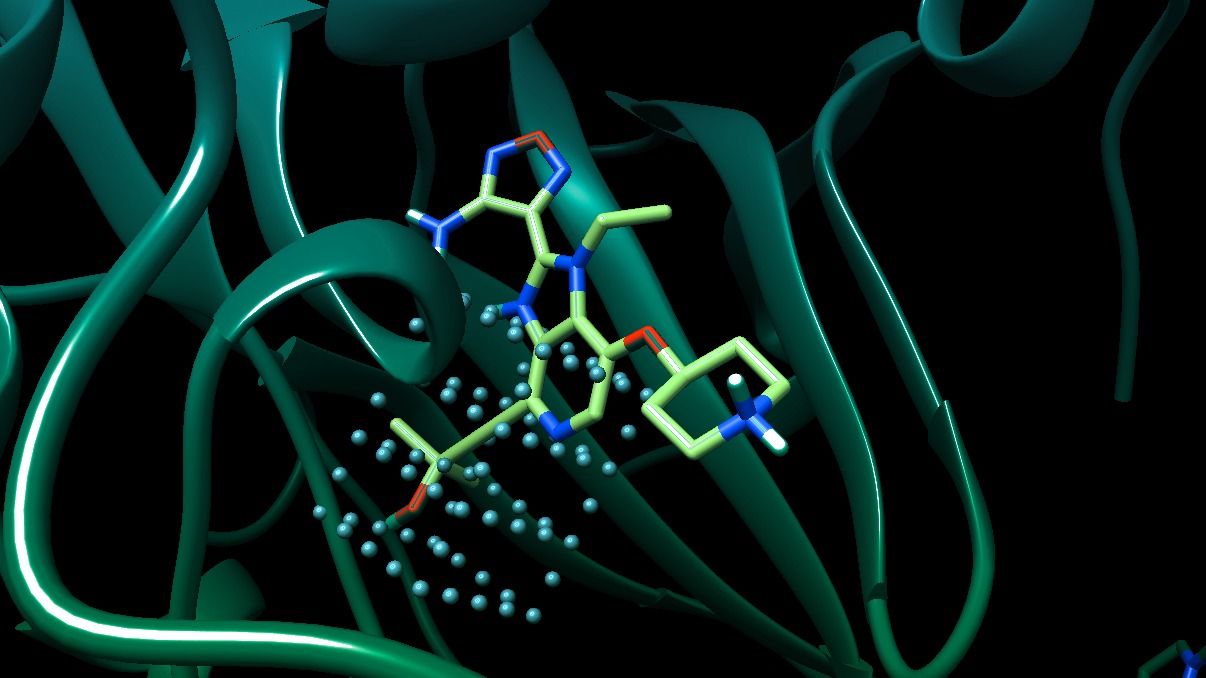

Artificial intelligence algorithms are being taught to generate art, human voices, and even fiction stories all on their own—why not give them a shot at building new ways to treat disease?

Atomwise, a San Francisco-based startup and Y Combinator alum, has built a system it calls AtomNet (pdf), which attempts to generate potential drugs for diseases like Ebola and multiple sclerosis. The company has invited academic and non-profit researchers from around the country to detail which diseases they’re trying to generate treatments for, so AtomNet can take a shot. The academic labs will receive 72 different drugs that the neural network has found to have the highest probability of interacting with the disease, based on the molecular data it’s seen.

Atomwise’s system only generates potential drugs—the compounds created by the neural network aren’t guaranteed to be safe, and need to go through the same drug trials and safety checks as anything else on the market. The company believes that the speed at which it can generate trial-ready drugs based on previous safe molecular interactions is what sets it apart.

I remember posting that video in here a few months ago. Some lab in California was testing their AIs to drive cars in the game. I wish they’d let them goof around in multiplayer; it would be interesting to mess with one. How would it react if it got attacked, or if a random person hopped in a car and started playing with the radio or other weird stuff?

By Tina Amini 2017/04/21 17:23:46 UTC

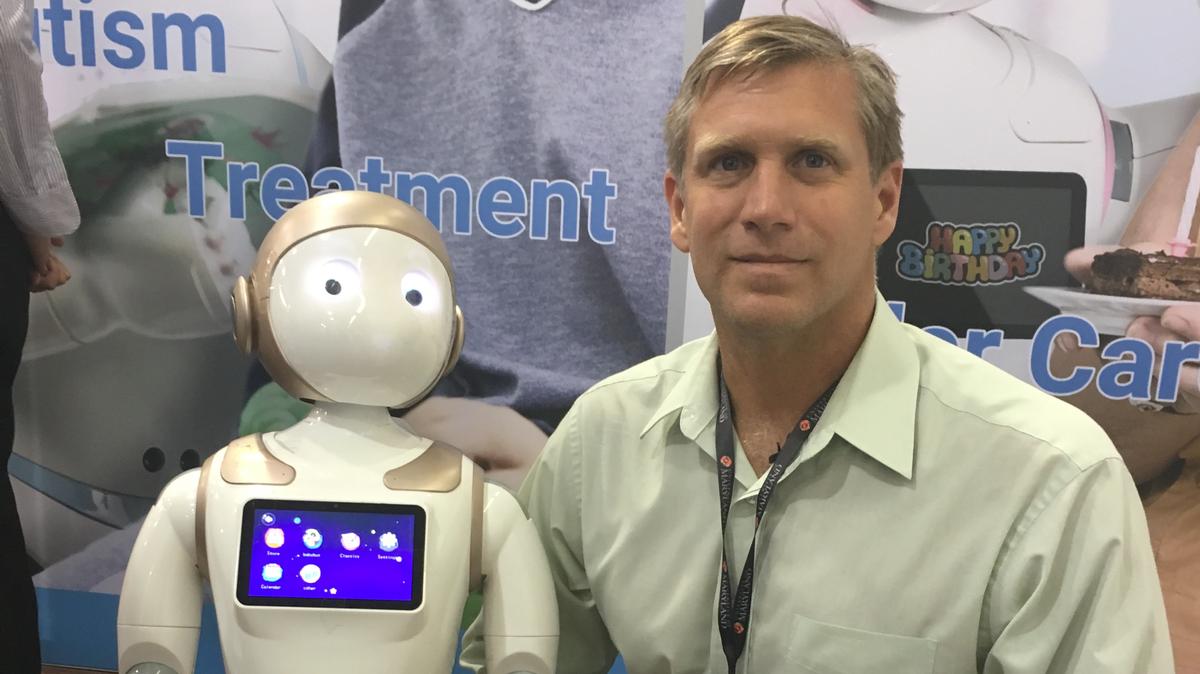

My new Vice Motherboard article on interviewing four humanoid robots: https://motherboard.vice.com/en_us/article/i-talked-to-four-humanoid-robots #transhumanism

“Human relationships can be hard to define.”

Over the last 18 months, I’ve found myself in the strange habit of hanging out with and interviewing English-speaking humanoid robots. I was able to chat with four machines, each of which possessed some level of artificial intelligence. Even though none of them could fully carry on normal conversations, they all had something to say. And sometimes, what they say and how they say it offers a piercing glimpse into the future of humanity.

Three of the robots I talked to were mass-production models: Pepper, Meccanoid, and iPal. The fourth was Han, which was presented by AI expert Dr. Ben Goertzel, chief scientist at Hanson Robotics. The price tags of these bots range from $200 on Amazon to potentially many millions of dollars for something like Han. The production robots are all between three and four feet tall and are mobile. Han is just an upper body, the torso of which rests against whatever he’s placed upon.

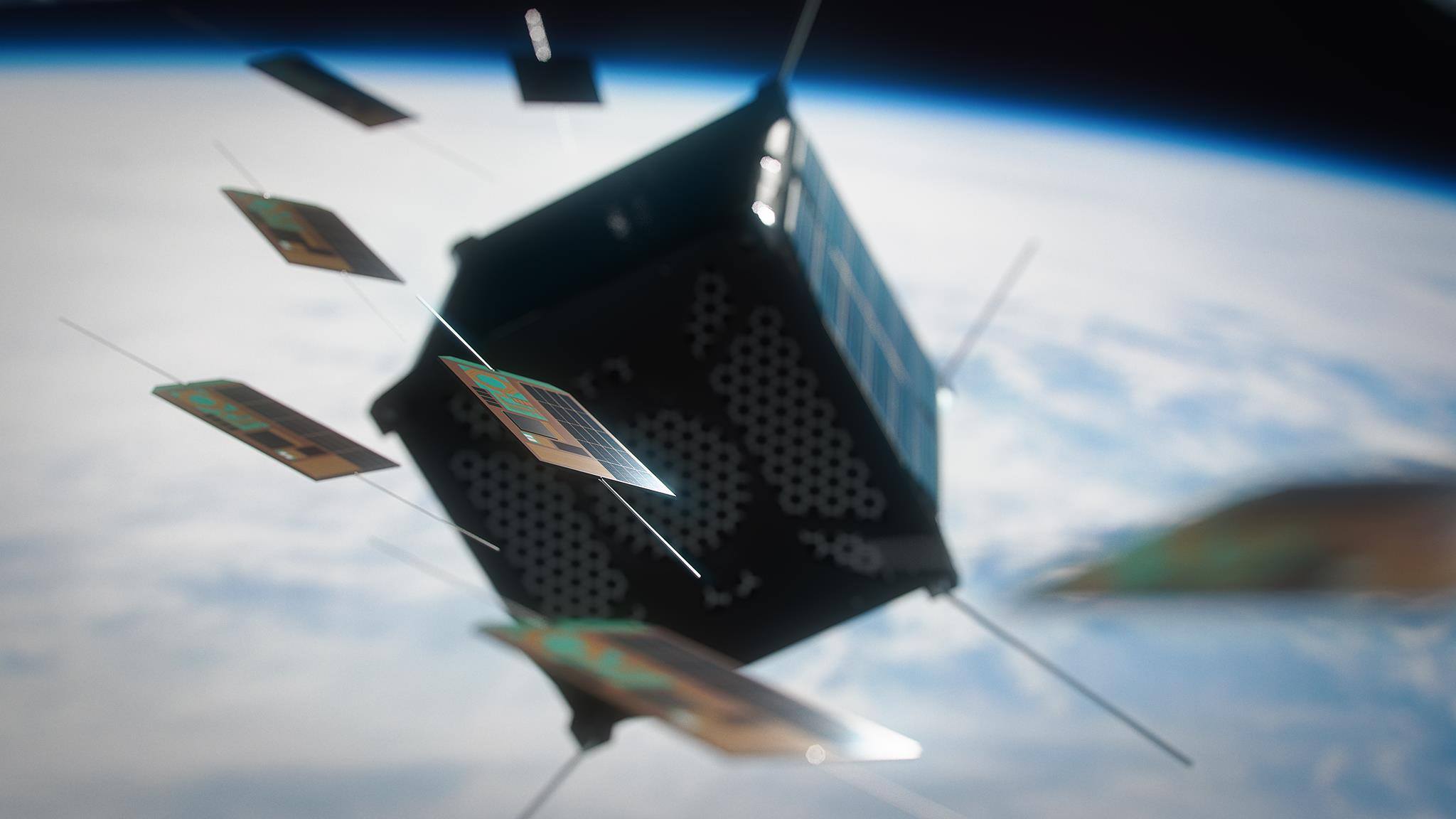

Conceptual artist Efflam Mercier, who has previously created images of an AI interstellar probe, has now created images of ChipSats, a new class of space system that is the size of a fingernail and has a mass of less than 10g (atto-sat class). i4is has started developing a ChipSat in the context of Project Glowworm.