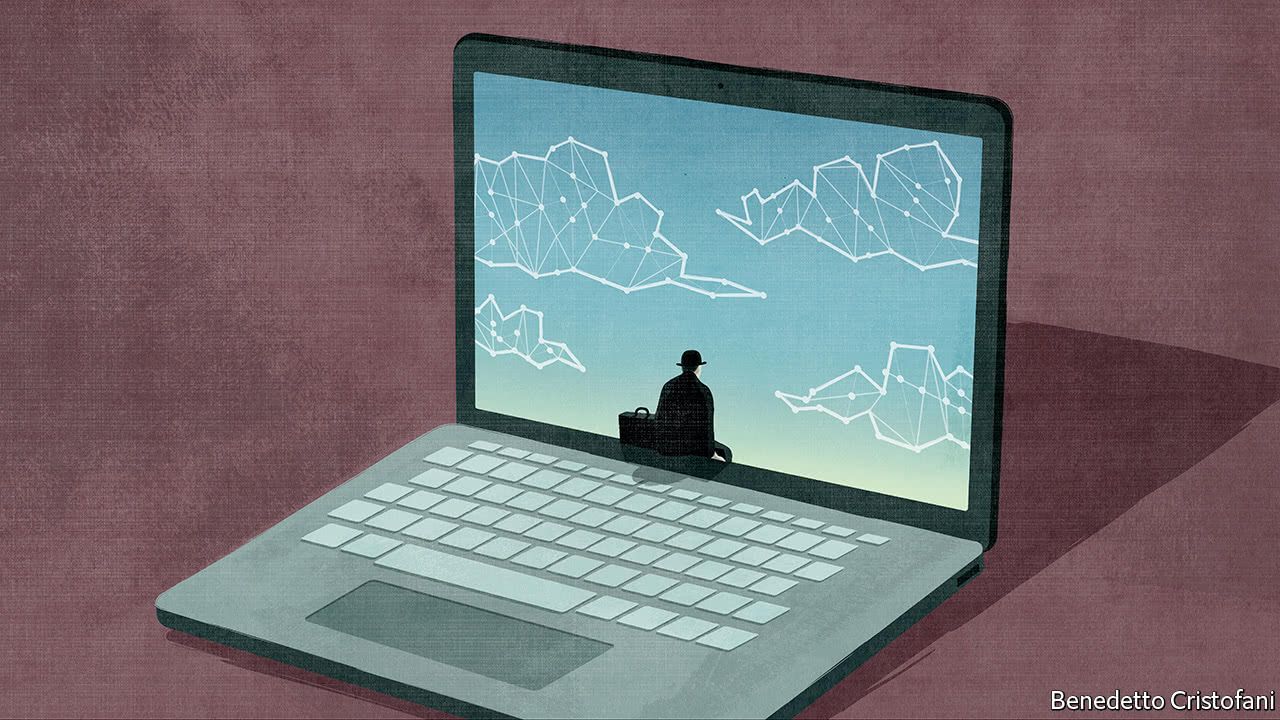

A longer-term concern is the way AI creates a virtuous circle or “flywheel” effect, allowing companies that embrace it to operate more efficiently, generate more data, improve their services, attract more customers and offer lower prices. That sounds like a good thing, but it could also lead to greater corporate concentration and monopoly power, as has already happened in the technology sector.

LIE DETECTORS ARE not widely used in business, but Ping An, a Chinese insurance company, thinks it can spot dishonesty. The company lets customers apply for loans through its app. Prospective borrowers answer questions about their income and repayment plans on video, and the software monitors around 50 tiny facial expressions to determine whether they are telling the truth. The program, enabled by artificial intelligence (AI), helps pinpoint customers who require further scrutiny.

AI will change more than borrowers’ bank balances. Johnson & Johnson, a consumer-goods firm, and Accenture, a consultancy, use AI to sort through job applications and pick the best candidates. AI helps Caesars, a casino and hotel group, guess customers’ likely spending and offer personalised promotions to draw them in. Bloomberg, a media and financial-information firm, uses AI to scan companies’ earnings releases and automatically generate news articles. Vodafone, a mobile operator, can predict problems with its network and with users’ devices before they arise. Companies in every industry use AI to monitor cyber-security threats and other risks, such as disgruntled employees.
