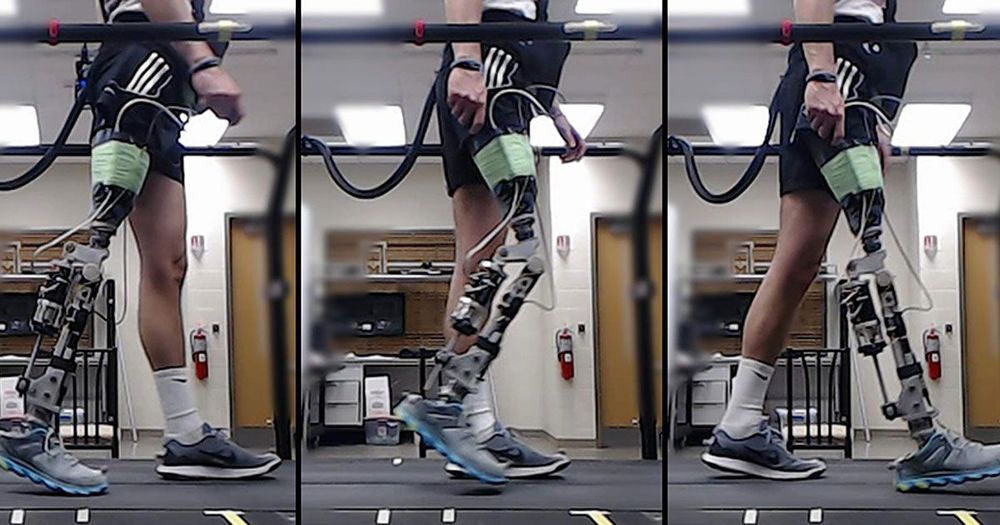

Soft sensors and soft systems are attracting growing attention. Soft robotic systems depend on advances in soft sensing, because soft sensors let a soft robot perceive and act upon its immediate environment, and integrating sensing capabilities into soft robotic systems is becoming increasingly important. One challenge is that most existing soft sensors must be hardwired to power supplies or to external data-processing equipment, which makes them difficult for a system designer to integrate into soft robotic systems. In this article, we design, fabricate, and characterize a new soft sensor that combines radio-frequency identification (RFID) tag design with microfluidic sensor fabrication technologies. The sensor uses the working principle of an RFID transponder antenna, but one whose resonant frequency changes in response to an applied strain. This new microfluidic sensor is intrinsically stretchable and can be reversibly strained. It is a passive, wireless device: it requires no power supply and transmits data without a wired connection. The strain sensor is best understood as an RFID tag antenna; its resonant frequency shifts from approximately 860 MHz to approximately 800 MHz as the applied strain increases from 0% to 50%. Within its operating frequency range, the sensor shows a standoff reading range of 7.5 m at the resonant frequency. We experimentally characterize the electrical performance of the sensor and the reliability of the fabrication process. We demonstrate a pneumatic soft robot that has four microfluidic sensors embedded in four of its legs, and we describe the implementation circuit to show that movement information can be obtained from the soft robot using our wireless soft sensors.
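As a rough illustration of how a reader-side script might turn a measured resonant frequency into a strain estimate, the sketch below interpolates between the two endpoints reported above (approximately 860 MHz at 0% strain and approximately 800 MHz at 50% strain). The linear relationship and the helper name `estimate_strain` are assumptions made for this example only; the abstract reports just the endpoints, so a practical system would rely on a measured calibration curve.

```python
# Endpoints reported in the abstract (approximate values).
F_UNSTRAINED_MHZ = 860.0  # resonant frequency at 0% strain
F_STRAINED_MHZ = 800.0    # resonant frequency at 50% strain
MAX_STRAIN = 0.50         # upper end of the reported strain range


def estimate_strain(resonant_mhz: float) -> float:
    """Hypothetical helper: map a measured resonant frequency to strain.

    ASSUMPTION: frequency falls linearly with strain between the two
    reported endpoints. This is not stated in the source.
    """
    # Frequency drop per unit strain under the linear assumption.
    mhz_per_unit_strain = (F_UNSTRAINED_MHZ - F_STRAINED_MHZ) / MAX_STRAIN
    strain = (F_UNSTRAINED_MHZ - resonant_mhz) / mhz_per_unit_strain
    # Clamp to the characterized range; values outside it are not supported
    # by the reported data.
    return min(max(strain, 0.0), MAX_STRAIN)


if __name__ == "__main__":
    for f_mhz in (860.0, 830.0, 800.0):
        print(f"{f_mhz:.0f} MHz -> {estimate_strain(f_mhz) * 100:.0f}% strain")
```

Clamping keeps the estimate inside the 0% to 50% range that the abstract actually characterizes, rather than extrapolating beyond it.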

Soft Robotics, Ahead of Print

Lijun Teng, Kewen Pan, Markus P. Nemitz, Rui Song, Zhirun Hu, and Adam A. Stokes

Lijun Teng: The School of Engineering, Institute for Integrated Mi…