Unmanned systems are making inroads worldwide, and European agriculture is no exception. GNSS for precision guidance of tractors and harvesters is already in place. More recent innovations include fully driverless and smart systems, while drones remain poised to fly.

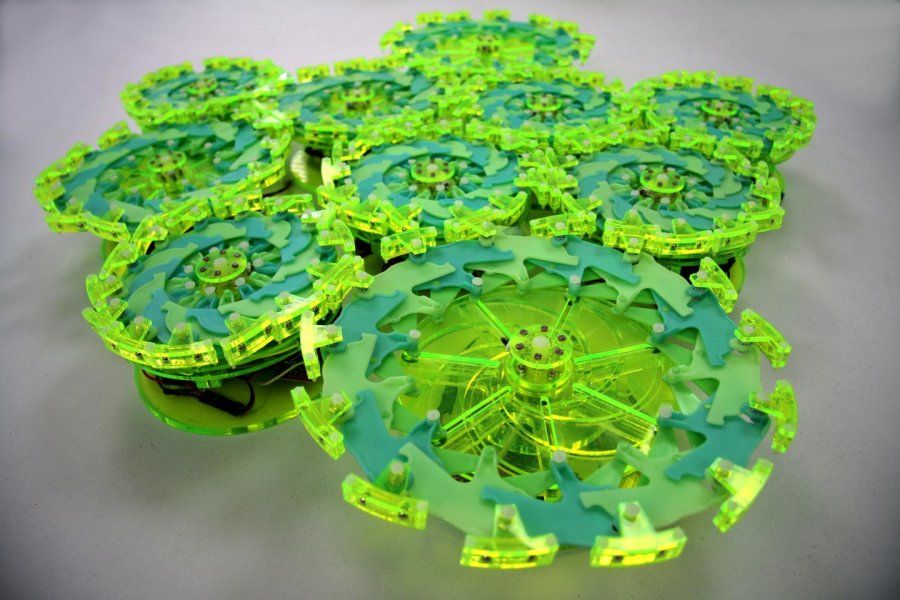

The experience of one Dutch company is instructive. Precision Makers is an up-and-coming manufacturer of automated farm systems. The company delivers two main products. One, a conversion kit called X-Pert, turns existing mowers and tractors into driverless machines. The other is a fully robotized, unmanned vehicle called Greenbot. Both systems enable automated precision operations, but while one has been successful in terms of sales, the other has not.

Precision Makers Business Development Director, Allard Martinet, told Inside Unmanned Systems, “Sales of our X-Pert conversion system have been very good. We started in 2008, first converting the Toro golf course mower, and then we expanded that into solutions for other vehicles. Today, there are more than 150 X-Pert converted vehicles running.”