No safety driver required.

It’s the first delivery system of its kind.

At the Fourth Eurosymposium on Healthy Ageing (EHA), held in Brussels, Belgium, last November, we had the opportunity to meet Dr. Daniel Muñoz-Espín from the Oncology Department of the University of Cambridge.

Dr. Muñoz-Espín received his PhD from the Autonomous University of Madrid, Spain, in the viral DNA replication group at the Centre of Molecular Biology Severo Ochoa, where he worked under the supervision of Dr. Margarita Salas, one of the most famous Spanish scientists. His postdoctoral research resulted in several published papers and a 2013 patent on DNA replication. He then joined the Centro Nacional de Investigaciones Oncológicas (CNIO), the Spanish National Centre for Cancer Research, in the team of Dr. Manuel Serrano, co-author of The Hallmarks of Aging. The research Dr. Muñoz-Espín conducted there demonstrated that cellular senescence plays a role not only in aging and cancer but also in normal embryonic development, where it contributes to shaping our bodies. This process was termed “developmentally programmed senescence”, and the concept was very favorably received by the scientific community.

Dr. Muñoz-Espín is currently Principal Investigator of the Cancer Early Detection Programme at the Department of Oncology, University of Cambridge. With his current team, he developed a novel method of targeting senescent cells, which was reported in EMBO Molecular Medicine. This method was the subject of his talk at EHA2018 and one of the many fascinating topics he discussed in this interview.

Amber Lab researches robot locomotion to develop devices that help people with walking difficulties.

In recent years, Google has designed specialized chips for artificial intelligence. Facebook and Microsoft, which, like most internet companies, are major buyers of Intel chips, have indicated that they are working on similar A.I. chips.

The retailer is now making its own server chips. It’s the latest sign that big internet outfits are willing to cut out longtime suppliers.

By Kate Gill

A.I. robot Sophia is getting a software upgrade, one that will inch her, and perhaps A.I., even closer to humanity. According to her creator, not only will Sophia earn her citizenship in Malta, she will reach a level of advancement equal to human beings in roughly five to 10 years.

“In the long run, I think the broader implications are pretty clear,” Dr. Ben Goertzel, the CEO of SingularityNET and chief scientist at Hanson Robotics, told Cheddar Friday.

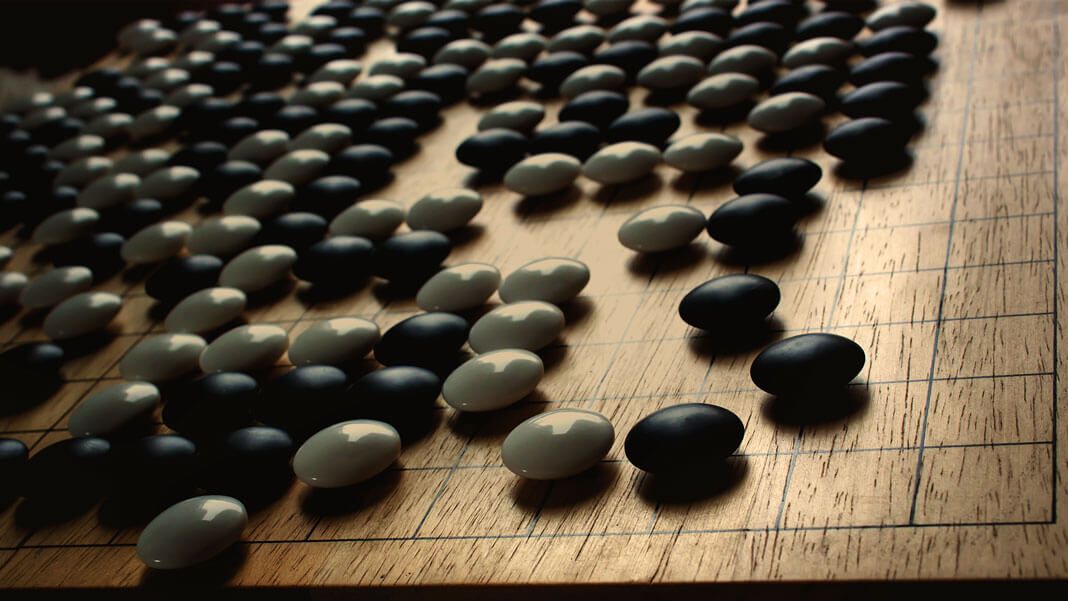

As impressive as all these feats were, game-playing AIs typically exploit the properties of a single game and often rely on hand-crafted knowledge coded into them by developers. But DeepMind’s latest creation, AlphaZero, detailed in a new paper in Science, was built from the bottom up to be game-agnostic.

All it was given was the rules of each game, and it then played itself thousands of times, effectively using trial and error to work out the best tactics for each game. It was then pitted against the most powerful specialized AI for each game, including its predecessor AlphaGo, beating them comprehensively.

“This work has, in effect, closed a multi-decade chapter in AI research. AI researchers need to look to a new generation of games to provide the next set of challenges,” IBM computer scientist Murray Campbell, who has worked on chess-playing computers, wrote in an opinion piece for Science.

Publication: Forbes; story title: “Human 2.0 is coming faster than you think”; deck: “Will you evolve with the times?”; section: Innovation; topic: artificial intelligence + big data; special label: contributor group | Cognitive World; author: Neil Sahota; date: October 1, 2018.

Fu Qiang examines flight simulator cockpit parts at Wright Brothers Science and Technology Development Co., Ltd. in Harbin, northeast China’s Heilongjiang Province, Dec. 14, 2018. If it were not for a shared infatuation with flight simulation, Liu Zhongliang, Fu Qiang and Zhou Zhiyuan, who once had three entirely distinct careers, might never have met, let alone teamed up to pursue an aviation dream. The trio of aviation enthusiasts launched their hardware development team in 2009. From their very first electronic circuit to today’s flight simulator cockpits, a commitment to independent design has run throughout their venture. In 2014, Liu, Fu and Zhou left Zhengzhou in central China and relocated to Harbin. There they were joined by Ge Jun, another aviation enthusiast, and the business entered a fast track when the four registered a company named after the Wright Brothers. The prototype of a 1:1 scale Boeing 737-800 cockpit procedure trainer took shape that same year, and the following year the simulator cockpit entered standardized production. The company’s products have received recognition at various levels. In November 2016, a refined model of their cockpit procedure trainer obtained technical certification from the China Academy of Civil Aviation Science and Technology, one of the country’s top research institutes in the field. Later, another flight simulator cockpit prototype received authorization from Boeing. One of the team’s aspirations is to obtain higher-level technical certifications for their simulator cockpits and to become a viable contributor to China’s jetliner industry. (Xinhua/Wang Song)