Pedro Domingos has devoted his life to learning how computers learn. He says a breakthrough is coming.

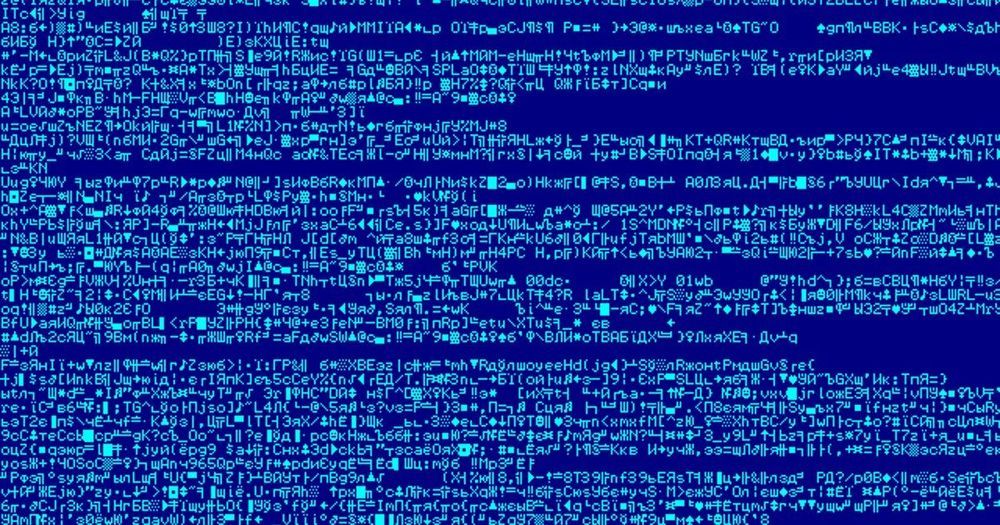

Tissue biopsy slides stained using hematoxylin and eosin (H&E) dyes are a cornerstone of histopathology, especially for pathologists needing to diagnose and determine the stage of cancers. A research team led by MIT scientists at the Media Lab, in collaboration with clinicians at Stanford University School of Medicine and Harvard Medical School, now shows that digital scans of these biopsy slides can be stained computationally, using deep learning algorithms trained on data from physically dyed slides.

Pathologists who examined the computationally stained H&E slide images in a blind study could not tell them apart from traditionally stained slides while using them to accurately identify and grade prostate cancers. What’s more, the slides could also be computationally “de-stained” in a way that resets them to an original state for use in future studies, the researchers conclude in their May 20 study published in JAMA Network Open.

This process of computational digital staining and de-staining preserves small amounts of tissue biopsied from cancer patients and allows researchers and clinicians to analyze slides for multiple kinds of diagnostic and prognostic tests, without needing to extract additional tissue sections.

Circa 2012

Arthur C. Clarke is famous for suggesting that any sufficiently advanced technology would be indistinguishable from magic. There’s no better example of this than the ultra-speculative prospect of “utility fogs”: swarms of networked microscopic robots that could assume the shape and texture of virtually anything.

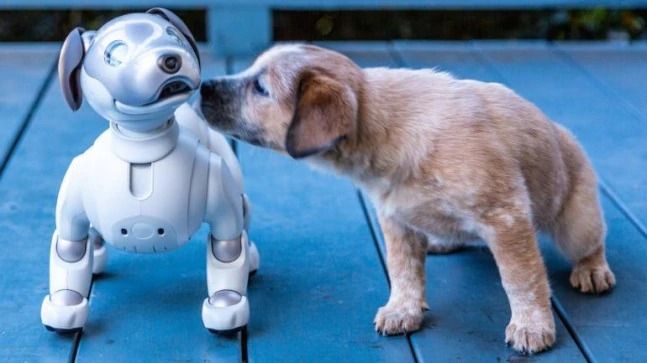

In this article, we explain why selling robots to individual buyers or regular families is not a very good business model, and what strategies are better for robot-making companies to profit.

#technology #robots #AI #innovation #future #business #startups #BusinessModel

https://facebook.com/LongevityFB https://instagram.com/longevityyy https://twitter.com/Longevityyyyy https://linkedin.com/company/longevityy

Please also subscribe and hit the notification bell and click “all” on these YouTube channels:

https://youtube.com/Transhumania

https://youtube.com/BrentNally

https://youtube.com/EternalLifeFan

https://youtube.com/MaxEternalLife

https://youtube.com/LifespanIO

https://youtube.com/LifeXTenShow

https://youtube.com/BitcoinComOfficialChannel

https://youtube.com/RogerVer

https://youtube.com/RichardHeart

https://youtube.com/sciVive

Follow Peter Voss on social media:

https://twitter.com/peterevoss

https://linkedin.com/in/vosspeter

https://facebook.com/petervoss

https://medium.com/@petervoss

Check out projects Peter focuses on:

https://agiinnovations.com

http://optimal.org/voss.html

SHOW NOTES

China’s space program plans to launch a Mars mission in July. The mission will deploy an orbital probe to study the red planet and a robotic, remotely controlled rover for surface exploration. The U.S. has also been planning another robotic rover mission to Mars, set to launch this summer as well, during the window when the relative orbits of Earth and Mars around the Sun make the transit most efficient.

This will be the first rover mission to Mars for China’s space program, and is one of many ways it aims to compete more effectively with NASA’s space exploration efforts. NASA has flown four previous Mars rover missions, and its fifth, with an updated rover called ‘Perseverance,’ is set to launch this year with the goal of reaching Mars sometime in February 2021.

NASA’s mission also includes an ambitious rock sample return plan, which will require the first powered spacecraft launch from the red planet to bring those samples back to Earth. The U.S. space agency is also sending the first atmospheric aerial vehicle to Mars on this mission: a helicopter drone that will make short flights to collect additional data from above the planet’s surface.

U.S. apparel chain Gap is speeding up its rollout of warehouse robots for assembling online orders so it can limit human contact during the coronavirus pandemic, the company told Reuters.

Gap reached a deal early this year to more than triple the number of item-picking robots it uses to 106 by the fall. Then the pandemic struck North America, forcing the company to close all its stores in the region, including those of Banana Republic, Old Navy and other brands. Meanwhile, its warehouses faced more web orders and fewer staff to fulfill them because of social distancing rules Gap had put in place.

“We could not get as many people in our distribution centers safely,” said Kevin Kuntz, Gap’s senior vice president of global logistics fulfillment. So he called up Kindred AI, the vendor that sells the machines, to ask: “Can you get them here earlier?”