Believe it or not, that’s a robot dolphin.

Elon Musk announced that Tesla’s Full Self-Driving beta test will indeed be rolled out tonight. The Full Self-Driving beta will feature improvements from Tesla’s Autopilot rewrite. Musk has described the upcoming FSD beta as “profound,” which has only fueled excitement for its release.

While Musk confirmed the rollout of FSD’s limited beta, he also set some realistic expectations for the electric car community. The CEO stated that the limited beta will be rolled out in an “extremely slow and cautious” manner, likely to maximize safety. This bodes well for Tesla’s eventual public Full Self-Driving rollout, as it suggests that the company is taking a conservative approach to releasing its autonomous driving features.

Earlier this month, the Tesla CEO shared some details about the FSD beta coming out tonight, saying that Tesla’s new FSD build will be “capable of zero-intervention drives.”

NASA’s OSIRIS-REx spacecraft made a historic touchdown on the asteroid Bennu on Tuesday evening, dodging boulders the size of buildings and contacting the surface for several seconds to collect samples before safely backing away.

The meticulous descent took 4.5 hours. At 6.12pm the spacecraft touched down, and its 11-foot robotic arm acted like a pogo stick, bouncing on the asteroid’s surface to collect dirt and dust before the craft launched back into space.

The crucial minutes of the mission began around 5.38pm, when the spacecraft extended its arm and cameras toward the asteroid’s surface. By 6pm, OSIRIS-REx had performed its Matchpoint burn, the key final maneuver, firing its thrusters to match Bennu’s rotation and center itself directly over the landing spot.

Watch this video. You’ll want to remember where you were when the uprising truly began.

Head Image Caption: Street-level view of 3D-reconstructed Chelsea, Manhattan

Historians and nostalgic residents alike are interested in how cities were built and how they developed, and now there’s a tool for exploring exactly that. Google AI recently launched rǝ, an open-source, browser-based toolset designed to let users virtually explore how cities changed between 1800 and 2000 in a three-dimensional view.

Google AI says the name rǝ is pronounced “re-turn” and draws its meaning from “reconstruction, research, recreation and remembering.” The scalable system runs on Google Cloud and Kubernetes and reconstructs cities from historical maps and photos.

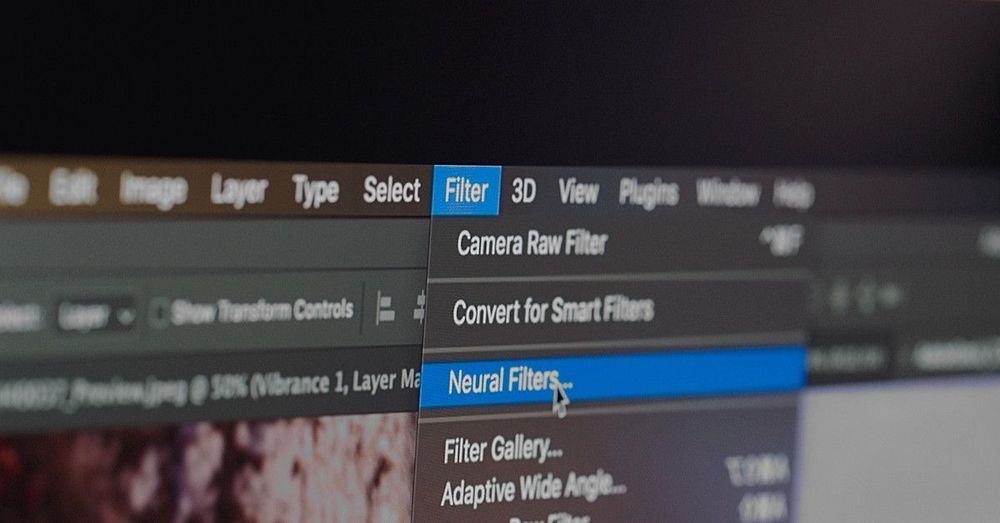

Adobe injects more AI into its tools.

The latest version of Adobe Photoshop comes with new neural filters — AI-powered filters that let you change someone’s age or expression with a few clicks. A whole suite of machine learning neural filters will eventually be available, including ones for colorizing photos, removing defects, and more.

Leading tech companies are increasingly using AI to influence our behaviour. But how persuasive do we find virtual assistants?