Three actions policymakers and business leaders can take today.

New developments in AI could spur a massive democratization of access to services and work opportunities, improving the lives of millions of people around the world and creating new commercial opportunities for businesses. Yet they also raise the specter of new social divides and biases, which could spark public backlash and expose businesses to regulatory risk. For the U.S. and other advanced economies, which are increasingly fractured along income, racial, gender, and regional lines, these questions of equality are taking on a new urgency. Will advances in AI usher in an era of greater inclusiveness, increased fairness, and widening access to healthcare, education, and other public services? Or will they instead lead to new inequalities, new biases, and new exclusions?

Three frontier developments stand out for both their promised rewards and their potential risks to equality: human augmentation, sensory AI, and geographic AI.

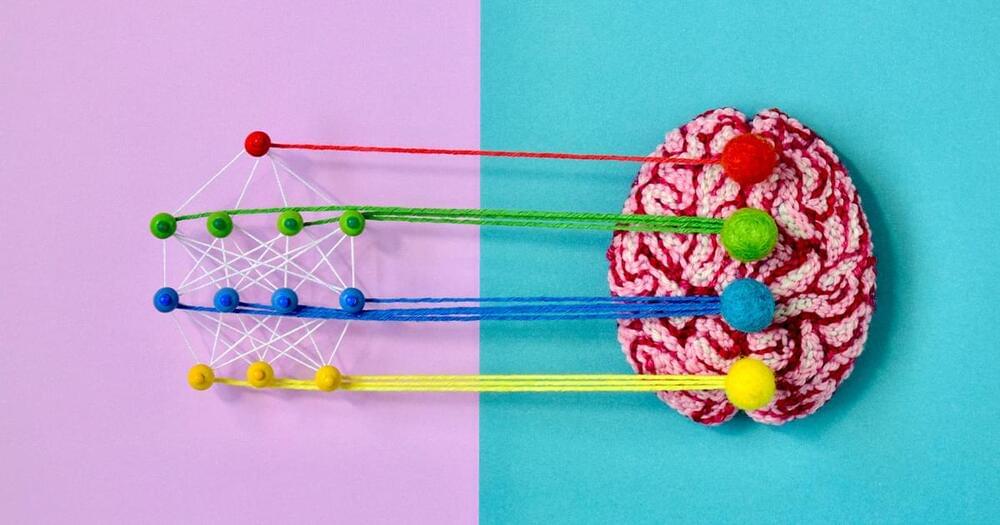

Human Augmentation

Variously described as biohacking or Human 2.0, human augmentation technologies have the potential to enhance human performance for good or ill.