Some argue that only a “Sputnik” moment will wake the American people and government to act with purpose, just as the 1957 Soviet launch of a satellite catalyzed new educational and technological investments. We disagree. We have been struck by the broad, bipartisan consensus in America to “get AI right” now. We are in a rare moment when challenge, urgency, and consensus may just align to generate the energy we need to extend our AI leadership and build a better future.

Congress asked us to serve on a bipartisan commission of tech leaders, scientists, and national security professionals to explore the relationship between artificial intelligence (AI) and national security. Our work is not complete, but our initial assessment is worth sharing now: in the next decade, the United States is in danger of losing its global leadership in AI and its innovation edge. That edge is a foundation of our economic prosperity, our military power, and ultimately the freedoms we enjoy.

As we consider the leadership stakes, we are struck by AI’s potential to propel us towards many imaginable futures. Some hold great promise; others are concerning. If past technological revolutions are a guide, the future will include elements of both.

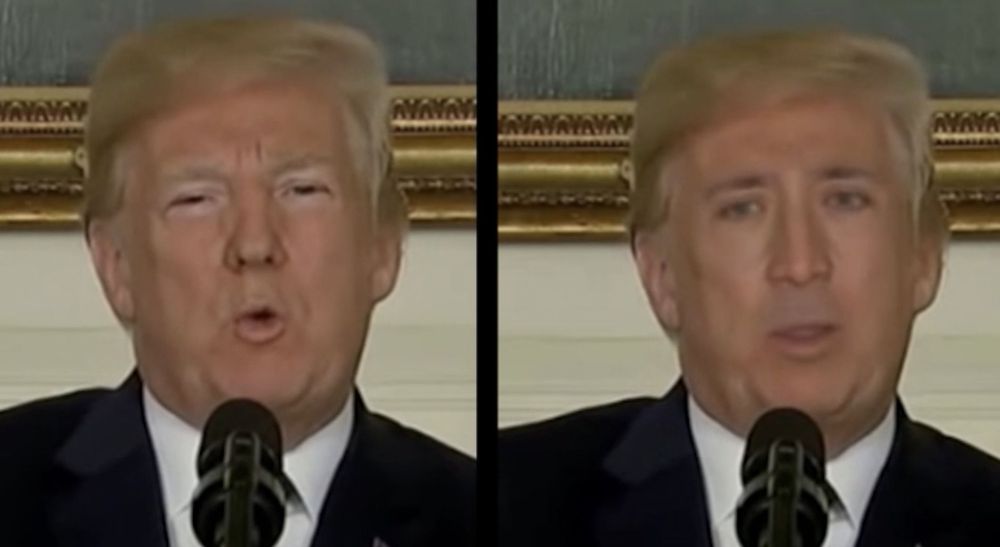

Some of us have dedicated our professional lives to advancing AI for the benefit of humanity. AI technologies have been harnessed for good in sectors ranging from health care to education to transportation. Today’s progress only scratches the surface of AI’s potential. Computing power, large data sets, and new methods have led us to an inflection point where AI and its sub-disciplines (including machine vision, machine learning, natural language understanding, and robotics) will transform the world.