A trio of researchers at Johannes Kepler University has used artificial intelligence to improve thermal imaging searches for people lost in the woods. In their paper published in the journal Nature Machine Intelligence, David Schedl, Indrajit Kurmi and Oliver Bimber describe how they applied a deep learning network to the problem and how well it worked.

When people become lost in forests, search and rescue experts use helicopters to fly over the area where they are most likely to be found. In addition to simply scanning the ground below, the rescuers use binoculars and thermal imaging cameras. The hope is that such cameras will highlight the difference between the body temperature of people on the ground and the temperature of their surroundings, making them easier to spot. Unfortunately, in some instances thermal imaging does not work as intended, either because vegetation covers the ground or because the sun heats the trees to a temperature similar to the body temperature of the lost person. In this new effort, the researchers sought to overcome these problems by using a deep learning application to improve the images that are made.

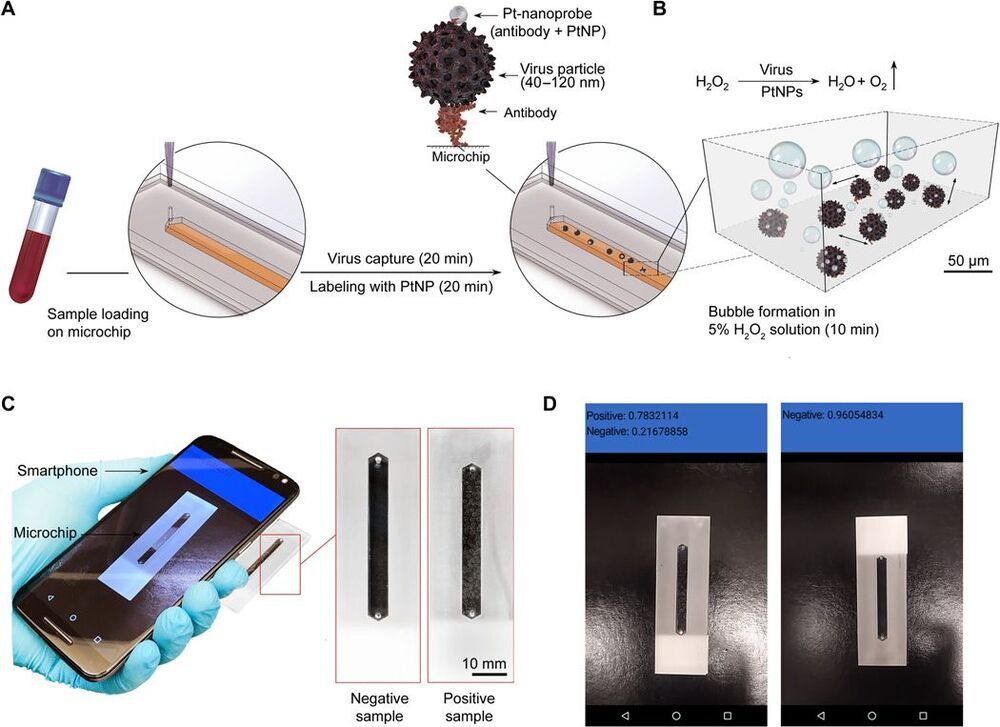

The solution the team developed uses an AI application to process multiple images of a given area. They compare the approach to combining data from multiple radio telescopes, which allows several telescopes to operate as a single large one. In like manner, their application combines multiple thermal images taken from a helicopter (or drone) into one image that looks as if it had been captured by a camera with a much larger lens. The processed images have a much shallower depth of field: the tops of the trees appear blurred, while people on the ground become much more recognizable. To train the AI system, the researchers had to create their own database of images. They used drones to take pictures of volunteers on the ground in a wide variety of positions.
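
The idea of combining many views into one wide-aperture image can be illustrated with a toy sketch. The Python below is not the researchers' pipeline and involves no deep learning; it is a one-dimensional simulation under made-up assumptions. The temperatures, the scene layout, and the helper names (`make_ground`, `render_view`, `integrate`) are all hypothetical. It assumes that views are already registered to the ground, so a person stays at the same pixel in every view while the canopy shifts with camera position (parallax). Averaging the views then smears the canopy and keeps the person.

```python
# Toy 1D illustration of combining several views into one wide-aperture image.
# All temperatures and positions are invented for the example.
GROUND_TEMP = 10.0   # forest floor
PERSON_TEMP = 30.0   # warm body on the ground
CANOPY_TEMP = 20.0   # sun-heated tree crowns


def make_ground(width, person_pixels):
    """Return a scanline of the ground with a person at the given pixels."""
    return [PERSON_TEMP if i in person_pixels else GROUND_TEMP
            for i in range(width)]


def render_view(ground, canopy_pixels, shift):
    """Simulate one thermal scanline, registered to the ground plane.

    From a camera displaced sideways, the canopy appears shifted by `shift`
    pixels relative to the ground, and hides whatever lies beneath it.
    """
    view = list(ground)
    for pos in canopy_pixels:
        p = pos + shift
        if 0 <= p < len(view):
            view[p] = CANOPY_TEMP
    return view


def integrate(views):
    """Average the registered views pixel by pixel."""
    return [sum(column) / len(views) for column in zip(*views)]


person = {9, 10}
canopy = {4, 9, 10, 15}            # crowns directly above the person
ground = make_ground(20, person)

# Seven camera positions along the flight path, parallax shifts -3..3.
views = [render_view(ground, canopy, s) for s in range(-3, 4)]
single = views[3]                  # the view from directly overhead (shift 0)
integral = integrate(views)

print("single view at person pixels:", single[9], single[10])
print("integrated image:", [round(v, 1) for v in integral])
```

In the overhead view the person is completely hidden by canopy, yet in the averaged image the person's pixels are the warmest, because most of the other views see past the crowns. A real system would also have to estimate camera poses and register two-dimensional images, which this sketch skips.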