Clip from Lew Later (7 Things Apple Should Steal From Android…) — https://youtu.be/GgNF1YuOOuc

Nvidia and Harvard develop AI tool that speeds up genome analysis

Researchers affiliated with Nvidia and Harvard today detailed AtacWorks, a machine learning toolkit designed to bring down the cost and time needed for rare and single-cell experiments. In a study published in the journal Nature Communications, the coauthors showed that AtacWorks can run analyses on a whole genome in just half an hour compared with the multiple hours traditional methods take.

Most cells in the body carry around a complete copy of a person’s DNA, with billions of base pairs crammed into the nucleus. But an individual cell pulls out only the subsection of genetic components that it needs to function, with cell types like liver, blood, or skin cells using different genes. The regions of DNA that determine a cell’s function are easily accessible, more or less, while the rest are wound around proteins.

AtacWorks, which is available from Nvidia’s NGC hub of GPU-optimized software, works with ATAC-seq, a method for finding open areas of the genome in cells that was pioneered by Harvard professor Jason Buenrostro, one of the paper’s coauthors. ATAC-seq measures the intensity of a signal at every position on the genome. Peaks in the signal correspond to regions of open DNA. The fewer cells available, the noisier the data appears, making it difficult to identify which areas of the DNA are accessible.
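To make the idea of "peaks in a noisy signal" concrete, here is a minimal sketch of the generic smooth-then-threshold approach to peak calling. It is not AtacWorks' method (the toolkit is a trained machine learning model); the moving average is a simple stand-in, and the function names, the toy `coverage` values, and the threshold are all assumptions chosen for illustration.

```python
from typing import List, Tuple


def smooth(signal: List[float], window: int = 3) -> List[float]:
    """Centered moving average; edges average only the samples that exist."""
    half = window // 2
    out = []
    for i in range(len(signal)):
        lo, hi = max(0, i - half), min(len(signal), i + half + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out


def call_peaks(signal: List[float], threshold: float) -> List[Tuple[int, int]]:
    """Return half-open [start, end) intervals where signal >= threshold."""
    peaks: List[Tuple[int, int]] = []
    start = None
    for i, value in enumerate(signal):
        if value >= threshold and start is None:
            start = i  # a peak region opens here
        elif value < threshold and start is not None:
            peaks.append((start, i))  # the region closed just before i
            start = None
    if start is not None:
        peaks.append((start, len(signal)))  # region runs to the end
    return peaks


# Hypothetical per-position coverage: one real peak plus isolated noise.
coverage = [0, 1, 0, 5, 6, 5, 0, 1, 0, 0]
peaks = call_peaks(smooth(coverage), threshold=3.0)
print(peaks)
```

With fewer cells, the isolated noise values grow relative to the true peak, and a fixed threshold starts to miss real regions or report spurious ones. That is the gap a learned denoiser is meant to close.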

Beauty is in the Brain of the Beholder: AI Generates Personally Attractive Images

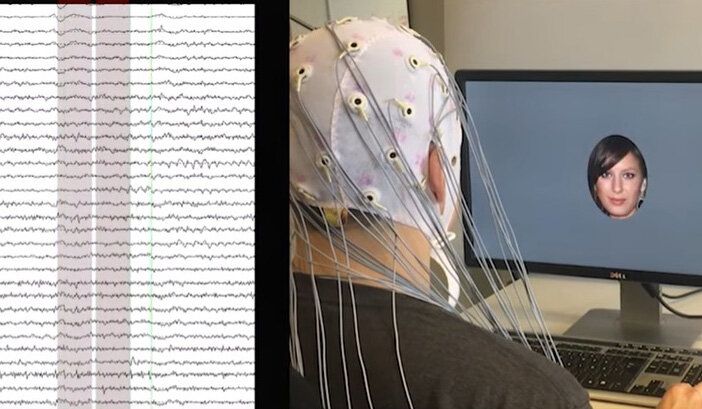

Summary: Combining brain activity data with artificial intelligence, researchers generated faces based upon what individuals considered to be attractive features.

Source: University of Helsinki.

Researchers at the University of Helsinki and University of Copenhagen investigated whether a computer would be able to identify the facial features we consider attractive and, based on this, create new images matching our criteria. The researchers used artificial intelligence to interpret brain signals and combined the resulting brain-computer interface with a generative model of artificial faces. This enabled the computer to create facial images that appealed to individual preferences.

Beauty is in the brain: AI reads brain data, generates personally attractive images

Researchers at the University of California San Diego School of Medicine have shown that they can block inflammation in mice, thereby protecting them from liver disease and hardening of the arteries while increasing their healthy lifespan.

Researchers have succeeded in making an AI understand our subjective notions of what makes faces attractive. The device demonstrated this knowledge by its ability to create new portraits that were tailored to be found personally attractive to individuals. The results can be used, for example, in modeling preferences and decision-making as well as potentially identifying unconscious attitudes.

“In our previous studies, we designed models that could identify and control simple portrait features, such as hair color and emotion. However, people largely agree on who is blond and who smiles. Attractiveness is a more challenging subject of study, as it is associated with cultural and psychological factors that likely play unconscious roles in our individual preferences. Indeed, we often find it very hard to explain what it is exactly that makes something, or someone, beautiful: Beauty is in the eye of the beholder,” says Senior Researcher and Docent Michiel Spapé from the Department of Psychology and Logopedics, University of Helsinki.

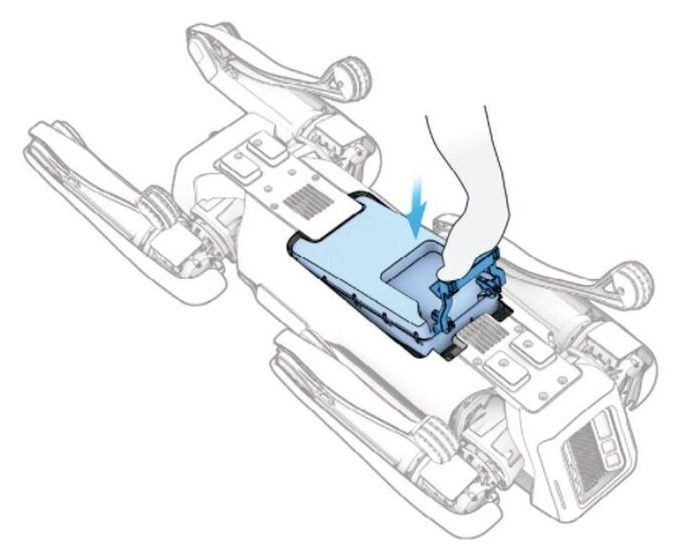

How to Defeat a Boston Dynamics Robot in Mortal Combat

“As several people mention in the replies to LenKusov, shooting or otherwise damaging that hefty lithium battery pack could make it explode—which is either very bad if you’re close-range, or exactly what you want if you’re somehow hitting it from a distance and trying for fireworks.”

It turns out that a flip through Spot’s user manual reveals its weaknesses.

Deep Science: AI adventures in arts and letters

There’s more AI news out there than anyone can possibly keep up with. But you can stay tolerably up to date on the most interesting developments with this column, which collects AI and machine learning advancements from around the world and explains why they might be important to tech, startups or civilization.

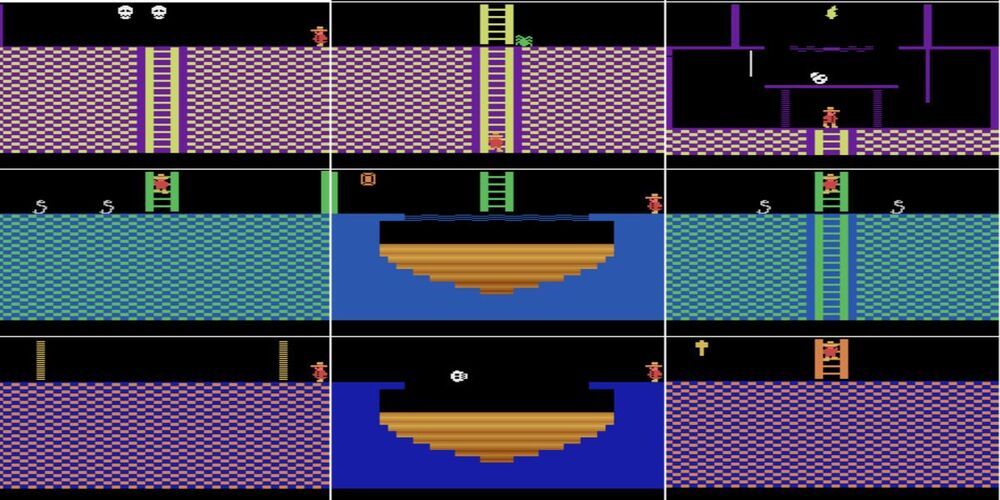

To begin on a lighthearted note: The ways researchers find to apply machine learning to the arts are always interesting — though not always practical. A team from the University of Washington wanted to see if a computer vision system could learn to tell what is being played on a piano just from an overhead view of the keys and the player’s hands.

Audeo, the system trained by Eli Shlizerman, Kun Su and Xiulong Liu, watches video of piano playing and first extracts a piano-roll-like simple sequence of key presses. Then it adds expression in the form of length and strength of the presses, and lastly polishes it up for input into a MIDI synthesizer for output. The results are a little loose but definitely recognizable.
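The middle of that pipeline, turning a piano-roll-like sequence of key presses into notes with lengths, can be sketched in a few lines. This is only an illustration of the data transformation, not Audeo's implementation: the binary frames-by-keys roll, the `Note` tuple, and the `roll_to_notes` helper are hypothetical, and the video analysis and expression modeling are left out entirely.

```python
from typing import List, Tuple

# (key index, start frame, duration in frames) -- a hypothetical note format
Note = Tuple[int, int, int]


def roll_to_notes(roll: List[List[int]]) -> List[Note]:
    """Convert a binary piano roll (frames x keys) into note events."""
    if not roll:
        return []
    notes: List[Note] = []
    for key in range(len(roll[0])):
        start = None
        for t, frame in enumerate(roll):
            if frame[key] and start is None:
                start = t  # key goes down
            elif not frame[key] and start is not None:
                notes.append((key, start, t - start))  # key comes up
                start = None
        if start is not None:
            notes.append((key, start, len(roll) - start))  # held to the end
    # Order by onset time, then by key, as a synthesizer would consume them.
    return sorted(notes, key=lambda n: (n[1], n[0]))


# Toy roll: 4 video frames, 3 keys.
roll = [
    [1, 0, 0],
    [1, 1, 0],
    [0, 1, 0],
    [0, 0, 1],
]
notes = roll_to_notes(roll)
print(notes)
```

Each resulting tuple could then be given a velocity (the "strength" of the press) and written out as MIDI note-on and note-off messages.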

Nanoprinted high-neuron-density optical linear perceptrons perform near-infrared inference on a CMOS chip

Today, machine learning permeates everyday life, with millions of users every day unlocking their phones through facial recognition or passing through AI-enabled automated security checks at airports and train stations. These tasks are possible thanks to sensors that collect optical information and feed it to a neural network in a computer.

Scientists in China have presented a new nanoscale AI optical circuit trained to perform unpowered all-optical inference at the speed of light for enhanced authentication solutions. Combining smart optical devices with imaging sensors, the system performs complex functions easily, achieving a neural density equal to 1/400th that of the human brain and a computational power more than 10 orders of magnitude higher than electronic processors.

Imagine empowering the sensors in everyday devices to perform artificial intelligence functions without a computer, as simply as putting glasses on them. The integrated holographic perceptrons developed by the research team at the University of Shanghai for Science and Technology, led by Professor Min Gu, a foreign member of the Chinese Academy of Engineering, can make that a reality. In the future, the system’s neural density is expected to be 10 times that of the human brain.

Online Public Offering

“We’re looking at Flippy as a tool that helps us increase speed of service and frees team members up to focus more on other areas we want to concentrate on, whether that’s order accuracy or how we’re handling delivery partner drivers and getting them what they need when they come through the door,” said White Castle’s Vice President, Jamie Richardson.

Flippy is the world’s first autonomous robotic kitchen assistant that can learn from its surroundings and acquire new skills over time.