“Don’t knock it until you try it” should be for tasting new foods, not building robots capable of ending human life.

78% of respondents said they were comfortable interacting with AI for business-related queries.

Artificial intelligence has come of age. Customers in the country seem to prefer getting responses from a machine to interacting with humans. The reason, according to a survey, is that answers given by an AI system are backed by the voluminous data it crunches to deliver the right responses.

A study commissioned by Pegasystems, a Nasdaq-listed digital transformation solutions company, has found that 60 per cent of people in the country are more likely to tell the truth to an AI system or chatbot as compared to a human, Suman Reddy, Managing Director of Pega India, told BusinessLine.

Boston Dynamics event on Feb. 2, 2021.

We’re excited to reveal the latest in Spot’s expanded product line. Join us live on Tuesday, February 2nd @ 11 am EST, to hear how these products will extend Spot’s value for autonomous inspection and data collection.

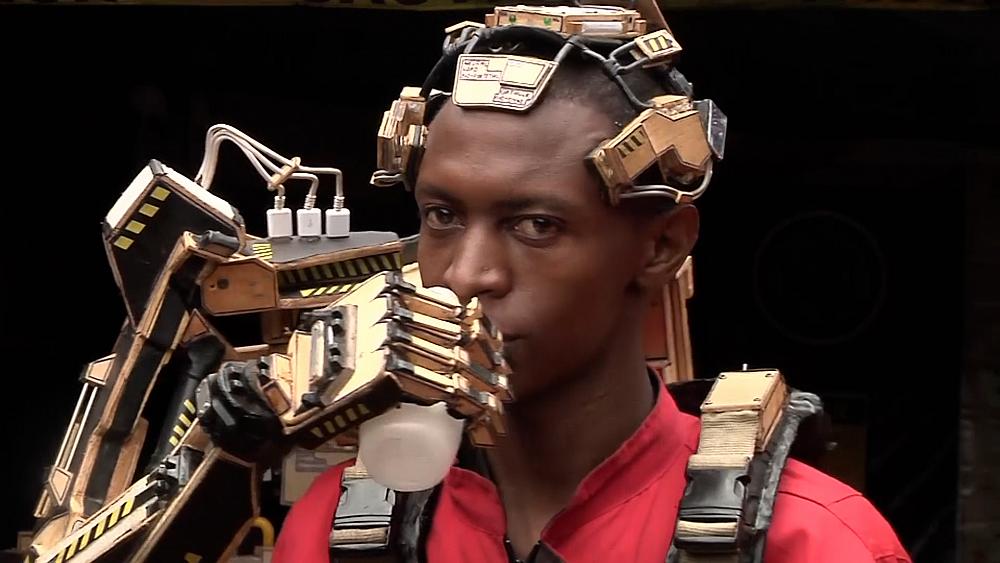

The arm was invented by David Gathu and Moses Kinyua and is powered by brain signals.

The signals are converted into an electric current by a “NeuroNode” biopotential headset receiver. This electrical current is then driven into the robot’s circuitry, which gives the arm its mobility.

The arm is built from several materials, including recycled wood, and moves vertically and horizontally.

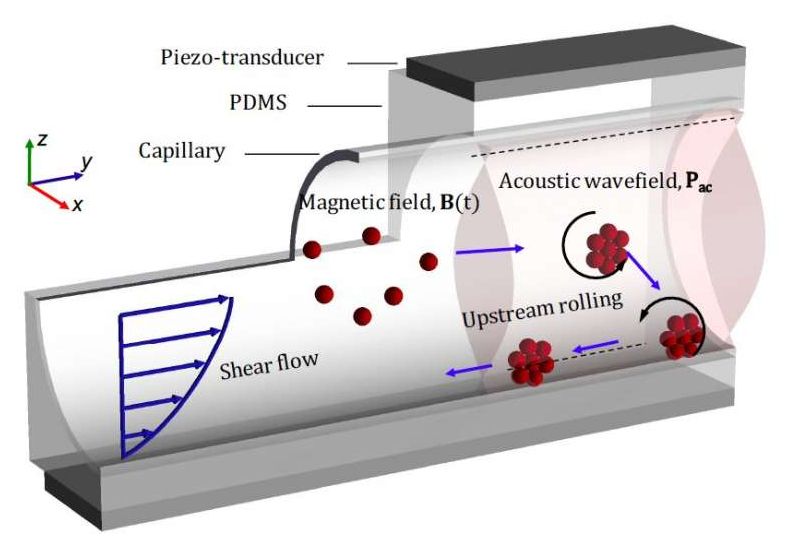

Micro-sized robots could bring a new wave of innovation in the medical field by allowing doctors to access specific regions inside the human body without the need for highly invasive procedures. Among other things, these tiny robots could be used to carry drugs, genes or other substances to specific sites inside the body, opening up new possibilities for treating different medical conditions.

Researchers at ETH Zurich and the Helmholtz Institute Erlangen–Nürnberg for Renewable Energy have recently developed micro- and nano-sized robots inspired by biological micro-swimmers (e.g., bacteria or spermatozoa). These small robots, presented in a paper published in Nature Machine Intelligence, are capable of upstream motility, which means that they can autonomously move against the direction in which a fluid (e.g., blood) flows. This makes them particularly promising for interventions inside the human body.

“We believe that the ideas discussed in our multidisciplinary study can transform many aspects of medicine by enabling tasks such as targeted and precise delivery of drugs or genes, as well as facilitating non-invasive surgeries,” Daniel Ahmed, lead author of the recent paper, told TechXplore.