Microsoft unveiled new tools for automatically generating computer code and formulas on Tuesday morning, in a new adaptation of the GPT-3 natural-language technology more commonly known for replicating human language.

DeepMind co-founder and CEO Demis Hassabis discusses how we can avoid bias being built into AI systems and what’s next for DeepMind, including the future of protein folding, at WIRED Live 2020.

“If we build it right, AI systems could be less biased than we are.”

ABOUT WIRED LIVE

WIRED Live is the inspirational festival bringing the WIRED brand to life. Hear top-level talks from a curated smorgasbord of scientists, artists, innovators, disruptors and influencers. As we settle into a post-COVID world, WIRED Live will retain the rare combination of WIRED’s journalistic eye, diverse programme, and connections with innovators, designers, strategists and entrepreneurs, while being designed to reach our community remotely, around the world.

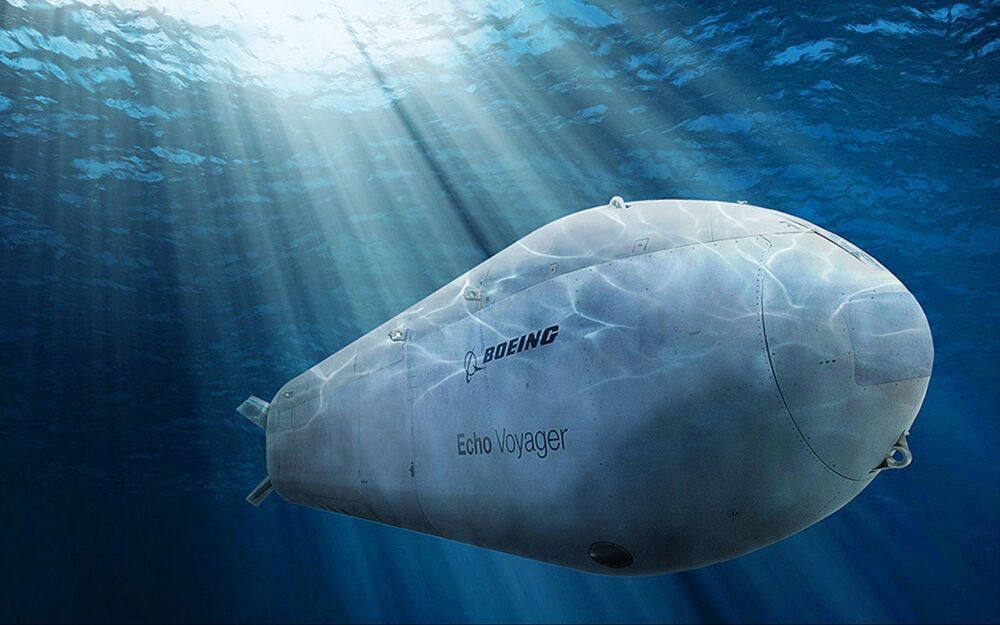

The 50-ton Voyager was developed by Boeing’s Phantom Works division, which is devoted to advanced new technologies, succeeding a series of smaller Echo Seeker and Echo Ranger UUVs. The 15.5-meter-long Echo Voyager has a range of nearly 7500 miles. It has also been deployed at sea for up to three months in a test, and theoretically could last as long as six months.

Voyager can reportedly also dive as deep as 3350 meters, while few military submarines are (officially) certified for dives below 500 meters.

And it isn’t the only robot submarine in the works.

Here’s What You Need to Know: The U.S. Navy has an ambitious vision for future warfare.

At a military parade celebrating its 70th anniversary, the People’s Republic of China unveiled, among many other exotic weapons, two HSU-001 submarines, the world’s first large-diameter autonomous submarines to enter military service.

You’re on the PRO Robotics channel, and this is a new issue of High Tech News: the latest news from Mars, the first flight of Elon Musk’s Starship around the Earth, artificial muscles, a desktop bioprinter, and why IBM is teaching artificial intelligence to code. All the most interesting technology news in one issue!

Watch the video to the end and write in the comments which news interested you most.

Time Codes:

0:00 In this video.

0:22 News from Mars.

2:08 A system that recognizes letters imagined in the brain with 94% accuracy.

2:47 SpaceX has scheduled a test orbital flight of Starship.

3:28 Japanese billionaire Yusaku Maezawa to go to the ISS in December.

3:55 Voyager 1.

4:27 OSIRIS-REx probe.

4:50 China has launched the “Tianhe” core module into space.

5:25 Successful tests of the “Nauka” module.

6:00 IBM creates datasets to teach artificial intelligence programming.

6:45 Elon Musk promises to open access to Tesla’s FSD (Full Self-Driving) on a subscription basis in June.

7:08 Honda and AutoX report first 100 days of fully autonomous AutoX robot cabs.

7:25 Baidu.

7:41 Robot to untangle hair.

8:10 SoftBank sold Boston Dynamics, but continues to fund robot startups.

8:35 Boston University developers have created a robotic gripper capable of picking up even a single grain of sand.

9:06 U.S. Air Force unveils robot for washing F-16 Viper aircraft.

9:35 E Ink.

10:07 Artificial muscle fibers.

10:40 Gravity Industries jetpacks.

GEYLANG, Singapore — Have you made any plans for the 22nd century yet? A new study finds you might want to think about it because it’s possible for humans to live to see their 150th birthday!

Scientists in Singapore have developed an iPhone app that accurately estimates biological aging. Their work suggests that human life expectancy could be almost double the current norm. The findings are based on blood samples from hundreds of thousands of people in the United States and United Kingdom.

The instrument, called DOSI, uses artificial intelligence to work out body resilience, the ability to recover from injury or disease. DOSI, which stands for dynamic organism state indicator, takes into account age, illnesses, and lifestyles to make its estimates.

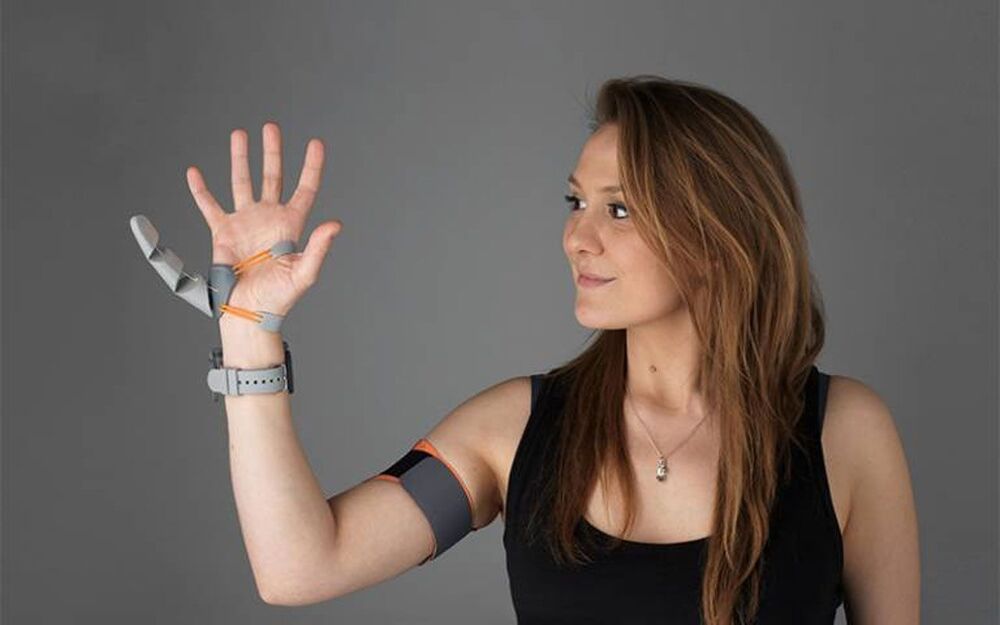

UCL researchers have created a strange robotic “third thumb” that attaches to the hand and adds a large extra digit on the opposite side of the hand from the thumb. Researchers found that using the robotic thumb can impact how the hand is represented in the brain. For the research, scientists trained people to use an extra robotic thumb and found they could effectively carry out dexterous tasks such as building a tower of blocks using a single hand with two thumbs.

Researchers said that participants trained to use the extra thumb increasingly felt like it was part of their body. Initially, the Third Thumb was part of a project seeking to reframe the way people view prosthetics from replacing a lost function to becoming an extension of the human body. UCL Professor Tamar Makin says body augmentation is a growing field aimed at extending the physical abilities of humans.

Anyone who spends a lot of time in the kitchen knows that there’s at least one gadget out there for every single step in the cooking process. But there has never been an appliance that could handle them all. Until now, that is.

Later this year, London-based robotics company Moley will begin selling the first robot chef, according to the Financial Times. The company claims the ceiling-mounted device, called the Moley Robotics Kitchen, will be able to cook over 5000 recipes and even clean up after itself when it’s done.