With artificial intelligence and painstaking study of sperm whales, scientists hope to understand what these aliens of the deep are talking about.


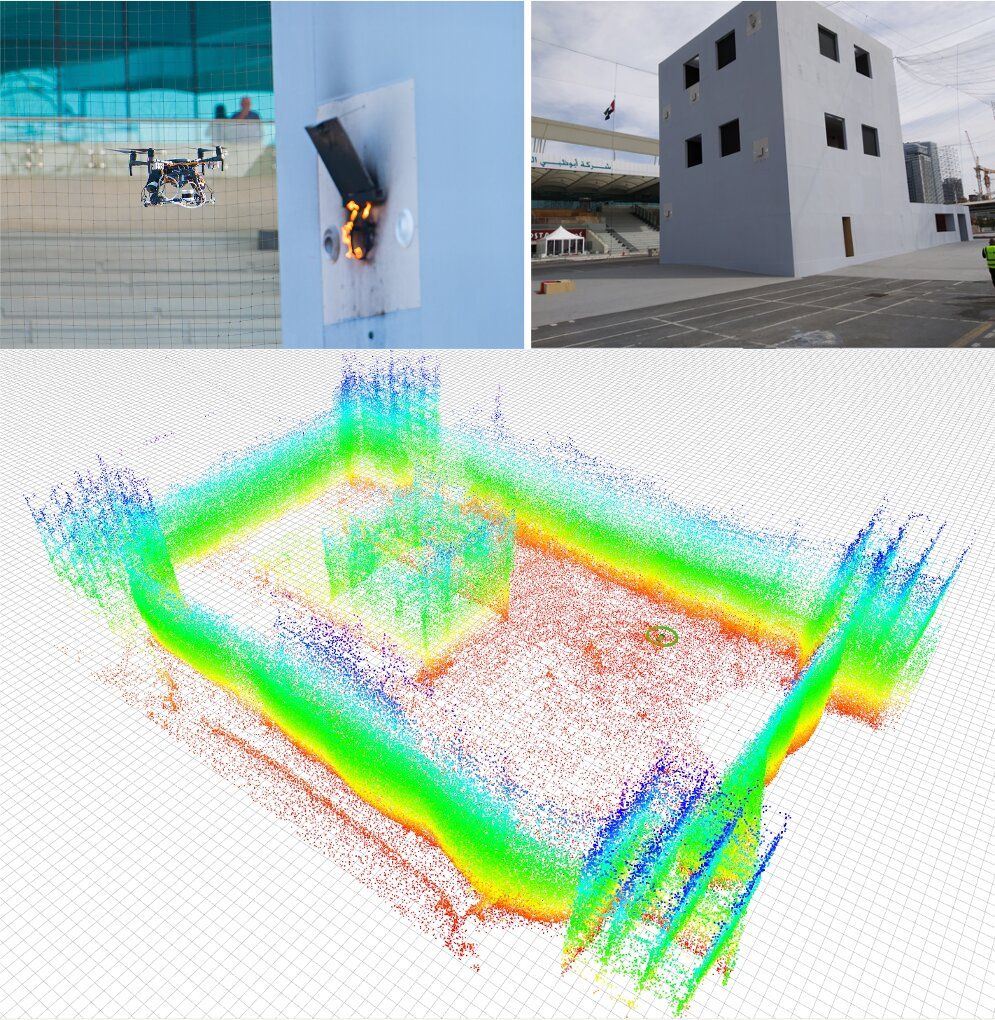

DLL: A map-based localization framework for aerial robots

To enable the efficient operation of unmanned aerial vehicles (UAVs) where a global positioning system (GPS) or an external positioning device (e.g., a laser reflector) is unavailable, researchers must develop techniques that automatically estimate a robot’s pose. If the environment in which a drone operates does not change very often and one is able to build a 3D map of it, map-based robot localization techniques can be fairly effective.

Ideally, map-based pose estimation approaches should be efficient, robust and reliable, since they must quickly give a robot the information it needs to plan its next actions and movements. 3D light detection and ranging (LIDAR) sensors are particularly promising for map-based localization, as they gather a rich pool of 3D information that drones can then use to localize themselves.

Researchers at Universidad Pablo de Olavide in Spain have recently developed a new framework for map-based localization called direct LIDAR localization (DLL). This approach, presented in a paper pre-published on arXiv, could overcome some of the limitations of other LIDAR localization techniques introduced in the past.
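The article does not spell out how DLL works internally. As a rough illustration only, the Python sketch below shows one common way to do direct map-based localization: precompute a distance field from the map once, then refine the pose by minimizing the map distances at which the transformed scan points land, with no feature extraction in between. It assumes a 2D toy occupancy grid, an initial pose guess (as odometry might supply), and a Gauss-Newton solver with a numerical Jacobian. The function names are hypothetical, and this is not the DLL authors' code, which works with 3D LIDAR data.

```python
import numpy as np


def distance_grid(occupied: np.ndarray, resolution: float) -> np.ndarray:
    """Distance (metres) from every cell to the nearest occupied cell.

    Brute force over occupied cells: fine for a toy map, too slow for a real one.
    """
    h, w = occupied.shape
    gx, gy = np.meshgrid(np.arange(w), np.arange(h))  # gx[j, i] == i, gy[j, i] == j
    dist = np.full((h, w), np.inf)
    for j, i in zip(*np.nonzero(occupied)):
        dist = np.minimum(dist, np.hypot(gx - i, gy - j))
    return dist * resolution


def lookup(dist: np.ndarray, resolution: float, pts: np.ndarray) -> np.ndarray:
    """Bilinearly interpolate the distance grid at metric (x, y) points of shape (N, 2)."""
    h, w = dist.shape
    u = np.clip(pts[:, 0] / resolution, 0.0, w - 1 - 1e-9)  # column coordinate
    v = np.clip(pts[:, 1] / resolution, 0.0, h - 1 - 1e-9)  # row coordinate
    i0, j0 = np.floor(u).astype(int), np.floor(v).astype(int)
    fu, fv = u - i0, v - j0
    return (dist[j0, i0] * (1 - fu) * (1 - fv) + dist[j0, i0 + 1] * fu * (1 - fv)
            + dist[j0 + 1, i0] * (1 - fu) * fv + dist[j0 + 1, i0 + 1] * fu * fv)


def transform(pose: np.ndarray, scan: np.ndarray) -> np.ndarray:
    """Map sensor-frame points into the map frame for a pose (x, y, theta)."""
    x, y, th = pose
    c, s = np.cos(th), np.sin(th)
    return scan @ np.array([[c, s], [-s, c]]) + np.array([x, y])  # rows: R @ p + t


def localize(dist, resolution, scan, init, iters=20, eps=1e-5):
    """Gauss-Newton on residuals r_i = D(T(pose) p_i) with a forward-difference Jacobian."""
    pose = np.asarray(init, dtype=float)
    for _ in range(iters):
        r = lookup(dist, resolution, transform(pose, scan))
        jac = np.empty((len(r), 3))
        for k in range(3):
            dp = np.zeros(3)
            dp[k] = eps
            jac[:, k] = (lookup(dist, resolution, transform(pose + dp, scan)) - r) / eps
        step = np.linalg.lstsq(jac, -r, rcond=None)[0]
        pose = pose + step
        if np.linalg.norm(step) < 1e-8:
            break
    return pose


# Toy map: a 10 m x 8 m rectangular room drawn into a 0.1 m occupancy grid.
res = 0.1
occ = np.zeros((101, 121), dtype=bool)
occ[10, 10:111] = occ[90, 10:111] = True  # walls at y = 1 m and y = 9 m
occ[10:91, 10] = occ[10:91, 110] = True   # walls at x = 1 m and x = 11 m
dist = distance_grid(occ, res)

# Simulated scan: wall points expressed in the sensor frame of a known true pose.
xs, ys = np.arange(1.0, 11.001, 0.25), np.arange(1.0, 9.001, 0.25)
world = np.vstack([
    np.column_stack([xs, np.full_like(xs, 1.0)]), np.column_stack([xs, np.full_like(xs, 9.0)]),
    np.column_stack([np.full_like(ys, 1.0), ys]), np.column_stack([np.full_like(ys, 11.0), ys]),
])
true_pose = np.array([6.0, 5.0, 0.1])
c, s = np.cos(true_pose[2]), np.sin(true_pose[2])
scan = (world - true_pose[:2]) @ np.array([[c, -s], [s, c]])  # rows: R^T @ (p - t)

# Start from a perturbed guess, as odometry might provide, and refine it.
est = localize(dist, res, scan, init=[6.2, 4.85, 0.05])
print("estimated pose (x, y, theta):", est)
```

Because the distance field is computed once, each solver iteration costs only a few grid lookups per scan point; that is the main appeal of this design over searching for point correspondences at every step.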

CRISPR, AI & Brain-Machine Interface: The Future Is Faster Than You Think, Peter Diamandis

The only bad thing about Star Trek was that they made the Borg evil.

Emerging technologies have unprecedented potential to solve some of the world’s most pressing issues. Among the most powerful — and controversial — is the gene-editing tech, CRISPR-Cas9, which will improve agricultural yields, cure genetic disorders, and eradicate infectious diseases like malaria. But CRISPR and other disruptive technologies, like brain-machine interfaces and artificial intelligence, also pose complex philosophical and ethical questions. Perhaps no one is better acquainted with these questions than Peter Diamandis, founder of the XPRIZE Foundation and co-founder of Singularity University and Human Longevity Inc. In this session, Peter will give a state of the union on the near future and explore the profound ethical implications we will face in the ongoing technological revolution.

This talk was recorded at Summit LA19.

Interested in attending Summit events? Apply to attend: https://summit.co/apply.


Post-Human Species

Great episode from a great channel and creator. Though I’m sure almost everyone here is familiar with the channel in question, it’s still worth pointing out, and worth subscribing to and supporting, even if only one or two people who haven’t heard of it get the chance to do so!

Get a free month of Curiosity Stream: https://curiositystream.com/isaacarthur.

As Humanity moves into the future, traveling to other worlds and exploring genetics, AI, transhumanism, and cybernetics, we may begin to diverge into a thousand post-human species.

Visit our Website: http://www.isaacarthur.net.

Support us on Patreon: https://www.patreon.com/IsaacArthur.

Facebook Group: https://www.facebook.com/groups/1583992725237264/

Reddit: https://www.reddit.com/r/IsaacArthur/

Twitter: https://twitter.com/Isaac_A_Arthur (follow us and RT our future content).

SFIA Discord Server: https://discord.gg/53GAShE

Listen to or download the audio of this episode from SoundCloud. Audio-only version: https://soundcloud.com/isaac-arthur-148927746/post-human-species.

Narration-only version: https://soundcloud.com/isaac-arthur-148927746/post-human-species-narration-only.


Cosmism: Russia’s religion for the rocket age

This brave new world seeks to meld space and cyber-space. For both Immortalists and Transhumanists, the human personality lies in the brain, which can live eternally if “uploaded” onto a computer, a favoured theme of science fiction writers. The company Neuralink aims to provide brain-machine interfaces which merge human consciousness and artificial intelligence – helping humans “stay relevant” in a world dominated by AI.

On 28 December 1903, during a particularly harsh Russian winter, a pauper died of pneumonia on a trunk he had rented in a room full of destitute strangers. Nikolai Fyodorov died in obscurity, and he remains almost unknown in the West, yet in life he was celebrated by Leo Tolstoy and Fyodor Dostoevsky, and by a devoted group of disciples – one of whom is credited with winning the Space Race for the Soviet Union.

Now, just as he prophesied, Fyodorov is living a strange afterlife. He has become an icon for transhumanists worldwide and a spiritual guide for interplanetary exploration.

Fyodorov’s poverty came by religious choice rather than material necessity. He was the illegitimate child of Prince Pavel Gagarin, and spent his early childhood on the family’s country estate, until the sudden death of both his father and grandfather, Prince Ivan Gagarin. While Fyodorov’s family had no connection to the first cosmonaut, Anastasia Gacheva of the Fyodorov Museum-Library in Moscow says there is “an important symbolic coincidence – between the Gagarin who foresaw spaceflight in a philosophical way, and Yuri Gagarin who became the world’s first cosmonaut”.

Now for AI’s Latest Trick: Writing Computer Code

Advances in machine learning have made it possible to automate a growing array of coding tasks, from auto-completing segments of code and fine-tuning algorithms…

Programs such as GPT-3 can compose convincing text. Some people are using the tool to automate software development and hunt for bugs.

Making Sense Podcast Special Episode: Engineering the Apocalypse

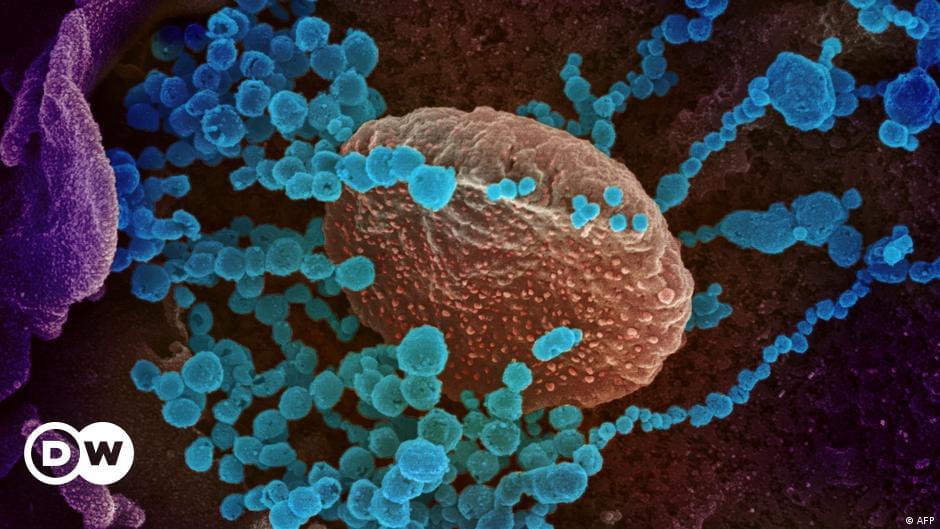

In this nearly 4-hour SPECIAL EPISODE, Rob Reid delivers a 100-minute monologue (broken up into 4 segments, and interleaved with discussions with Sam) about the looming danger of a man-made pandemic caused by an artificially modified pathogen. The risk of this occurring is far higher and nearer-term than almost anyone realizes.

Rob explains the science and motivations that could produce such a catastrophe and explores the steps that society must start taking today to prevent it. These measures are concrete, affordable, and scientifically fascinating—and almost all of them are applicable to future, natural pandemics as well. So if we take most of them, the odds of a future Covid-like outbreak would plummet—a priceless collateral benefit.

Rob Reid is a podcaster, author, and tech investor, and was a long-time tech entrepreneur. His After On podcast features conversations with world-class thinkers, founders, and scientists on topics including synthetic biology, super-AI risk, Fermi’s paradox, robotics, archaeology, and lone-wolf terrorism. Science fiction novels that Rob has written for Random House include The New York Times bestseller Year Zero, and the AI thriller After On. As an investor, Rob is Managing Director at Resilience Reserve, a multi-phase venture capital fund. He co-founded Resilience with Chris Anderson, who runs the TED Conference and has a long track record as both an entrepreneur and an investor. In his own entrepreneurial career, Rob founded and ran Listen.com, the company that created the Rhapsody music service. Earlier, Rob studied Arabic and geopolitics at both undergraduate and graduate levels at Stanford, and was a Fulbright Fellow in Cairo. You can find him at www.after-on.

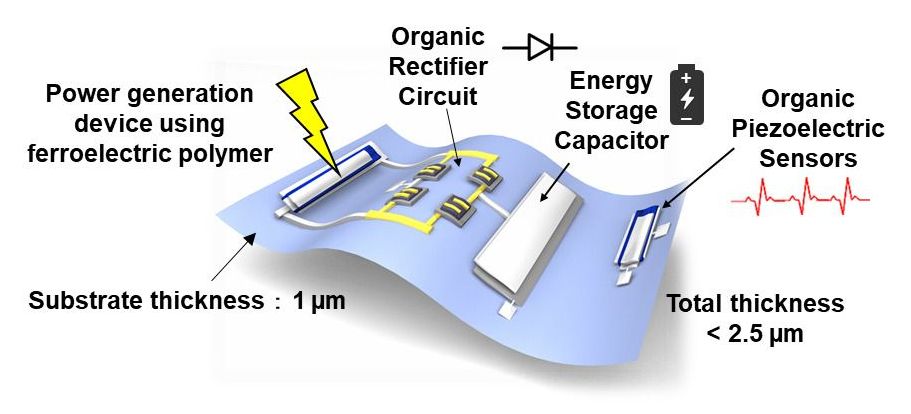

Researchers develop ultrathin, self-powered e-health patches that can monitor a user’s pulse and blood pressure

Scientists at Osaka University, in cooperation with Joanneum Research (Weiz, Austria), have developed wireless health monitoring patches that use embedded piezoelectric nanogenerators to power themselves with harvested biomechanical energy. This work may lead to new autonomous health sensors as well as battery-free wearable electronic devices.

As wearable technology and smart sensors become increasingly popular, the problem of powering all of these devices becomes more pressing. While the energy requirements of each component may be modest, the need for wires or even batteries becomes burdensome and inconvenient. That is why new energy-harvesting methods are needed. In addition, the ability of integrated health monitors to use ambient motion both to power and to activate their sensors will help accelerate their adoption in doctors’ offices.

Now, an international team of researchers from Japan and Austria has invented new ultraflexible patches with a ferroelectric polymer that can not only sense a patient’s pulse and blood pressure but also power themselves from normal movements. The key was starting with a substrate just one micron thick. Using a strong electric field, the researchers aligned the ferroelectric crystalline domains in a copolymer so that the sample had a large electric dipole moment. Thanks to the piezoelectric effect, which efficiently converts natural motion into small electric voltages, the device responds rapidly to changes in strain or pressure. These voltages can be used either as signals for the medical sensors or to harvest energy directly. “Our e-health patches may be employed as part of screening for lifestyle-related diseases such as heart disorders, signs of stress, and sleep apnea,” first author Andreas Petritz says.
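To make the piezoelectric step more concrete, here is an idealized back-of-the-envelope model in Python. It assumes the textbook thickness-mode relation V = g33 · σ · t (voltage coefficient times applied stress times film thickness) and treats the film as a parallel-plate capacitor storing E = ½CV². The class name and every numeric value are hypothetical placeholders, not figures from the Osaka University and Joanneum Research work, and the model ignores the substrate, the electrodes, and any readout or harvesting circuit.

```python
from dataclasses import dataclass

EPS0 = 8.854e-12  # vacuum permittivity in F/m


@dataclass
class PiezoFilm:
    """Idealized piezoelectric film; the values used below are hypothetical placeholders."""
    g33: float               # piezoelectric voltage coefficient, V*m/N (magnitude only)
    rel_permittivity: float  # relative permittivity of the film, dimensionless
    thickness: float         # film thickness, m
    area: float              # electrode area, m^2

    def open_circuit_voltage(self, stress: float) -> float:
        """Thickness-mode estimate V = g33 * stress * t, with stress in Pa."""
        return self.g33 * stress * self.thickness

    def capacitance(self) -> float:
        """Parallel-plate approximation C = eps0 * eps_r * A / t."""
        return EPS0 * self.rel_permittivity * self.area / self.thickness

    def stored_energy(self, stress: float) -> float:
        """Energy held on the film's own capacitance at that voltage, E = C * V^2 / 2."""
        v = self.open_circuit_voltage(stress)
        return 0.5 * self.capacitance() * v * v


# Hypothetical example: a 2 um film with 1 cm^2 of electrode under 1 kPa of pressure.
film = PiezoFilm(g33=0.3, rel_permittivity=10.0, thickness=2e-6, area=1e-4)
print(f"V = {film.open_circuit_voltage(1e3):.2e} V, E = {film.stored_energy(1e3):.2e} J")
```

Because V grows with t while C shrinks with t, both the open-circuit voltage and the stored energy scale linearly with film thickness in this idealized model.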