AI, networked sensors, and heads-up displays.

Autonomous weapons present some unique challenges to regulation. They can’t be observed and quantified in quite the same way as, say, a 1.5-megaton nuclear warhead. Just what constitutes autonomy, and how much of it should be allowed? How do you distinguish an adversary’s remotely piloted drone from one equipped with Terminator software? Unless security analysts can find satisfactory answers to these questions and China, Russia, and the US can decide on mutually agreeable limits, the march of automation will continue. And whichever way the major powers lead, the rest of the world will inevitably follow.

Military scholars warn of a “battlefield singularity,” a point at which humans can no longer keep up with the pace of conflict.

The human brain operates on roughly 20 watts of power (a third of a 60-watt light bulb) in a space the size of, well, a human head. The biggest machine learning algorithms use closer to a nuclear power plant’s worth of electricity and racks of chips to learn.

That’s not to slander machine learning, but nature may have a tip or two to improve the situation. Luckily, there’s a branch of computer chip design heeding that call. By mimicking the brain, super-efficient neuromorphic chips aim to take AI off the cloud and put it in your pocket.

The latest such chip is smaller than a piece of confetti and has tens of thousands of artificial synapses made out of memristors—chip components that can mimic their natural counterparts in the brain.

Deepfakes have struck a nerve with the public and researchers alike. There is something uniquely disturbing about these AI-generated images of people appearing to say or do something they didn’t.

With tools for making deepfakes now widely available and relatively easy to use, many also worry that they will be used to spread dangerous misinformation. Politicians can have other people’s words put into their mouths or be made to appear in situations they never took part in, for example.

That’s the fear, at least. The truth is that, to the human eye, deepfakes are still relatively easy to spot. And according to an October 2019 report from cybersecurity firm DeepTrace Labs, still the most comprehensive to date, they have not been used in any disinformation campaign. Yet the same report also found that the number of deepfakes posted online was growing quickly, with around 15,000 appearing in the previous seven months. That number will be far larger now.

Duke University researchers have developed an AI tool that can turn blurry, unrecognizable pictures of people’s faces into eerily convincing computer-generated portraits, in finer detail than ever before.

Previous methods can scale an image of a face up to eight times its original resolution. But the Duke team has come up with a way to take a handful of pixels and create realistic-looking faces with up to 64 times the resolution, ‘imagining’ features such as fine lines, eyelashes and stubble that weren’t there in the first place.

“Never have super-resolution images been created at this resolution before with this much detail,” said Duke computer scientist Cynthia Rudin, who led the team.
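To put the 8x and 64x figures in perspective, here is a rough back-of-the-envelope sketch. It assumes the factors refer to the scale-up along each side of the image (the item does not say), and it uses a hypothetical 16x16-pixel input purely for illustration. Under those assumptions, it shows how few of the output pixels correspond one-to-one with a pixel in the blurry original.

```python
def upscaled_size(width, height, factor):
    """Output dimensions when each side of the image grows by `factor`."""
    return width * factor, height * factor


def synthesized_fraction(factor):
    """Share of output pixels with no one-to-one source pixel.

    Scaling each side by `factor` multiplies the pixel count by factor**2,
    so only 1 / factor**2 of the output pixels map back to an input pixel.
    """
    return 1.0 - 1.0 / (factor * factor)


if __name__ == "__main__":
    # Hypothetical 16x16 input; 8x and 64x are the factors quoted above.
    for factor in (8, 64):
        w, h = upscaled_size(16, 16, factor)
        share = synthesized_fraction(factor)
        print(f"{factor}x: {w}x{h} pixels, {share:.4%} newly generated")
```

Read this way, at 64x nearly every pixel in the final portrait is generated rather than recovered, which fits the description of the tool as "imagining" details that were not in the original.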

After years of hype, many people feel AI has failed to deliver, says Tim Cross.

Technology Quarterly Jun 11th 2020 edition

Our newest water-seeking rover just booked a ride to the Moon’s South Pole.

Pittsburgh-based Astrobotic has been selected to deliver VIPER to the Moon in 2023 in preparation for future #Artemis missions to bring humanity to the lunar surface: https://go.nasa.gov/2YsxZFw

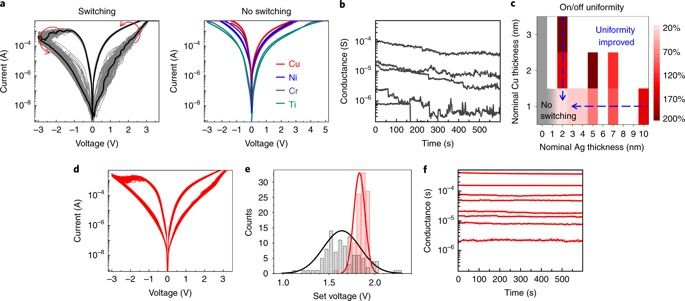

A memristor [1] has been proposed as an artificial synapse for emerging neuromorphic computing applications [2,3]. To train a neural network in memristor arrays, changes in weight values in the form of device conductance should be distinct and uniform [3]. An electrochemical metallization (ECM) memory [4,5], typically based on silicon (Si), has demonstrated a good analogue switching capability [6,7] owing to the high mobility of metal ions in the Si switching medium [8]. However, the large stochasticity of the ion movement results in switching variability. Here we demonstrate a Si memristor with alloyed conduction channels that shows a stable and controllable device operation, which enables the large-scale implementation of crossbar arrays. The conduction channel is formed by conventional silver (Ag) as a primary mobile metal alloyed with silicidable copper (Cu) that stabilizes switching. In an optimal alloying ratio, Cu effectively regulates the Ag movement, which contributes to a substantial improvement in the spatial/temporal switching uniformity, a stable data retention over a large conductance range and a substantially enhanced programmed symmetry in analogue conductance states. This alloyed memristor allows the fabrication of large-scale crossbar arrays that feature a high device yield and accurate analogue programming capability. Thus, our discovery of an alloyed memristor is a key step paving the way beyond von Neumann computing.
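For readers wondering why uniform conductance matters, the following is a minimal sketch of how a crossbar array is commonly understood to compute. Each device's conductance acts as a weight, input voltages drive the rows, and the current collected on each column is the weighted sum (Ohm's law per device, currents adding along the column). The conductance values, the voltages, and the Gaussian noise model below are illustrative assumptions, not figures or methods from the abstract.

```python
import random


def crossbar_output_currents(conductances, voltages):
    """Column currents of an idealized crossbar: I_j = sum_i V_i * G[i][j]."""
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("need exactly one input voltage per row")
    return [
        sum(voltages[i] * conductances[i][j] for i in range(n_rows))
        for j in range(n_cols)
    ]


def program_with_variability(target, rel_sigma, rng):
    """Toy model of switching variability (an assumption, not the paper's).

    Each programmed conductance lands at its target value times
    (1 + Gaussian noise with standard deviation rel_sigma).
    """
    return [[g * (1.0 + rng.gauss(0.0, rel_sigma)) for g in row] for row in target]


def max_relative_error(ideal, actual):
    """Largest relative deviation of any column current from its ideal value."""
    return max(abs(a - b) / abs(b) for a, b in zip(actual, ideal))


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical 3x2 array: conductances in siemens, voltages in volts.
    target = [[1e-6, 2e-6], [3e-6, 4e-6], [5e-6, 6e-6]]
    voltages = [0.1, 0.2, 0.3]
    ideal = crossbar_output_currents(target, voltages)
    for rel_sigma in (0.01, 0.20):
        noisy = program_with_variability(target, rel_sigma, rng)
        actual = crossbar_output_currents(noisy, voltages)
        err = max_relative_error(ideal, actual)
        print(f"relative sigma {rel_sigma:.2f}: max output error {err:.2%}")
```

In this toy picture, any spread in the programmed conductances shows up directly as error in the computed sums, which is one way to see why the abstract puts so much weight on switching uniformity.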