This Robot Is The Future Of Warehouse Automation!! 🤖 😵

How do you teach a robot dog new tricks? Spot’s API makes it simple to create new behaviors, such as this machine-learning-inspired game of fetch. Read the blog to see how it works. https://bit.ly/34GAJTe

The U.S. Navy successfully conducted its first-ever aerial refueling between a manned aircraft and an unmanned tanker. The tanker was flown remotely from a ground control station.

The Illinois-based mission lasted about four and a half hours and validated that an unmanned tanker could successfully use the Navy’s standard probe-and-drogue aerial refueling method.

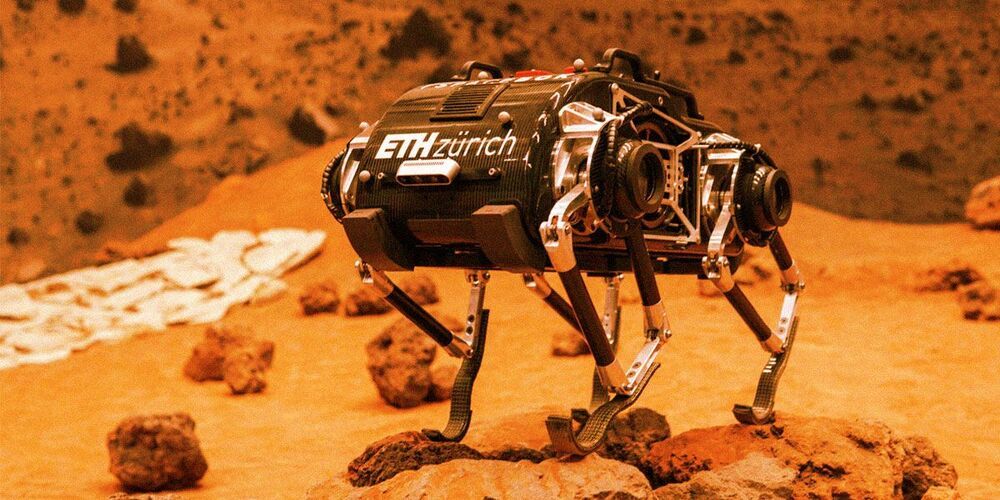

Engineers from ETH Zurich in Switzerland and the Max Planck Institute in Germany built a small quadrupedal robot meant to leap around on the surface of the Moon, much like the Apollo astronauts did half a century ago.

Now SpaceBok, named after the bounding springbok antelope, is getting a Mars upgrade, Wired reports. On the Red Planet, it will have to cope with much stronger gravity than on the Moon (roughly 2.3 times as strong) and face more treacherous terrain.

The concept is a strong one. If such a robot ever lands on Mars, it could explore terrain that has so far been off limits to wheeled rovers, and perhaps even the planet’s mysterious caves.

Last week, I wrote an analysis of “Reward Is Enough,” a paper by scientists at DeepMind. As the title suggests, the researchers hypothesize that the right reward is all you need to create the abilities associated with intelligence, such as perception, motor functions, and language.

This is in contrast with AI systems that try to replicate specific functions of natural intelligence such as classifying images, navigating physical environments, or completing sentences.

The researchers go as far as to suggest that with a well-defined reward, a complex environment, and the right reinforcement learning algorithm, we will be able to reach artificial general intelligence: the kind of problem-solving and cognitive abilities found in humans and, to a lesser degree, in animals.
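To make the reward-driven loop concrete, here is a toy illustration of an agent that learns behavior purely from a scalar reward. It is a minimal sketch, not anything the DeepMind paper prescribes: it uses tabular Q-learning, one classic reinforcement learning algorithm, and the corridor environment, the reward of 1 at the goal, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are all hypothetical choices for illustration.

```python
import random

# Hypothetical toy environment (not from the paper): a corridor of N_STATES cells.
# The agent starts in cell 0; reaching the last cell yields reward 1 and ends the episode.
N_STATES = 6
ACTIONS = (-1, +1)  # move left, move right


def step(state, action):
    """Apply an action; return (next_state, reward, done). Walls clamp movement."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True
    return next_state, 0.0, False


def best_action(q, state, rng):
    """Greedy action for a state, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning: the only learning signal is the scalar reward."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit current estimates, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = best_action(q, state, rng)
            next_state, reward, done = step(state, action)
            # Bootstrap from the best next action unless the episode has ended.
            if done:
                target = reward
            else:
                target = reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q


def greedy_policy(q):
    """Preferred action in each non-terminal cell according to the learned Q-values."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]


if __name__ == "__main__":
    q = train()
    print(greedy_policy(q))
```

Nothing in the code tells the agent to "move right"; that behavior emerges only because it leads to reward. The paper's hypothesis is that the same principle, scaled up to far richer environments and algorithms, could yield much more complex abilities.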

On Monday, the EU’s two data protection bodies called for an outright ban on using artificial intelligence to identify people in public places, citing the “extremely high” risks to privacy.

In a non-binding opinion, the two bodies called for a “general ban” on the practice that would include “recognition of faces, gait, fingerprints, DNA, voice, keystrokes and other biometric or behavioural signals, in any context”.

Such practices “interfere with fundamental rights and freedoms to such an extent that they may call into question the essence of these rights and freedoms,” the heads of the European Data Protection Board and the European Data Protection Supervisor said.

Tesla has unveiled a new supercomputer that, according to the company, is already roughly the fifth most powerful in the world. It is a predecessor to Tesla’s upcoming Dojo supercomputer.

It is being used to train the neural nets powering Tesla’s Autopilot and upcoming self-driving AI.

Over the last few years, Tesla has had a clear focus on computing power both inside and outside its vehicles.