Video from PRO ROBOTS. 😃

-robot avatars.

-robots being tested for use on other planets.

-helmets that monitor the brain…

✅ Instagram: https://www.instagram.com/pro_robots.

Generational shifts in the workforce are creating a loss of operational expertise. Veteran workers with years of institutional knowledge are retiring, replaced by younger employees fresh out of school, taught on technologies and concepts that don’t match the reality of many organizations’ workflows and systems. This dilemma is fueling the need for automated knowledge sharing and intelligence-rich applications that can close the skills gap.

Industrial organizations are accumulating massive volumes of data but deriving business value from only a small slice of it. Transient repositories like data lakes often become opaque and unstructured data swamps. Organizations are switching their focus from mass data accumulation to strategic industrial data management, homing in on data integration, mobility, and accessibility—with the goal of using AI-enabled technologies to unlock value hidden in these unoptimized and underutilized sets of industrial data. The rise of the digital executive (chief technology officer, chief data officer, and chief information officer) as a driver of industrial digital transformation has been a key influence on this trend.

According to recent Occupational Safety and Health Administration data, workers at Amazon fulfillment centers were seriously injured about twice as often as employees in other warehouses. To improve workplace safety, Amazon has been increasing its investment in robotic helpers to reduce injuries among its employees. With access granted for the first time ever, “Sunday Morning” correspondent David Pogue visited the company’s secret technology facility near Seattle to observe some of the most advanced warehouse robots yet developed, and to experience how high-tech tools are being used to aid human workers.

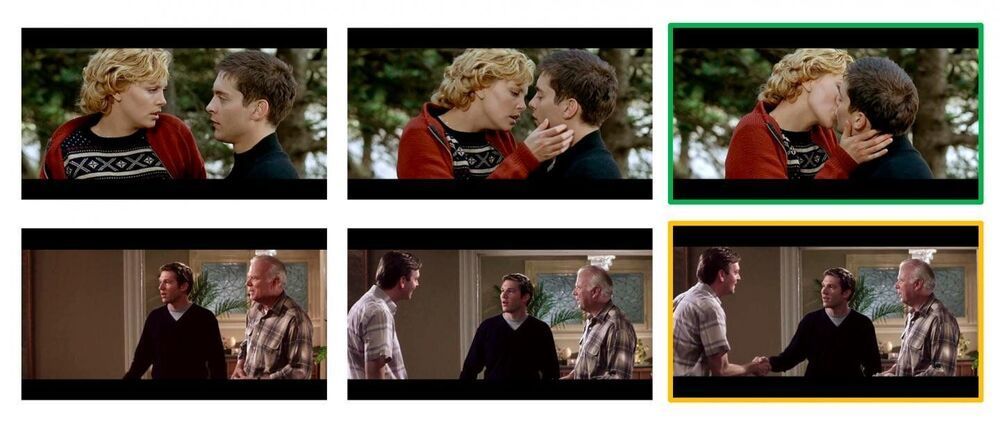

An outstanding idea: for one, there is a video, TV show, or movie showing every conceivable action a human can do; for another, the AI could watch all of them at super high speed.

Predicting what someone is about to do next based on their body language comes naturally to humans but not so for computers. When we meet another person, they might greet us with a hello, handshake, or even a fist bump. We may not know which gesture will be used, but we can read the situation and respond appropriately.

In a new study, Columbia Engineering researchers unveil a computer vision technique for giving machines a more intuitive sense for what will happen next by leveraging higher-level associations between people, animals, and objects.

“Our algorithm is a step toward machines being able to make better predictions about human behavior, and thus better coordinate their actions with ours,” said Carl Vondrick, assistant professor of computer science at Columbia, who directed the study, which was presented at the International Conference on Computer Vision and Pattern Recognition on June 24, 2021. “Our results open a number of possibilities for human-robot collaboration, autonomous vehicles, and assistive technology.”

Part of the problem mirrors the rise of automation in any other industry — performers told Input that they’re nervous that game studios might try to replace them with sophisticated algorithms in order to save a few bucks. But the game modder’s decision also raises questions about the agency that performers have over their own voices, as well as the artistry involved in bringing characters to life.

“If this is true, this is just heartbreaking,” video game voice actor Jay Britton tweeted about the mod. “Yes, AI might be able to replace things but should it? We literally get to decide. Replacing actors with AI is not only a legal minefield but an utterly soulless choice.”

“Why not remove all human creativity from games and use AI…” he added.

Sanofi will apply Google’s artificial intelligence (AI) and cloud computing capabilities toward developing new drugs, through a collaboration whose value was not disclosed.

The companies said they have agreed to create a virtual Innovation Lab to “radically” transform how future medicines and health services are developed and delivered.

Sanofi has articulated three goals for the collaboration with Google: better understand patients and diseases, increase Sanofi’s operational efficiency, and improve the experience of Sanofi patients and customers.

The challenges of making AI work at the edge—that is, making it reliable enough to do its job and then justifying the additional complexity and expense of putting it in our devices—are monumental. Existing AI can be inflexible, easily fooled, unreliable and biased. In the cloud, it can be trained on the fly to get better—think about how Alexa improves over time. When it’s in a device, it must come pre-trained, and be updated periodically. Yet the improvements in chip technology in recent years have made it possible for real breakthroughs in how we experience AI, and the commercial demand for this sort of functionality is high.

AI is moving from data centers to devices, making everything from phones to tractors faster and more private. These newfound smarts also come with pitfalls.

Satellite imagery is becoming ubiquitous. Research has demonstrated that artificial intelligence applied to satellite imagery holds promise for automated detection of war-related building destruction. While these results are promising, monitoring in real-world applications requires high precision, especially when destruction is sparse and detecting destroyed buildings is equivalent to looking for a needle in a haystack. We demonstrate that exploiting the persistent nature of building destruction can substantially improve the training of automated destruction monitoring. We also propose an additional machine-learning stage that leverages images of surrounding areas and multiple successive images of the same area, which further improves detection significantly. This will allow real-world applications, and we illustrate this in the context of the Syrian civil war.

Existing data on building destruction in conflict zones rely on eyewitness reports or manual detection, which makes them generally scarce, incomplete, and potentially biased. This lack of reliable data imposes severe limitations for media reporting, humanitarian relief efforts, human-rights monitoring, reconstruction initiatives, and academic studies of violent conflict. This article introduces an automated method of measuring destruction in high-resolution satellite images using deep-learning techniques combined with label augmentation and spatial and temporal smoothing, which exploit the underlying spatial and temporal structure of destruction. As a proof of concept, we apply this method to the Syrian civil war and reconstruct the evolution of damage in major cities across the country. Our approach allows generating destruction data with unprecedented scope, resolution, and frequency, and makes use of the ever-higher frequency at which satellite imagery becomes available.
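To make the idea of exploiting persistence concrete, here is a minimal Python sketch, not the authors' implementation. It assumes that each location has a time-ordered series of images with sparse labels, that a destroyed building stays destroyed, and that a simple moving average can stand in for the temporal smoothing stage. The function names `augment_labels` and `temporal_smooth` and the `window` parameter are hypothetical.

```python
from typing import List, Optional


def augment_labels(labels: List[Optional[int]]) -> List[Optional[int]]:
    """Propagate sparse labels along one location's image time series.

    labels[t] is 1 (destroyed), 0 (intact), or None (unlabeled).
    Assumption: destruction is persistent, so every image before an
    'intact' observation is intact and every image after a 'destroyed'
    observation is destroyed.
    """
    n = len(labels)
    # Latest time step at which the location was observed intact.
    last_intact = max((t for t, y in enumerate(labels) if y == 0), default=-1)
    # Earliest time step at which the location was observed destroyed.
    first_destroyed = min((t for t, y in enumerate(labels) if y == 1), default=n)

    out = list(labels)
    for t in range(n):
        if out[t] is None:
            if t <= last_intact:
                out[t] = 0
            elif t >= first_destroyed:
                out[t] = 1
            # Steps between the two observations stay unlabeled.
    return out


def temporal_smooth(scores: List[float], window: int = 1) -> List[float]:
    """Average each per-image score with its neighbors in time.

    A stand-in for temporal smoothing: an isolated spike in predicted
    destruction is damped when earlier and later images disagree.
    """
    smoothed = []
    for t in range(len(scores)):
        lo = max(0, t - window)
        hi = min(len(scores), t + window + 1)
        smoothed.append(sum(scores[lo:hi]) / (hi - lo))
    return smoothed


if __name__ == "__main__":
    print(augment_labels([None, 0, None, None, 1, None]))
    print(temporal_smooth([0.0, 0.9, 0.0]))
```

In this sketch, one "intact" and one "destroyed" observation are enough to label every image outside the interval between them, which is how persistence can multiply scarce training labels. The same per-location series could also be averaged across neighboring locations for spatial smoothing.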

The “technology intelligence engine” uses A.I. to sift through hundreds of millions of documents online, then uses all that information to spot trends.

Build back better

Tarraf was fed up with incorrect predictions. He wanted a more data-driven approach to forecasting that could help investors, governments, pundits, and anyone else to get a more accurate picture of the shape of tech-yet-to-come. Not only could this potentially help make money for his firm, but it could also, he suggested, illuminate some of the blind spots people have which may lead to bias.

Tarraf’s technology intelligence engine uses natural language processing (NLP) to sift through hundreds of millions of documents, ranging from academic papers and research grants to startup funding details, social media posts, and news stories, in dozens of different languages. The futurist and science fiction writer William Gibson famously opined that the future is already here, it’s just not evenly distributed. In other words, tomorrow’s technology has already been invented, but right now it’s hidden away in research labs, patent applications, and myriad other silos around the world. The technology intelligence engine seeks to unearth and aggregate these hidden developments.

“It would be difficult to introduce a single thing and it causes crime to go down,” one expert said.

“Are we seeing dramatic changes since we deployed the robot in January?” Lerner, the Westland spokesperson, said. “No. But I do believe it is a great tool to keep a community as large as this, to keep it safer, to keep it controlled.”

For its part, Knightscope maintains on its website that the robots “predict and prevent crime,” without much evidence that they do so. Experts say this is a bold claim.

“It would be difficult to introduce a single thing and it causes crime to go down,” said Ryan Calo, a law professor at the University of Washington, comparing the Knightscope robots to a “roving scarecrow.”