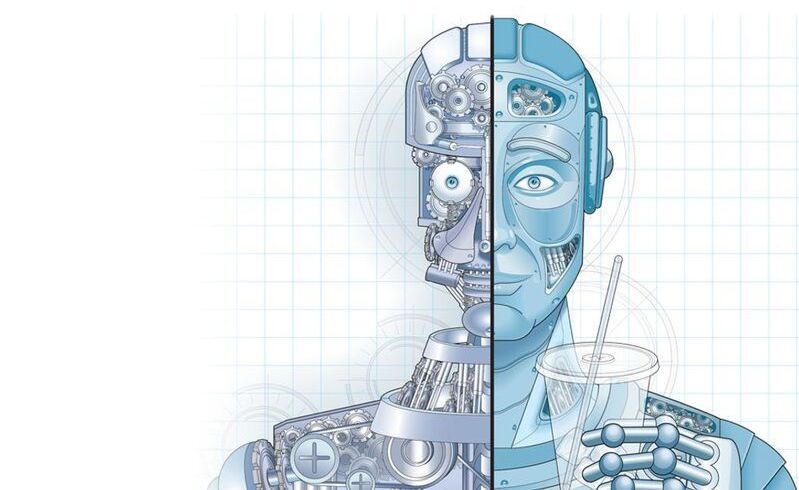

The US Defense Advanced Research Projects Agency (DARPA) has selected three teams of researchers, led by Raytheon, BAE Systems, and Northrop Grumman, to develop event-based infrared (IR) camera technologies under the Fast Event-based Neuromorphic Camera and Electronics (FENCE) program. The program is designed to make computer vision cameras more efficient by mimicking how the human brain processes information. FENCE aims to develop a new class of low-latency, low-power, event-based infrared focal plane arrays (FPAs), along with digital signal processing (DSP) and machine learning (ML) algorithms. These neuromorphic camera technologies are intended to enable intelligent sensors that can handle more dynamic scenes and aid future military applications.

New intelligent event-based (neuromorphic) cameras can handle more dynamic scenes.