Circa 2019

For better or worse, machine learning and big data are poised to transform the study of the heavens.

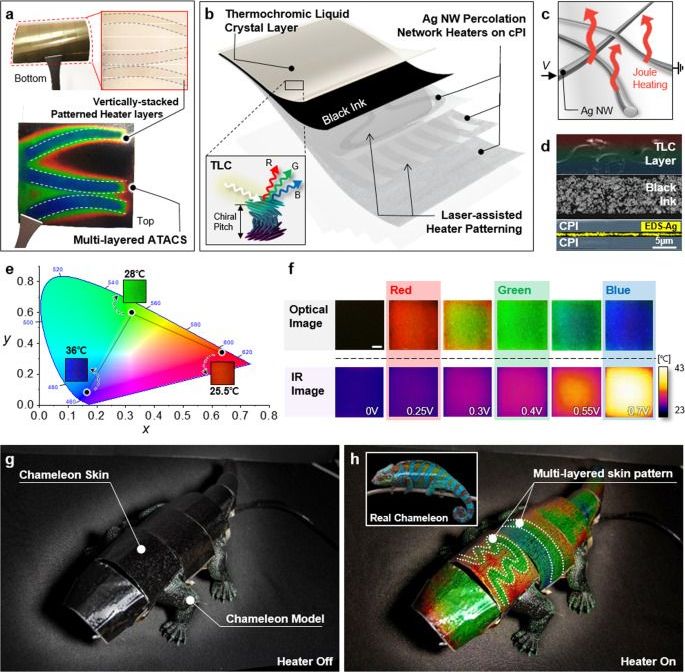

Artificial camouflage is the functional mimicry of the natural camouflage observed in a wide range of species [1,2,3]. Since the 1800s in particular, camouflage technology has been studied extensively for military purposes, where it serves both to increase survivability and to identify an otherwise anonymous object as belonging to a specific military force [4,5]. Building on these earlier studies of camouflage technology and natural camouflage, artificial camouflage is becoming an important subject for recently evolving technologies such as advanced soft robotics [1,6,7,8] and, in particular, electronic skin [9,10,11,12]. Background matching and disruptive coloration are generally regarded as the underlying principles of camouflage, covering many detailed subprinciples [13], and they require not only simple coloration but also the selective expression of various disruptive patterns according to the background. While the active camouflage found in nature mostly relies on the mechanical action of muscle cells [14,15,16], artificial camouflage need not match the actual anatomy of color-changing animals and can therefore incorporate much more diverse strategies [17,18,19,20,21,22]. Even so, a dominant technology for practical artificial camouflage in the visible regime (400–700 nm wavelength), especially in the RGB domain, has not yet been established. Since the most familiar and direct camouflage strategy is to exhibit a color similar to the background [23,24,25], a prerequisite for artificial camouflage at the unit device level is the ability to display a wide range of the visible spectrum that can be controlled and changed on demand [26,27,28]. At the same time, the unit should be flexible and mechanically robust, especially for wearable use, so that it can cover the target body as attachable patches without interfering with internal structures, while remaining compatible with ambient conditions and the wearer's movements [29,30].

Integrating the unit device into a complete artificial camouflage system, on the other hand, raises several issues beyond the preceding requirements. First, the complexity of the unit device is expected to increase as the sensor and the control circuit, which are required to autonomously retrieve and reproduce the adjacent color, are integrated into a multiplexed configuration. In addition, for a body of nontrivial size, concealment with a single unit is no longer effective unless the background is a single uniform color. As a simple solution to this problem, unit devices are often laterally pixelated [12,18] to achieve spatial variation in the coloration. Since the resolution is determined by the number of pixelated units and their sizes, a high-resolution artificial camouflage device that incorporates densely packed arrays of individually addressable multiplexed units leads to an explosive increase in system complexity. Yet, purely from the perspective of camouflage performance, delivering high-spatial-frequency information is important for more natural concealment, because it articulates the texture and patterns of the surface to mimic the microhabitats of the living environment [31,32]. As a result, developing autonomous and adaptive artificial camouflage at the complete device level with natural camouflage characteristics is an exceptionally challenging task.
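The scaling argument above can be made concrete with a small calculation. The following is a minimal sketch, not taken from the study: the function name `unit_count` and the 400 mm × 200 mm surface are hypothetical, and it assumes square units tiled on a regular grid with one individually addressed unit per pixel.

```python
def unit_count(width_mm: int, height_mm: int, pitch_mm: int) -> int:
    """Number of square units of side pitch_mm needed to tile a surface.

    Integer millimetres are used so that the ceiling division is exact.
    """
    cols = -(-width_mm // pitch_mm)   # ceiling division
    rows = -(-height_mm // pitch_mm)
    return rows * cols


# Hypothetical 400 mm x 200 mm surface at three unit sizes.
for pitch in (100, 50, 10):
    print(f"pitch {pitch:>3} mm -> {unit_count(400, 200, pitch)} units")
```

Under these assumptions, halving the unit size quadruples the number of units that must be sensed and driven, which is the quadratic growth behind the "explosive increase" in complexity described above.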

Our strategy is to combine thermochromic liquid crystal (TLC) ink with vertically stacked multilayer silver (Ag) nanowire (NW) heaters to tackle the obstacles described above and to develop a more practical, scalable, and high-performance artificial camouflage at the complete device level. The tunable coloration of TLC, whose reflective spectrum can be controlled over a wide range of the visible spectrum within a narrow temperature range [33,34], has previously been acknowledged as a potential candidate for artificial camouflage applications [21,34], but its use has focused more on temperature measurement [35,36,37,38] owing to its high sensitivity to temperature change. This sensitivity is indeed unfavorable for thermal stability against changes in the external environment, but it also enables a compact input range and low power consumption during operation once the temperature is accurately controlled.

Chameleons have long been a symbol of adaptation because of their ability to adjust their iridophores—a special layer of cells under the skin—to blend in with their surroundings.

In a new study published today in Nature Communications, researchers from South Korea have created a robot chameleon capable of imitating its biological counterpart, paving the way for new artificial camouflage technology.

Since 1988 and the formation of the Posthuman Movement, articles by early adopters like Max More have been a sign that our message was being received, although I always argued on various Extropian and Transhuman bulletin boards, Yahoo groups, etc. that “Trans” was a redundant middle and that we should move straight to Posthuman, now armed with the new MVT knowledge (also figures on the CDR). There will be a new edition of World Philosophy, the first this millennium, to coincide with various Posthuman University events later this year. Here is the text:

THE EXTROPIAN PRINCIPLES V. 2.01 August 7 1992.

Max More Executive Director, Extropy Institute.

1. BOUNDLESS EXPANSION: Seeking more intelligence, wisdom, and personal power, an unlimited lifespan, and removal of natural, social, biological, and psychological limits to self-actualization and self-realization. Overcoming limits on our personal and social progress and possibilities. Expansion into the universe and infinite existence.

2. SELF-TRANSFORMATION: A commitment to continual moral, intellectual, and physical self-improvement, using reason and critical thinking, personal responsibility, and experimentation. Biological and neurological augmentation.

3. INTELLIGENT TECHNOLOGY: Applying science and technology to transcend “natural” limits imposed by our biological heritage and environment.

4. SPONTANEOUS ORDER: Promotion of decentralized, voluntaristic.

For drone racing enthusiasts. 😃

If you follow autonomous drone racing, you likely remember the crashes as much as the wins. In drone racing, teams compete to see which vehicle is better trained to fly fastest through an obstacle course. But the faster drones fly, the more unstable they become, and at high speeds their aerodynamics can be too complicated to predict. Crashes, therefore, are a common and often spectacular occurrence.

But if they can be pushed to be faster and more nimble, drones could be put to use in time-critical operations beyond the race course, for instance to search for survivors in a natural disaster.

Now, aerospace engineers at MIT have devised an algorithm that helps drones find the fastest route around obstacles without crashing. The new algorithm combines simulations of a drone flying through a virtual obstacle course with data from experiments of a real drone flying through the same course in a physical space.

VLADIVOSTOK, Russia — To see Russia’s ambitions for its own version of Silicon Valley, head about 5,600 miles east of Moscow, snake through Vladivostok’s hills and then cross a bridge from the mainland to Russky Island. It’s here — a beachhead on the Pacific Rim — that the Kremlin hopes to create a hub for robotics and artificial intelligence innovation with the goal of boosting Russia’s ability to compete with the United States and Asia.

On Russia’s Pacific shores, the Kremlin is trying to build a beachhead among the Asian tech powers.