Normally, computer chips consist of electronic components that always do the same thing. In the future, however, more flexibility will be possible: new types of adaptive transistors can be switched in a flash, so that they can perform different logical tasks as needed. This fundamentally changes the possibilities of chip design and opens up completely new opportunities in fields such as artificial intelligence, neural networks, or even logic that works with more values than just 0 and 1.

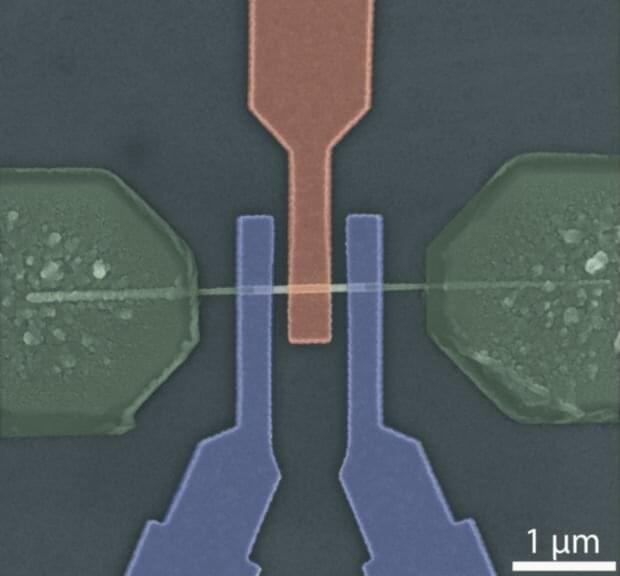

To achieve this, scientists at TU Wien (Vienna) relied not on the usual silicon technology but on germanium. This was a success: the most flexible transistor in the world has now been produced using germanium, and it has been presented in the journal ACS Nano. The special properties of germanium and the use of dedicated program gate electrodes made it possible to create a prototype for a new component that may usher in a new era of chip technology.