Please welcome Samantha Higgins, who describes herself as a professional writer with a passion for research, observation, and innovation. She lives in Portland, Oregon, with her husband and twin boys. When she’s not writing about artificial intelligence and other technology subjects, Samantha loves kayaking and reading creative non-fiction. In this, her first contribution to 21st Century Tech Blog, she talks about the progress being made by those who create the neural networks that help computers learn the patterns in human existence. That’s what machine learning is all about.

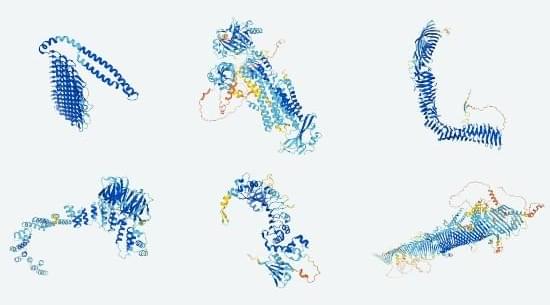

Machine learning is the technology behind language translation applications, word prediction when composing emails and texts, and the ordering of posts within social media feeds. Many industries use it, from healthcare, where it can aid in medical diagnosis and the interpretation of radiology images, to transportation, where it helps operate autonomous vehicles.

Machine learning is a subcategory of artificial intelligence (AI) made up of software tools that learn from data without being explicitly programmed. Many companies deploying AI today are primarily using machine learning to help reduce labor costs and increase productivity.
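
To make "learning without being explicitly programmed" concrete, here is a minimal sketch in Python. It is an illustration only, not a method described in this post: the temperature data, the `fit_line` helper, and the variable names are all assumptions chosen for the example. The program is never given the Celsius-to-Fahrenheit formula. It estimates the relationship from a handful of example pairs using ordinary least squares, one of the simplest forms of machine learning.

```python
# Hypothetical example: learn a conversion rule from examples
# instead of hard-coding the formula.

def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How x and y vary together, and how much x varies on its own.
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Training examples (assumed for illustration): Celsius readings
# paired with the matching Fahrenheit readings.
celsius = [-40.0, 0.0, 20.0, 37.0, 100.0]
fahrenheit = [-40.0, 32.0, 68.0, 98.6, 212.0]

# "Training": estimate the rule from the examples.
slope, intercept = fit_line(celsius, fahrenheit)

# "Prediction": apply the learned rule to a value not in the examples.
predicted = slope * 25.0 + intercept
print(f"Learned rule: F = {slope:.2f} * C + {intercept:.2f}")
print(f"Predicted Fahrenheit for 25 C: {predicted:.1f}")
```

The neural networks discussed in this post work on the same principle at a much larger scale: they adjust many internal parameters, rather than just a slope and an intercept, until their outputs match the examples they are shown.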