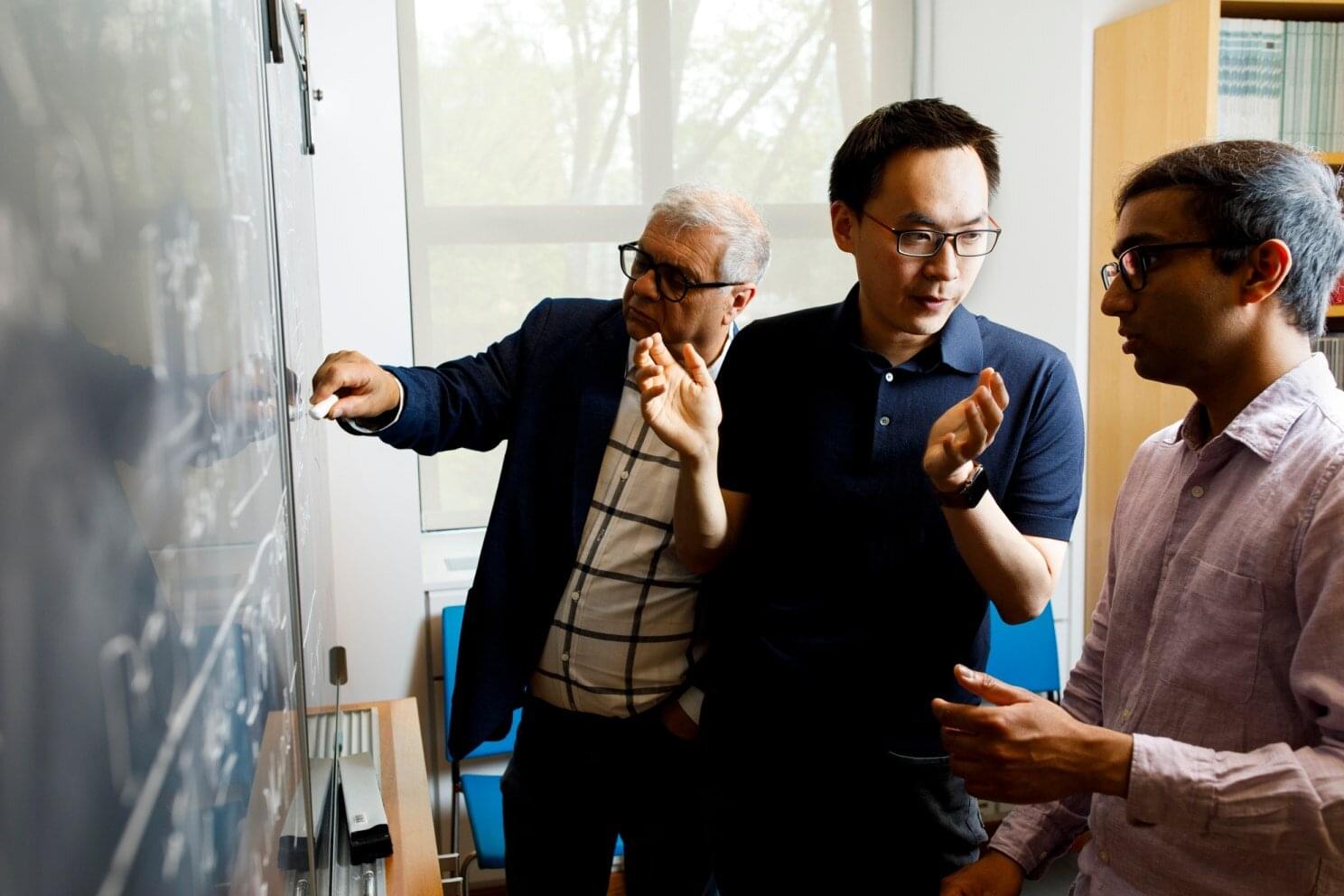

UCLA researchers have found that biological brains and artificial intelligence systems develop remarkably similar neural patterns during social interaction. The first-of-its-kind study shows that when mice interact socially, specific brain cell types synchronize in “shared neural spaces,” and that AI agents develop analogous patterns when engaging in social behaviors.

The study, “Inter-brain neural dynamics in biological and artificial intelligence systems,” appears in the journal Nature.

The research marks a striking convergence of neuroscience and artificial intelligence, two of today’s most rapidly advancing fields. By directly comparing how biological brains and AI systems process social information, the researchers reveal fundamental principles that govern social cognition across different types of intelligent systems.