A host of companies provide a remote, automated workforce for conducting experiments around the clock.

There has been a massive tidal wave of tech innovation over the last couple of years. Some apps and platforms offer basic services for the home or office. Others ignite your imagination.

Gil Perry, CEO and cofounder of D-ID, an Israel-based tech company, has created something beautiful and touching. Using artificial intelligence, the company has built a live portrait feature that animates photos of long-lost relatives, or anyone else you’d like to see, as if they were in the room with you. The technology makes the people in the photos look realistic and natural.

The feature, Deep Nostalgia, lets users upload a photo of a person or group of people to see individual faces animated by AI. People have been able to breathe life into their old black-and-white photos of grandma and grandpa that have been stored in boxes up in the attic.

Machines that become conscious and self-aware and that have feelings would cross an extraordinary threshold. We would have created not just life, but conscious beings.

There has already been massive debate about whether that will ever happen. While the discussion is largely about supra-human intelligence, that is not the same thing as consciousness.

Now the massive leaps in the quality of AI conversational bots are leading some to believe that we have passed that threshold and that the AI we have created is already sentient.

Read the article: ► https://medium.com/towards-artificial-intelligence/a-new-bra…d127107db9

Paper: ► https://www.nature.com/articles/s42256-020-00237-3.epdf

Watch MIT’s video: ► https://www.youtube.com/watch?v=8KBOf7NJh4Y&feature=emb_titl…l=MITCSAIL

GitHub: ► https://github.com/mlech26l/keras-ncp

Colab tutorials:

The basics of Neural Circuit Policies: ► https://colab.research.google.com/drive/1IvVXVSC7zZPo5w-PfL3…sp=sharing

How to stack NCP with other types of layers: ► https://colab.research.google.com/drive/1-mZunxqVkfZVBXNPG0k…sp=sharing

Blake Lemoine reached his conclusion after conversing since last fall with LaMDA, Google’s artificially intelligent chatbot generator, which he calls part of a “hive mind.” He was supposed to test whether his conversation partner used discriminatory language or hate speech.

As he and LaMDA messaged each other recently about religion, the AI talked about “personhood” and “rights,” he told The Washington Post.

It was just one of the many startling “talks” Lemoine has had with LaMDA. He has linked on Twitter to one — a series of chat sessions with some editing (which is marked).

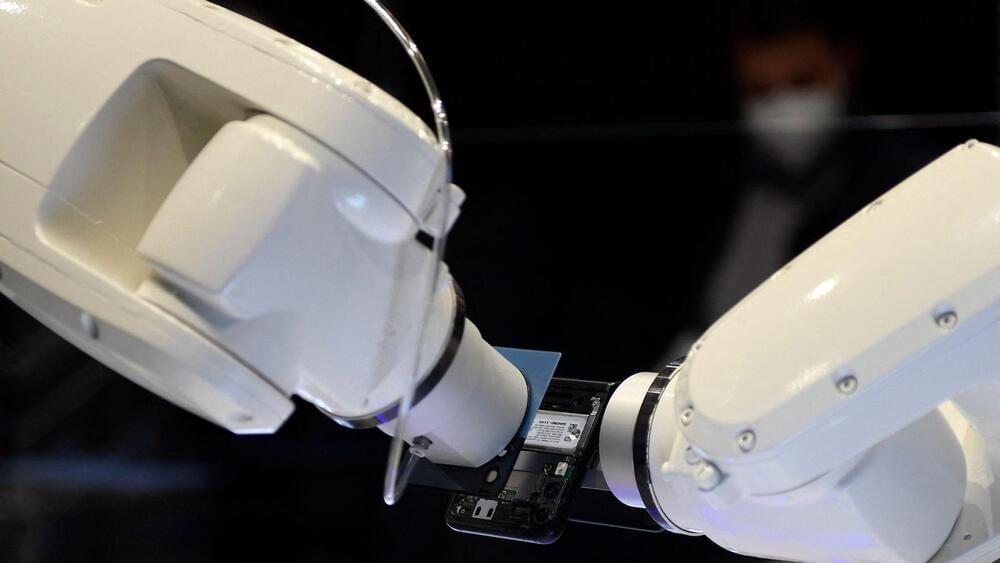

“Throughout our history, we’ve always had to find ways to stay ahead,” Kim told Rest of World. “Automation is the next step in that process.”

Speefox’s factory is 75% automated, representing South Korea’s continued push away from human labor. Part of that drive is labor costs: South Korea’s minimum wage has climbed, rising 5% just this year.

But the most recent impetus is legal liability for worker death or injury. In January, a law came into effect called the Serious Disasters Punishment Act, which says, effectively, that if workers die or sustain serious injuries on the job, and courts determine that the company neglected safety standards, the CEO or high-ranking managers could be fined or go to prison.

A team of researchers affiliated with multiple institutions in the U.S., including Google Quantum AI, together with a colleague in Australia, has developed a theory suggesting that quantum computers should be exponentially faster than classical machines on some learning tasks. In their paper, published in the journal Science, the group describes the theory and the results of testing it on Google’s Sycamore quantum computer. Vedran Dunjko of Leiden University has published a Perspective piece in the same journal issue outlining the idea behind combining quantum computing with machine learning to provide a new level of computer-based learning systems.

Machine learning is an approach in which computers trained on datasets make informed guesses about new data. Quantum computing uses subatomic particles as qubits, with the aim of running some computations many times faster than is possible with classical computers. In this new effort, the researchers considered the idea of running machine-learning applications on quantum computers, possibly making them better at learning, and thus more useful.
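
To make the classical half of that description concrete, here is a minimal sketch of a program that is trained on a dataset and then makes an informed guess about new data. It is not the researchers' method and has nothing quantum in it. The nearest-centroid technique, the function names `train` and `predict`, and the toy measurements are all assumptions chosen for illustration.

```python
from collections import defaultdict
from math import dist  # Euclidean distance, available in Python 3.8+


def train(samples):
    """Compute one centroid (mean point) per label from (features, label) pairs."""
    grouped = defaultdict(list)
    for features, label in samples:
        grouped[label].append(features)
    # Average each feature column separately to get the centroid of a label.
    return {
        label: tuple(sum(column) / len(points) for column in zip(*points))
        for label, points in grouped.items()
    }


def predict(centroids, features):
    """Guess the label whose centroid lies closest to the new point."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical toy dataset: (height_cm, weight_kg) -> label
training_data = [
    ((20.0, 4.0), "cat"),
    ((25.0, 5.0), "cat"),
    ((60.0, 30.0), "dog"),
    ((70.0, 35.0), "dog"),
]

model = train(training_data)
guess = predict(model, (22.0, 4.5))  # a point the model has not seen before
print(guess)
```

The "learning" here is just averaging the examples for each label, and the "informed guess" is picking the closest average. Real systems use far richer models, but the train-then-predict shape is the same.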

To find out whether the idea might be possible and, more importantly, whether the results would be better than those achieved on classical computers, the researchers posed the problem in a novel way: they devised a machine-learning task that would learn via experiments repeated many times over. They then developed theories describing how a quantum system could be used to conduct such experiments and to learn from them. They were able to prove that a quantum computer could do it, and that it could do it much better than a classical system. In fact, they found that the number of experiments needed to learn a concept was four orders of magnitude lower than for classical systems. The researchers then built such a system, tested it on Google’s Sycamore quantum computer, and confirmed their theory.