Scientists from the Institute of Industrial Science at The University of Tokyo have fabricated three-dimensional, vertically formed field-effect transistors for high-density data storage devices, using a ferroelectric gate insulator and an atomic-layer-deposited oxide semiconductor channel. Furthermore, by using an antiferroelectric material instead of a ferroelectric one, they found that only a tiny net charge was required to erase data, which leads to more efficient write operations. This work may allow for new, even smaller and more eco-friendly memory devices.

While consumer flash drives already offer huge improvements in size, capacity, and affordability over earlier data-storage media, new machine learning and Big Data applications continue to drive demand for innovation. In addition, mobile cloud-enabled devices and future Internet of Things nodes will require memory that is both energy-efficient and compact. However, current flash memory technologies require relatively large currents to read or write data.

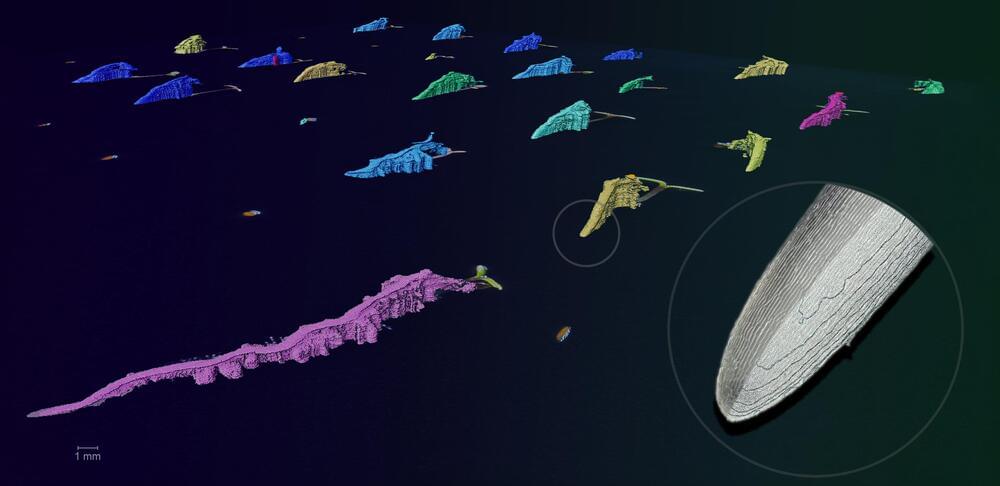

Now, a team of researchers at The University of Tokyo has developed a proof-of-concept 3D stacked memory cell based on ferroelectric and antiferroelectric field-effect transistors (FETs) with an atomic-layer-deposited oxide semiconductor channel. These FETs can store ones and zeros in a non-volatile manner, which means they do not require power to be supplied at all times. The vertical device structure increases information density and reduces operating energy requirements. Hafnium oxide and indium oxide layers were deposited in a vertical trench structure. Ferroelectric materials have electric dipoles that are most stable when aligned in the same direction. Ferroelectric hafnium oxide spontaneously enables the vertical alignment of the dipoles. Information is stored as the degree of polarization in the ferroelectric layer, which the system can read through the resulting changes in electrical resistance.