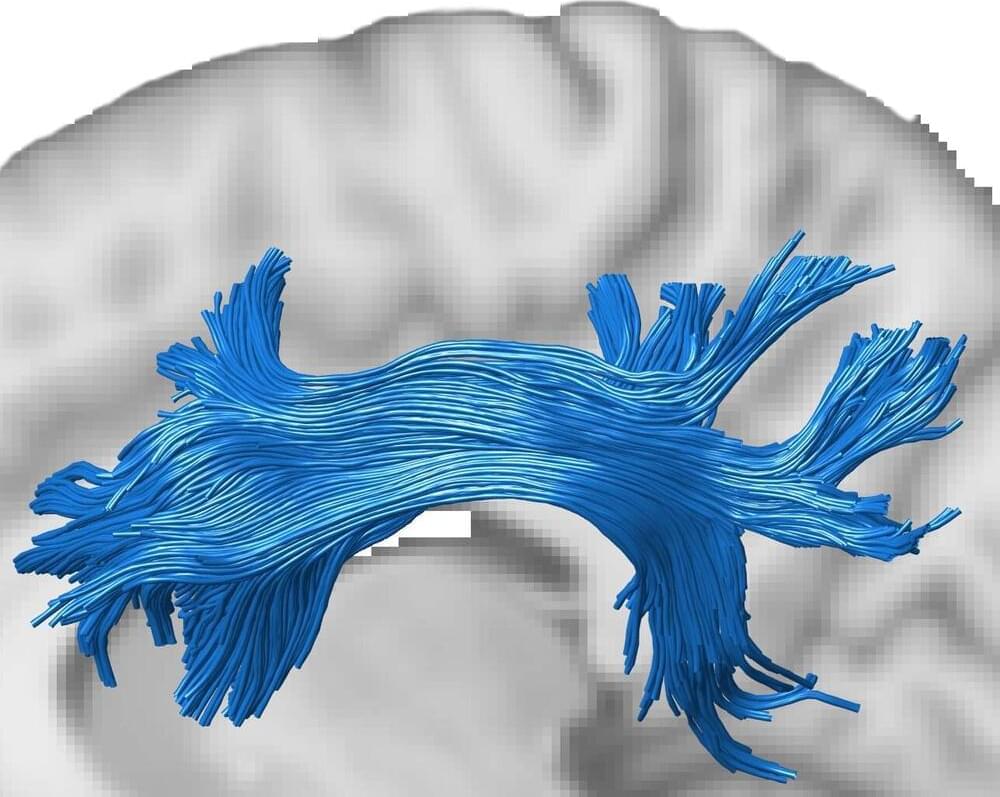

This movie anticipates the idea of mind uploading.

In a post-nuclear-war society, blue-skinned, silver-eyed human-like robots have become a common sight as the surviving population suffers from a decreasing birth rate and has grown dependent on their assistance. A fanatical organization tries to prevent the robots from becoming too human, fearing that they will take over. Meanwhile, a scientist experiments with creating human replicas that have genuine emotions and memories…

Enjoy ;)