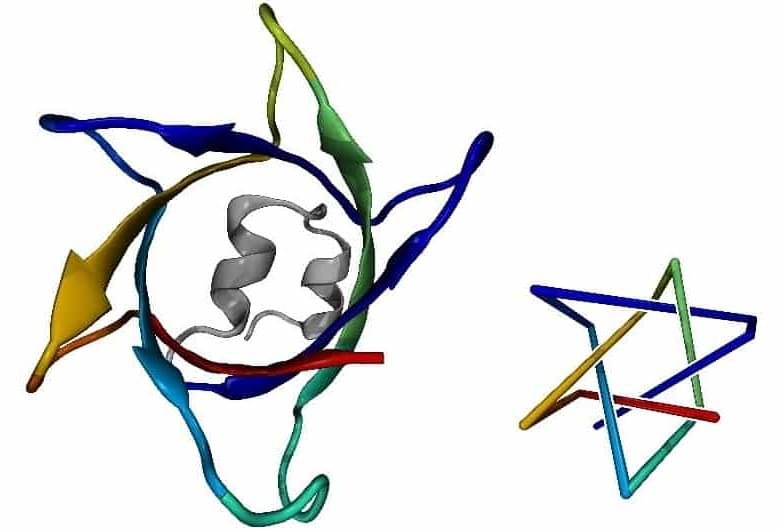

Using AlphaFold, the artificial intelligence (AI) system developed by Google’s DeepMind, scientists in Germany and the US have predicted the most topologically complex knot ever found in a protein. Their complete analysis of the data produced by AlphaFold also revealed the first composite knots in proteins: topological structures containing two separate knots on the same string. If the predicted protein knots can be recreated experimentally, that would help to verify the accuracy of AlphaFold’s predictions.

Proteins can fold to form complex topological structures. The most intriguing of these are protein knots – shapes that would not disentangle if the protein were pulled from both ends. Peter Virnau, a theoretical physicist at Johannes Gutenberg University Mainz, tells Physics World that there are currently around 20 to 30 known knotted proteins. These structures, Virnau explains, raise interesting questions around how they fold and why they exist.

A protein’s shape can be closely linked with its function, but while there are a few theories on the function and purpose of protein knots, there is little hard evidence to back them up. Virnau says that knots might help to keep proteins stable, for instance by making them particularly resistant to thermal fluctuations, but these remain open questions. While protein knots are rare, they also appear to be highly conserved by evolution.