Behind China’s AI boom lies a cooling crisis.

To fuel the AI race, China is building massive data centers. But keeping them cool is becoming the country’s biggest engineering challenge.

Researchers at UNIST have developed an innovative AI technology capable of reconstructing highly detailed three-dimensional (3D) models of companion animals from a single photograph, enabling realistic animations. This breakthrough allows users to experience lifelike digital avatars of their companion animals in virtual reality (VR), augmented reality (AR), and metaverse environments.

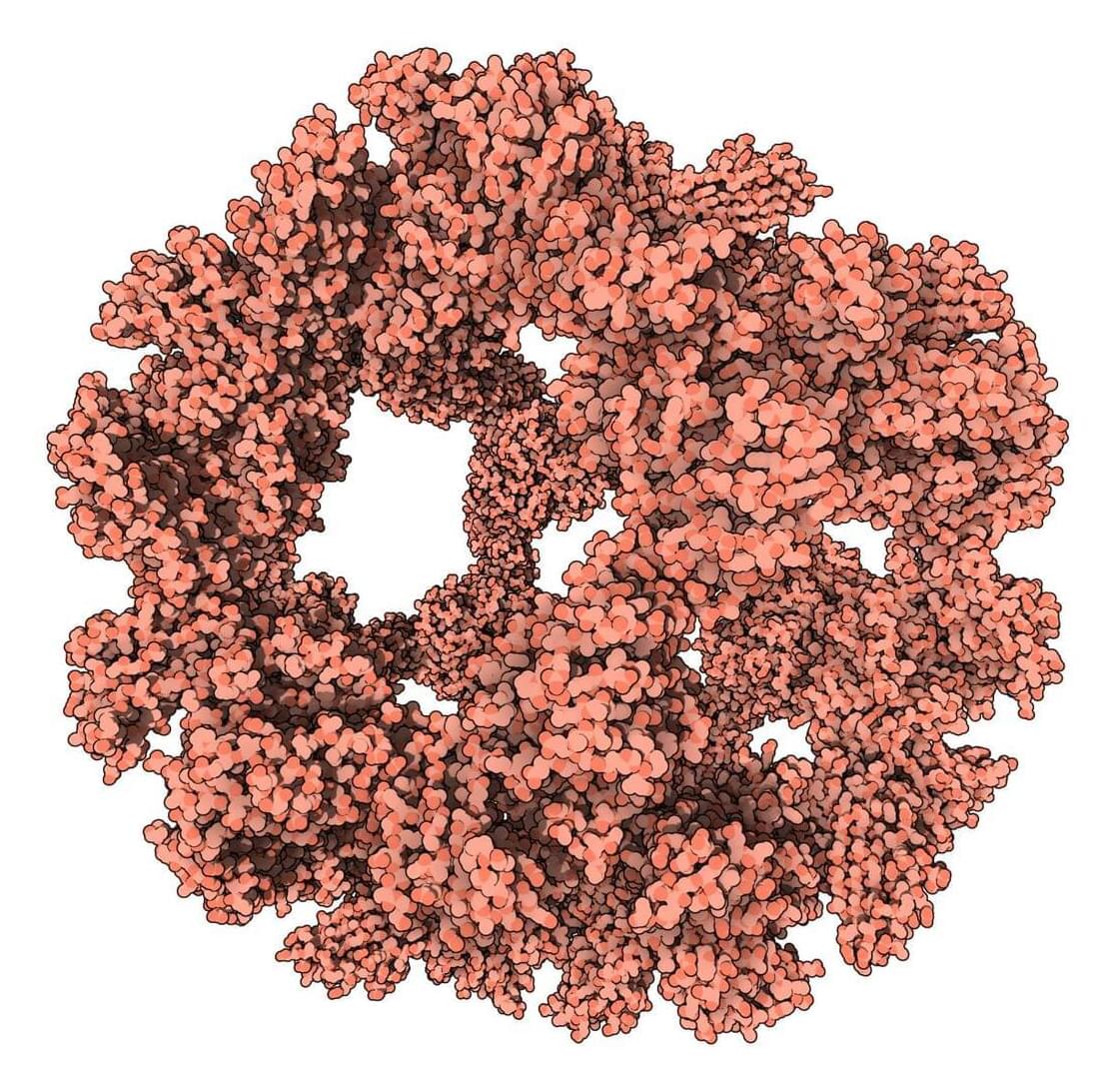

Researchers have tapped into the power of generative artificial intelligence to aid them in the fight against one of humanity’s most pernicious foes: antibiotic-resistant bacteria. Using a model trained on a library of about 40,000 chemicals, scientists were able to build never-before-seen antibiotics that killed two of the most notorious multidrug-resistant bacteria on earth.

Ultrahigh-frequency bands are easily blocked by objects, so users can lose transmissions walking between rooms or even passing a bookcase. Now, researchers at Princeton Engineering have developed a machine-learning system that could allow ultrahigh-frequency transmissions to dodge those obstacles.