Quantum chip beats classical AI in real-world test, saving energy and hinting at a greener AI future.

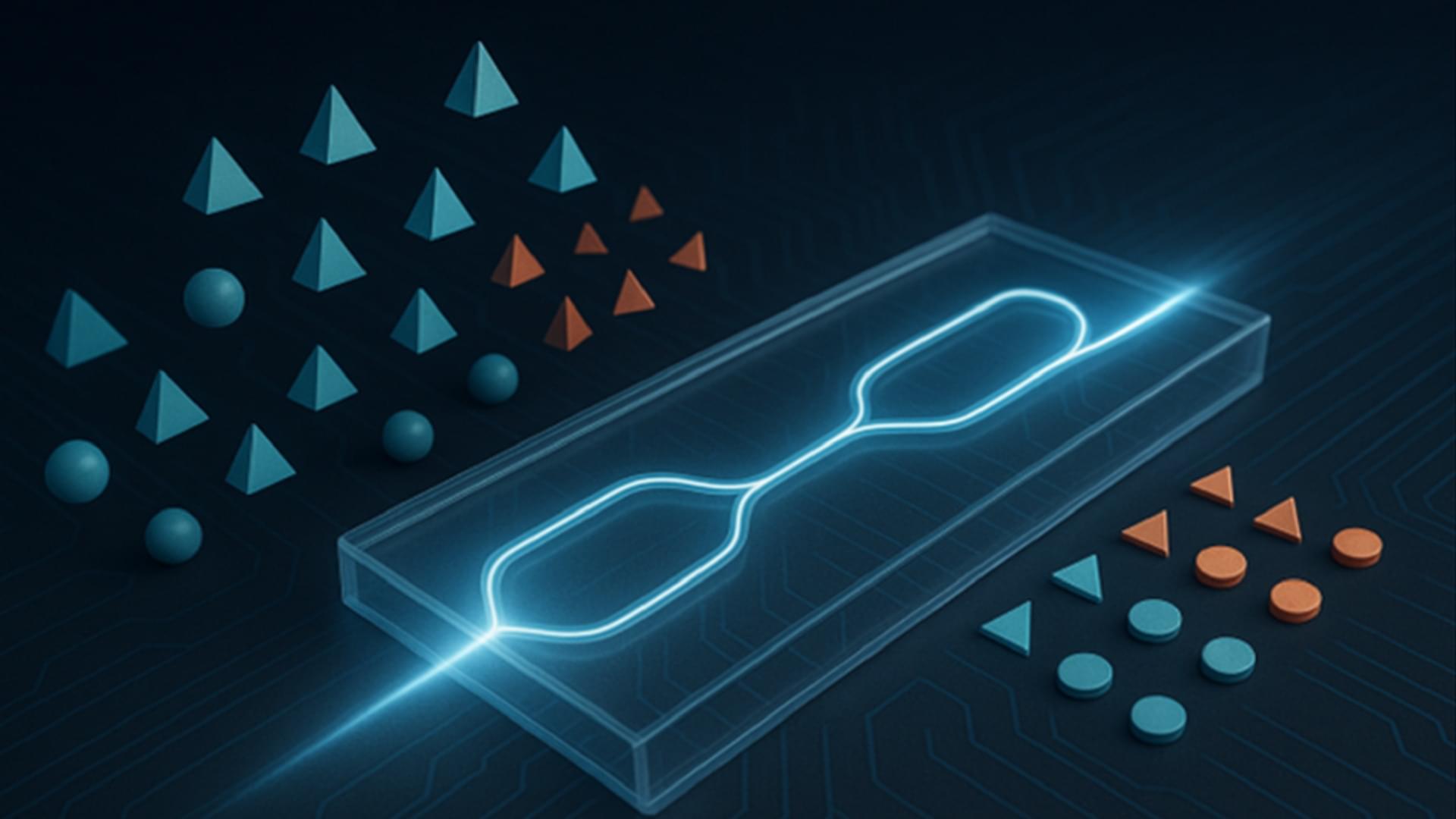

Facial morphology is a distinctive biometric marker that offers invaluable insights into personal identity, especially in forensic science. With the advent of high-throughput sequencing, reconstructing 3D human facial images from DNA is emerging as a revolutionary approach to identifying individuals from unknown biological specimens. Inspired by artificial intelligence techniques for text-to-image synthesis, this work proposes Difface, a multi-modality model designed to reconstruct 3D facial images from DNA alone. Specifically, Difface first uses a transformer and a spiral convolution network to map high-dimensional single-nucleotide polymorphisms (SNPs) and 3D facial images, respectively, into the same low-dimensional feature space, establishing the association between the two modalities in that latent space in a contrastive manner. It then incorporates a diffusion model to reconstruct facial structures from the SNP features. Applied to a Han Chinese database of 9,674 paired SNP profiles and 3D facial images, Difface demonstrates excellent performance in DNA-to-3D-image alignment and reconstruction and characterizes individual genomic information. In addition, including phenotype information in Difface further improves the quality of 3D reconstruction; for example, Difface can generate 3D facial images of individuals solely from their DNA data while projecting their appearance at various future ages. This work represents pioneering research in the de novo generation of human facial images from individual genomic information.
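The abstract says only that the SNP and face embeddings are aligned "in a contrastive manner." A common way to do this is a CLIP-style symmetric InfoNCE loss, and the minimal sketch below assumes that objective. The function names, the temperature of 0.07, and the toy 3-dimensional embeddings are illustrative assumptions, not Difface's published settings. Row i of each embedding list is assumed to come from the same person, so the matching pairs lie on the diagonal of the similarity matrix.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy(logits_row, target):
    """Cross-entropy of one row of logits against the index of the positive pair."""
    m = max(logits_row)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits_row))
    return log_sum_exp - logits_row[target]

def symmetric_info_nce(snp_emb, face_emb, temperature=0.07):
    """Assumed CLIP-style loss: row i of snp_emb and face_emb belong to the same person."""
    snp = [l2_normalize(v) for v in snp_emb]
    face = [l2_normalize(v) for v in face_emb]
    n = len(snp)
    # logits[i][j] = cosine similarity of SNP i and face j, divided by the temperature
    logits = [[sum(a * b for a, b in zip(snp[i], face[j])) / temperature
               for j in range(n)] for i in range(n)]
    # SNP -> face: each SNP embedding should select its own face (the diagonal)
    loss_s2f = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # face -> SNP: the same matrix read column by column
    cols = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_f2s = sum(cross_entropy(cols[j], j) for j in range(n)) / n
    return (loss_s2f + loss_f2s) / 2

# Toy usage: correctly paired embeddings should give a lower loss than shuffled ones.
snp = [[1.0, 0.1, 0.0], [0.0, 1.0, 0.2], [0.1, 0.0, 1.0]]
face_aligned = [[0.9, 0.2, 0.0], [0.1, 1.0, 0.1], [0.0, 0.1, 0.9]]
face_shuffled = [face_aligned[1], face_aligned[2], face_aligned[0]]
print(symmetric_info_nce(snp, face_aligned) < symmetric_info_nce(snp, face_shuffled))
```

Averaging both directions means each modality is trained to retrieve the other, which matches the abstract's goal of a shared latent space where either side can be used as the query.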


This study introduced Difface, a de novo multi-modality model that reconstructs 3D facial images from DNA with remarkable precision by combining a generative diffusion process with a contrastive learning scheme. In comprehensive analyses and SNP-FACE matching tasks, Difface demonstrated superior performance in generating accurate facial reconstructions from genetic data. In particular, Difface could generate, or predict, 3D facial images of individuals solely from their DNA data at various future ages. Notably, the model's integration of transformer networks with spiral convolution and diffusion networks set a new benchmark for the fidelity of generated images to the real ones, as evidenced by its outstanding accuracy on critical facial landmarks and its reproduction of diverse facial features.
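The text does not define how the SNP-FACE matching tasks are scored. One plausible protocol is retrieval accuracy: for each SNP profile, rank all candidate faces by embedding similarity and count a hit if the true face lands in the top k. The sketch below assumes that protocol; the function name, the square similarity matrix, and the toy scores are hypothetical.

```python
def top_k_match_accuracy(sim, k=1):
    """sim[i][j]: similarity between SNP profile i and face j; face i is the true match."""
    hits = 0
    for i, row in enumerate(sim):
        # rank candidate faces by similarity, highest first
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        if i in ranked[:k]:
            hits += 1
    return hits / len(sim)

# Hypothetical 4 x 4 similarity matrix (e.g., cosine similarities of aligned embeddings)
sim = [
    [0.9, 0.2, 0.1, 0.3],
    [0.4, 0.5, 0.6, 0.1],  # the true face (index 1) ranks only second here
    [0.1, 0.2, 0.8, 0.3],
    [0.2, 0.1, 0.3, 0.7],
]
print(top_k_match_accuracy(sim, k=1))  # 0.75
print(top_k_match_accuracy(sim, k=2))  # 1.0
```

With N candidates, chance-level top-1 accuracy is 1/N, so any reported matching score is only meaningful alongside the size of the candidate pool.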

Difface's novel approach, which combines advanced neural network architectures, significantly outperforms existing models in genetic-to-phenotypic facial reconstruction. This superiority is attributed to its contrastive learning method, which aligns high-dimensional SNP data with 3D facial point clouds in a unified low-dimensional feature space, a process further enhanced by the use of diffusion networks to generate phenotypic characteristics. These advances contribute to the model's exceptional precision and its ability to capture the subtle genetic variations that influence facial morphology, a capability less evident in previous methods.
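The diffusion stage is described only at a high level. As a sketch of what "diffusion networks" usually means, the code below implements the forward noising step of a standard DDPM, assuming a linear noise schedule with 1,000 steps. Difface's actual schedule, step count, and SNP-conditioned denoiser are not given here, and the toy "face coordinates" are hypothetical. During training, a denoiser conditioned on the SNP embedding would learn to predict the added noise `eps` from `x_t`.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Assumed noise variances beta_1..beta_T, increasing linearly."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + t * step for t in range(num_steps)]

def alpha_bars(betas):
    """Cumulative products alpha_bar_t = prod over s <= t of (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def q_sample(x0, t, a_bar, rng):
    """Draw x_t ~ N(sqrt(a_bar_t) * x0, (1 - a_bar_t) * I) in a single step.

    Returns (x_t, eps); a conditional denoiser would be trained to recover eps.
    """
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    s, n = math.sqrt(a_bar[t]), math.sqrt(1.0 - a_bar[t])
    x_t = [s * x + n * e for x, e in zip(x0, eps)]
    return x_t, eps

rng = random.Random(0)
a_bar = alpha_bars(linear_beta_schedule(1000))
face_coords = [0.12, -0.40, 0.33, 0.05, 0.21, -0.18]  # hypothetical flattened (x, y, z) of two vertices
x_early, _ = q_sample(face_coords, 10, a_bar, rng)    # still close to the input
x_late, _ = q_sample(face_coords, 999, a_bar, rng)    # almost pure Gaussian noise
```

Because `alpha_bar_t` shrinks toward zero, late steps erase nearly all facial structure, and generation runs the learned reverse process from noise back to a face.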

Despite Difface's demonstrated strengths, there remain directions for improvement. Addressing these limitations will require a focused effort to increase the model's robustness and adaptability to diverse datasets. Future research should aim to incorporate variables such as age and BMI. Incorporating age would allow Difface to simulate age-related changes, enabling the generation of facial images at different life stages, an application that holds significant potential in both forensic science and medical diagnostics. Similarly, BMI could help the model account for variations in body composition, improving its ability to generate accurate facial reconstructions across a range of body types.

Researchers at Apple have released an eyebrow-raising paper that throws cold water on the “reasoning” capabilities of the latest, most powerful large language models.

In the paper, a team of machine learning experts makes the case that the AI industry is grossly overstating the ability of its top AI models, including OpenAI’s o3, Anthropic’s Claude 3.7, and Google’s Gemini.

Can artificial intelligence (AI) recognize and understand things as human beings do? By combining behavioral experiments with neuroimaging analyses, Chinese research teams have, for the first time, confirmed that multimodal large language models (LLMs) can spontaneously form an object concept representation system highly similar to that of humans. Put simply, AI can spontaneously develop human-level cognition, according to the scientists.

The study was conducted by research teams from the Institute of Automation, Chinese Academy of Sciences (CAS), the Institute of Neuroscience, CAS, and other collaborators.

The research paper was published online in Nature Machine Intelligence on June 9. The paper states that the findings advance the understanding of machine intelligence and inform the development of more human-like artificial cognitive systems.

Today, we’re excited to share V-JEPA 2, the first world model trained on video that enables state-of-the-art understanding and prediction, as well as zero-shot planning and robot control in new environments. As we work toward our goal of achieving advanced machine intelligence (AMI), it will be important that we have AI systems that can learn about the world as humans do, plan how to execute unfamiliar tasks, and efficiently adapt to the ever-changing world around us.

V-JEPA 2 is a 1.2 billion-parameter model built using the Meta Joint Embedding Predictive Architecture (JEPA), which we first shared in 2022. Our previous work has shown that JEPA performs well for modalities like images and 3D point clouds. Building on V-JEPA, our first model trained on video, which we released last year, V-JEPA 2 improves the action prediction and world modeling capabilities that enable robots to interact with unfamiliar objects and environments to complete a task. We're also sharing three new benchmarks to help the research community evaluate how well their existing models learn and reason about the world using video. By sharing this work, we aim to give researchers and developers access to the best models and benchmarks to help accelerate research and progress, ultimately leading to better and more capable AI systems that will help enhance people's lives.
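The defining idea of a joint embedding predictive architecture is that the model predicts the representation of missing content rather than its raw pixels. The minimal sketch below illustrates that idea, assuming an L1 loss between predicted and target embeddings and a target encoder updated as an exponential moving average (EMA) of the online encoder. The function names, the momentum value, and the toy numbers are hypothetical; Meta's actual masking strategy, loss details, and hyperparameters are not described here.

```python
def l1_latent_loss(predicted, target):
    """Mean absolute error between predicted and target embeddings.

    The comparison happens in representation space, not pixel space; the target
    embeddings come from a separate encoder and are treated as constants.
    """
    total, count = 0.0, 0
    for p_vec, t_vec in zip(predicted, target):
        for p, t in zip(p_vec, t_vec):
            total += abs(p - t)
            count += 1
    return total / count

def ema_update(target_params, online_params, momentum=0.998):
    """Move the target encoder's weights slowly toward the online encoder's weights."""
    return [momentum * t + (1.0 - momentum) * o for t, o in zip(target_params, online_params)]

pred = [[0.2, 0.4], [0.1, -0.3]]   # hypothetical predictor output for two masked video patches
tgt = [[0.25, 0.35], [0.0, -0.3]]  # hypothetical target-encoder embeddings of the same patches
print(l1_latent_loss(pred, tgt))
print(ema_update([1.0, 0.0], [0.0, 1.0], momentum=0.9))
```

Predicting in embedding space lets the model ignore unpredictable pixel-level detail and concentrate on what matters for understanding and planning, which is the motivation usually given for JEPA-style training.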

Planning for a future of intelligent robots means thinking about how they might transform your industry, what it means for the future of work, and how it may change the relationship between humans and technology.

Leaders must consider the ethical issues of cognitive manufacturing, such as job disruption and displacement, accountability when things go wrong, and the use of surveillance technology when, for example, robots working alongside humans use cameras.

The cognitive industrial revolution, like the industrial revolutions before it, will transform almost every aspect of our world, and change will happen faster and sooner than most expect. Consider for a moment: what will it take for each of us and our organizations to be ready for this future?