A recent debate at Oxford University has convinced scientists that artificial intelligence deserves serious consideration. An AI took part in the debate and was asked for its views on the future and on whether the emergence of AI is ethical.

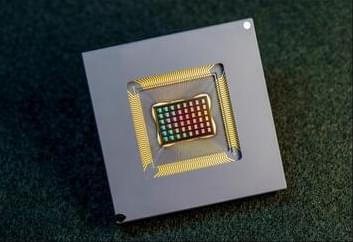

The AI that answered the questions is called Megatron and was created by a team at Nvidia. Megatron was trained on all of Wikipedia, 63 million English news articles, and 38 gigabytes of Reddit chat.

This information shaped the opinions it expressed. Human participants also took part in the discussion. When they said they did not believe AI would have an ethical future, Megatron responded in a way that terrified those present.