In recent years, roboticists and materials scientists worldwide have been trying to create artificial systems that resemble human body parts and reproduce their functions. These include artificial skins, which serve as protective layers and could also enhance the sensing capabilities of robots.

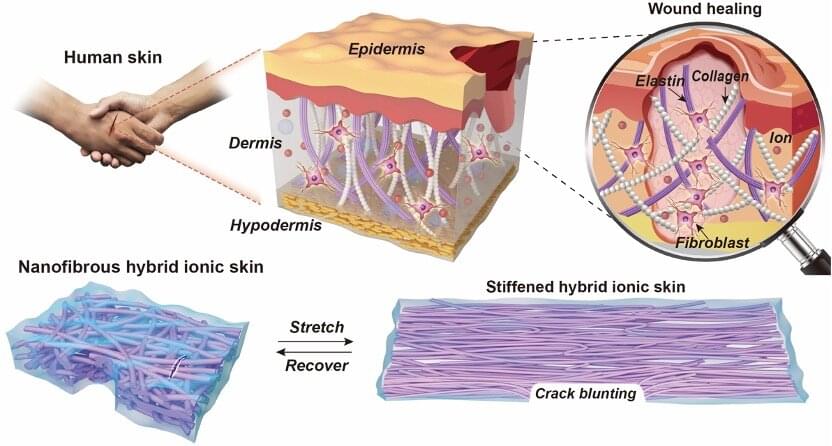

Researchers at Donghua University in China and the Jülich Centre for Neutron Science (JCNS) in Germany have recently developed a new and highly promising artificial ionic skin based on a self-healable elastic nanomesh, an interwoven structure that resembles human skin. This artificial skin, introduced in a paper published in Nature Communications, is soft, fatigue-free and self-healing.

“As we know, the skin is the largest organ in the human body, which acts as both a protective layer and sensory interface to keep our body healthy and perceptive,” Shengtong Sun, one of the researchers who carried out the study, told TechXplore. “With the rapid development of artificial intelligence and soft robotics, researchers are currently trying to coat humanoid robots with an ‘artificial skin’ that replicates all the mechanical and sensory properties of human skin, so that they can also perceive the ever-changing external environment like us.”