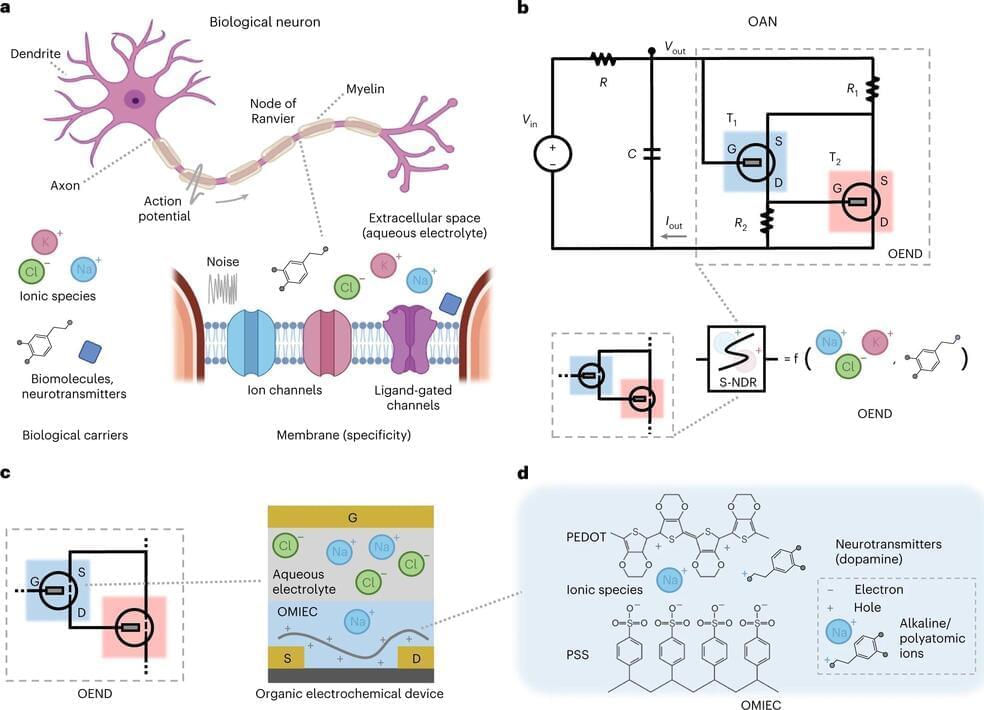

Artificial intelligence has long been a hot topic. A computer algorithm “learns” from examples that show it what is “right” and what is “wrong.” The human brain, by contrast, works with neurons, the cells of the brain. These are trained and pass signals on to other neurons. This complex network of neurons and their connecting pathways, the synapses, controls our thoughts and actions.

Biological signals are far more diverse than those in conventional computers. Neurons in a biological neural network communicate by means of ions, biomolecules and neurotransmitters. More specifically, they communicate either chemically, by releasing messenger substances such as neurotransmitters, or via electrical impulses, so-called “action potentials” or “spikes”.

Artificial neurons are an active area of research. Efficient communication between biology and electronics requires artificial neurons that realistically emulate the function of their biological counterparts, that is, artificial neurons capable of processing the diversity of signals that exist in biology. Until now, most artificial neurons have emulated their biological counterparts only electrically, without taking into account the wet biological environment of ions, biomolecules and neurotransmitters.