Have you ever been cut off while driving and found yourself swearing and laying on the horn? Or come home from a long day at work and lashed out at whoever left the dishes unwashed? From petty anger to the devastating violence we see in the news, acts of aggression can be difficult to comprehend. Research has yielded puzzling paradoxes about how rage works in the brain. But a new study from Caltech, pioneering the use of a machine-learning technique in the hypothalamus, reveals unexpected answers about the nature of aggression.

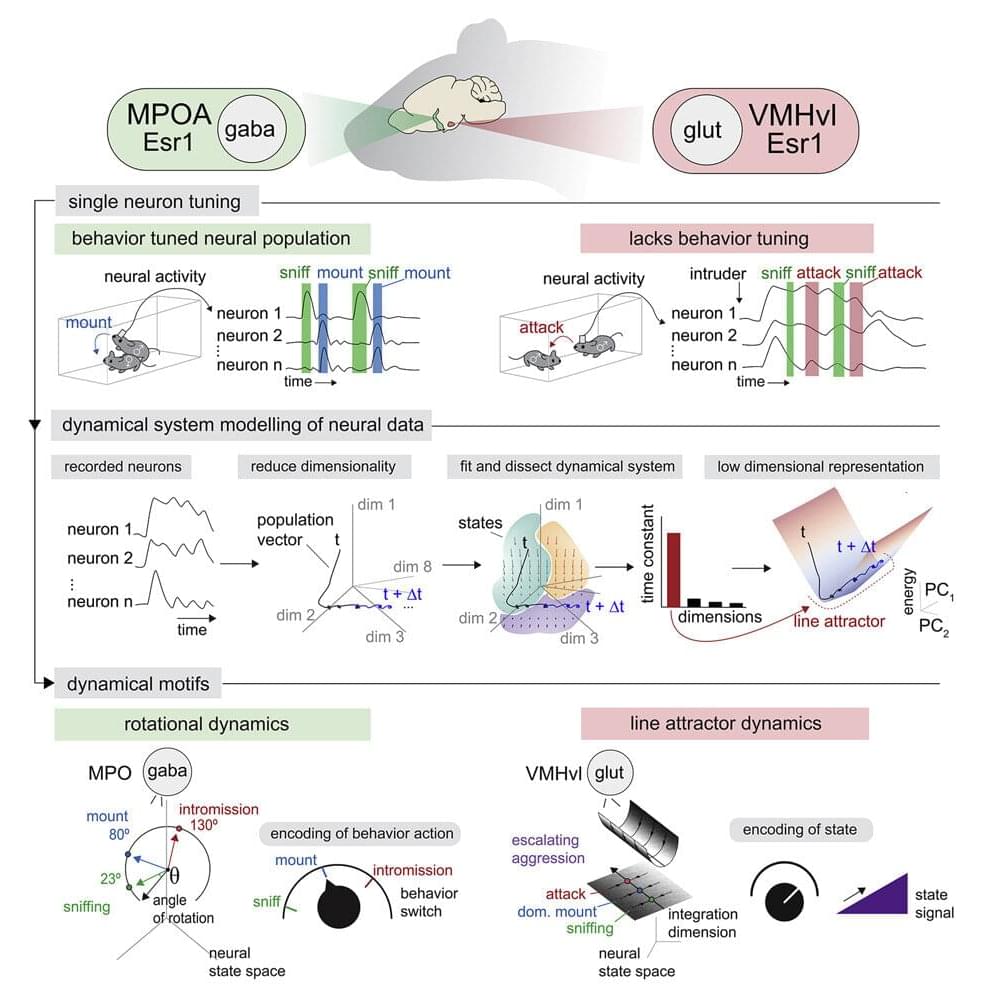

The hypothalamus is a brain region linked to many innate survival behaviors like mating, hunting, and the fight-or-flight response. Scientists have long believed that neurons in the hypothalamus are functionally specific: that is, particular groups of neurons correspond to particular behaviors. This seems to be the case in mating behavior, where neuron groups in the medial preoptic area (MPOA) of the hypothalamus, when stimulated, cause a male mouse to mount a female mouse. These same neurons are active when mounting behavior occurs naturally. The logical conclusion is that these neurons control mounting in mice.

But when researchers looked at the analogous neurons that control aggression in another part of the hypothalamus, the ventrolateral subdivision of the ventromedial hypothalamus (VMHvl), they found a different story. Stimulating these neurons could cause a male mouse to attack another male mouse, yet the same neurons did not show aggression-specific activity when observed in mice that were fighting naturally. This paradox indicated that something different was happening when it came to aggression.