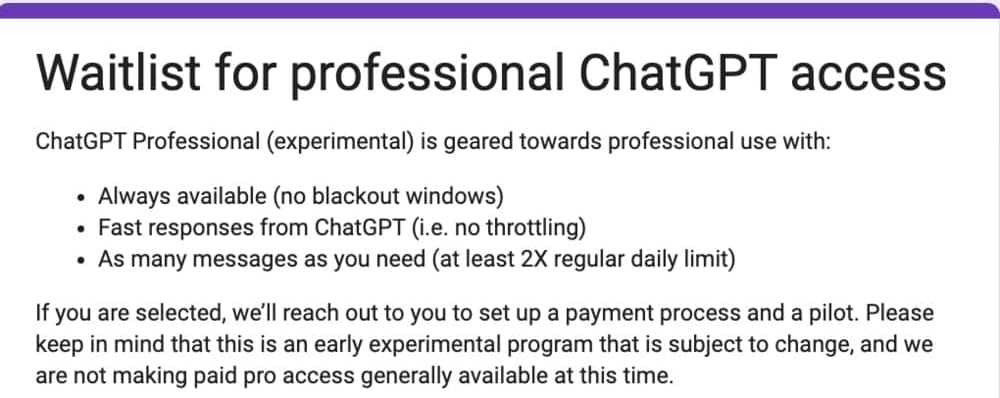

In the last week, I’ve been experimenting with the hot new version of ChatGPT to discover how it might conserve a leader’s scarcest resource: time. When OpenAI launched the AI chatbot at the end of November, it instantly attracted millions of users, along with breathless predictions of its potential to disrupt business models and jobs.

It certainly promises to deliver on a prediction I made in 2019 in my book The Human Edge, which explores the skills needed in a world of artificial intelligence and digitization. I forecasted: “…AI can offer us more free time by automating the stupid stuff we currently have to do, thereby reducing our cognitive burden.”

This new chatbot can help time-poor managers not only by writing emails and talking points, but also by handling complex tasks such as HR performance reviews.