Join Wilfred G. van der Wiel as he explores his research on brain-inspired nano systems in the first of our series of Dutch Science Lectures. Watch the Q&A here: https://youtu.be/kwPKe8tat8E

Subscribe for regular science videos: http://bit.ly/RiSubscRibe.

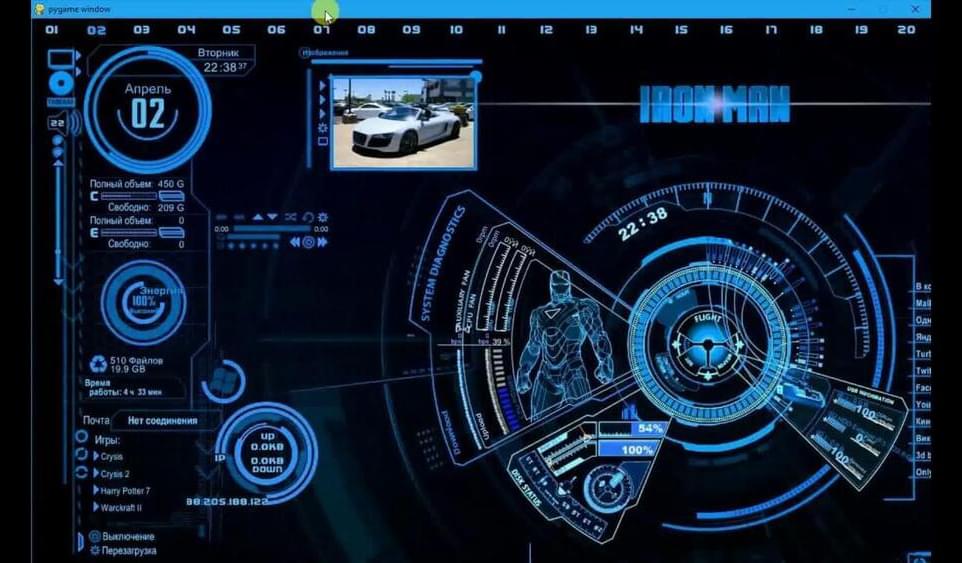

So far, intelligence has been the domain of living systems. Would it be possible to bring intelligence to the inanimate realm and use it for information processing? What would we gain from such an approach? Could we make more efficient computers? What would be the technological and societal implications?

This event is supported by the Embassy of the Kingdom of the Netherlands. The Netherlands and the United Kingdom share a long history of scientific exchange and collaboration. The Netherlands treasures and stimulates these excellent relations, especially in an era when complex societal challenges are not contained by borders, not even by the stretch of water between us as North Sea Neighbours. The solutions to these challenges demand international collaboration in research and an ongoing exchange of scientific ideas.

This event was recorded at the Ri on 3 October 2022.

Wilfred G. van der Wiel (Gouda, 1975) is a full professor of Nanoelectronics and director of the BRAINS Centre for Brain-Inspired Nano Systems at the University of Twente, the Netherlands. He holds a second professorship at the Institute of Physics of the Westfälische Wilhelms-Universität Münster, Germany. His research focuses on unconventional electronics for efficient information processing.