Generative AI represents a major breakthrough toward models that can make sense of the world by dreaming up visual, textual and conceptual representations, and that are becoming increasingly generalist. While these AI systems are currently based on scaling up deep learning algorithms with massive amounts of data and compute, biological systems seem to be able to make sense of the world using far fewer resources. This phenomenon of efficient intelligent self-organization still eludes AI research, creating an exciting new frontier for the next wave of developments in the field. Our panelists will explore the potential of incorporating principles of intelligent self-organization from biology and cybernetics into technical systems as a way to move closer to general intelligence. Join in on this exciting discussion about the future of AI and how we can move beyond traditional approaches like deep learning!

DeepMind’s AdA brings foundation models to reinforcement learning

DeepMind’s AdA shows that foundation models also enable generalist systems in reinforcement learning that learn new tasks quickly.

In AI research, the term foundation model is used by some scientists to refer to large pre-trained AI models, usually based on transformer architectures. One example is OpenAI’s large language model GPT-3, which is trained to predict text tokens and can then perform various tasks through prompt engineering in a few-shot setting.

In short, a foundation model is a large AI model that, because of its generalist training with large datasets, can later perform many tasks for which it was not explicitly trained.
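To make the few-shot setting concrete, here is a minimal sketch of how such a prompt might be assembled. It is an illustration only: the function name `build_few_shot_prompt`, the `Input:`/`Output:` labels and the translation examples are assumptions made for this sketch, not something taken from GPT-3 or from DeepMind’s AdA, and no model is actually called.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble a few-shot prompt: instruction, worked examples, then the new input.

    Hypothetical helper for illustration; the "Input:"/"Output:" labels are an
    assumed convention, not a format required by any particular model.
    """
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The last block is left open so a text-predicting model continues after "Output:".
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage"), ("house", "maison")],
    "book",
)
print(prompt)
```

The task is never trained explicitly; the two worked examples in the prompt are the only task-specific signal, and a model trained to predict the next token is expected to continue the pattern.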

Researcher uses AI to make texts that are thousands of years old readable

How should we live when we know we must die? This question is posed by the first work of world literature, the Gilgamesh epic. More than 4,000 years ago, Gilgamesh set out on a quest for immortality. Like all Babylonian literature, the saga has survived only in fragments. Nevertheless, scholars have managed to bring two-thirds of the text into readable condition since it was rediscovered in the 19th century.

The Babylonians wrote in cuneiform characters on clay tablets, which have survived in the form of countless fragments. Over centuries, scholars transferred the characters imprinted on the pieces of clay onto paper. Then they would painstakingly compare their transcripts and—in the best case—recognize which fragments belong together and fill in the gaps. The texts were written in the languages Sumerian and Akkadian, which have complicated writing systems. This was a Sisyphean task, one that the experts in the Electronic Babylonian Literature project can scarcely imagine today.

Enrique Jiménez, Professor of Ancient Near Eastern Literatures at LMU’s Institute of Assyriology, and his team have been working on the digitization of all surviving cuneiform tablets since 2018. In that time, the project has processed as many as 22,000 text fragments.

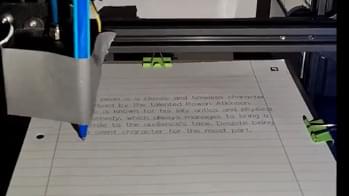

This genius student uses the power of AI and a 3D printer to ‘handwrite’ their homework

As technology advances, you can always count on one thing: students will use it to avoid doing homework. One industrious student not only got an AI chatbot to do their homework assignment, but also rigged it to a 3D printer to write it out with pen and paper, expending the maximum amount of effort required to do the minimum amount of homework. Bravo!

TikTok user 3D_printer_stuff shared a series of videos on how they programmed a 3D printer to produce homework with the answers that ChatGPT wrote.

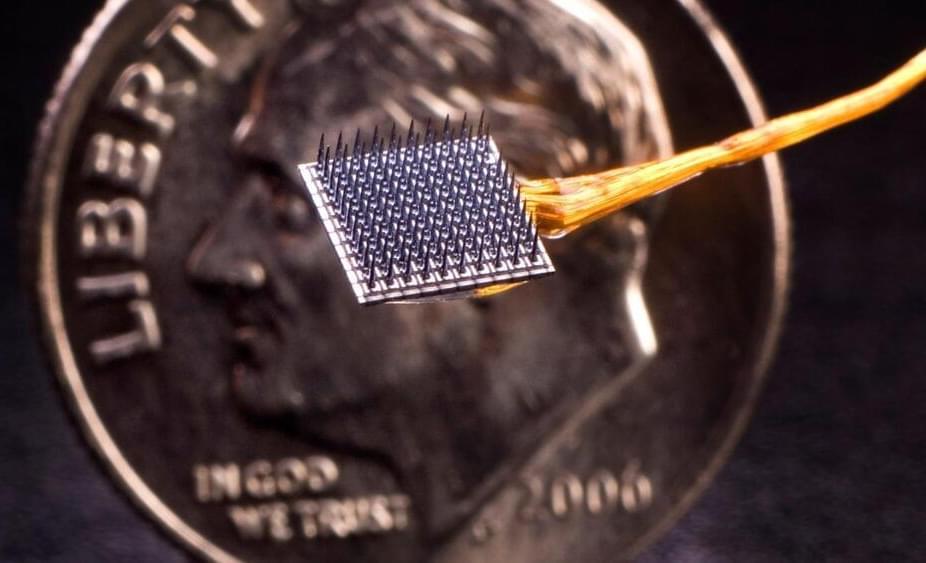

Clinical trials show encouraging safety profile for brain-computer interface turning thoughts into action

PROVIDENCE, R.I. [Brown University] — More than two decades ago, a team of Brown University researchers set out with an ambitious goal to provide people with paralysis a revolutionary neurotechnology capable of turning thoughts about movement into actual action, using a tiny device that would one day be implanted in the surface of the brain. Their work led to an ongoing, multi-institution effort to create the BrainGate brain-computer interface, designed to allow clinical trial participants with paralysis to control assistive devices like computers or robotic limbs just by thinking about the action they want to initiate.

Open Access Paper:

https://n.neurology.org/content/early/2023/01/13/WNL.

In an important step toward a medical technology that could help restore the independence of people with paralysis, researchers find the investigational BrainGate neural interface system has low rates of associated adverse events.

Top 10 AI Tools Like ChatGPT You Must Try in 2023

Ten AI tools you must try in 2023. If you have used ChatGPT, seen how powerful it is, and think it’s time to check out more artificial intelligence tools for business or life, here are some awesome ones!

Check out Codedamn to learn some programming below! They can help you to code and get your first job in the field too!

https://cdm.sh/adrian

00:00 — Introduction.

00:07 — MidJourney AI Art.

01:24 — Adobe Podcast AI Voice.

02:18 — Nvidia Broadcast — AI Video Geforce.

03:10 — Codedamn — With ChatGPT Support.

04:19 — Descript — AI Video.

05:12 — Notion AI — AI Text Generation.

06:26 — Synthesia — AI Avatar.

07:00 — Resemble AI — Voice AI

07:39 — Soundraw AI — AI Music and Sound.

08:13 — Futurepedia — AI Tools Database.

#ai #tools #chatgpt.

Learn Design for Developers!

A book I’ve created to help you improve the look of your apps and websites.

📘 Enhance UI: https://www.enhanceui.com/

This NEW A.I. Animation Software Is INSANE

The first 1,000 people to use the link will get a 1 month free trial of Skillshare: https://skl.sh/smeaf01231

A.I. is here to stay, whether you like it or not.

Instead of fighting against it, let’s adapt and start utilizing it within our workflows!

Cascadeur recently released its software to the public for free, and the A.I.-assisted tools are absolutely unreal.

Grab Cascadeur for free below.

https://cascadeur.com/download

Future World: A Million Years Later — Artificial Intelligence Tech That Will Change The Universe

https://youtube.com/watch?v=V8cPdjO3a_U&feature=share

Find out what the world will be like a million years from now, as well as what kind of technology we’ll have available.

► All-New Echo Dot (5th Generation) | Smart Speaker with Clock and Alexa | Cloud Blue: https://amzn.to/3ISUX1u

► Brilliant: Interactive Science And Math Learning: https://bit.ly/JasperAITechUniNet

Timestamps:

0:00 No Physical Bodies.

1:51 Wormhole Creation.

2:44 Travel At Speed Of Light.

3:21 Type 3 Civilization.

4:52 Gravitational Waves.

5:46 Computers the Size of Planets.

6:56 Computronium.

I explain the following ideas on this channel:

* Technology trends, both current and anticipated.

* Popular business technology.

* The Impact of Artificial Intelligence.

* Innovation In Space and New Scientific Discoveries.

* Entrepreneurial and Business Innovation.

Subscribe link.

https://www.youtube.com/channel/UCpaciBakZZlS3mbn9bHqTEw

Disclaimer:

Some of the links contained in this description are affiliate links.

As an Amazon Associate, I get commissions on orders that qualify.

This video describes the world and its technologies in a million years.

Google’s ChatGPT rival to be released in coming ‘weeks and months’

“We are just at the beginning of our AI journey, and the best is yet to come,” said Google CEO Sundar Pichai.

Search engine giant Google is looking to make its artificial intelligence (A.I.)-based large language models available as a “companion to search,” CEO Sundar Pichai said during an earnings report on Thursday, according to Bloomberg.

A large language model (LLM) is a deep learning algorithm that can recognize and summarize content from massive datasets and use it to predict or generate text. OpenAI’s GPT-3 is one such LLM, and a fine-tuned successor from the same family, GPT-3.5, powers the hugely popular chatbot ChatGPT.
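The idea of using patterns in a dataset to predict or generate text can be sketched at toy scale. The example below is a deliberately simplified stand-in and not how GPT-3 works: it replaces the deep neural network with plain bigram counts, and the corpus and the function names (`train_bigram_counts`, `predict_next`, `generate`) are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigram_counts(tokens: List[str]) -> Dict[str, Counter]:
    """Count how often each token is followed by each other token."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts: Dict[str, Counter], token: str) -> Optional[str]:
    """Return the most frequent follower of `token`, or None if it was never followed."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts: Dict[str, Counter], start: str, max_tokens: int) -> List[str]:
    """Greedily extend `start` one predicted token at a time, up to `max_tokens` tokens."""
    output = [start]
    while len(output) < max_tokens:
        next_token = predict_next(counts, output[-1])
        if next_token is None:
            break
        output.append(next_token)
    return output


# Toy corpus (an assumption for this sketch); real LLMs train on vastly more text.
corpus = "the cat sat on the mat and the cat slept".split()
counts = train_bigram_counts(corpus)
print(predict_next(counts, "the"))
print(generate(counts, "on", 3))
```

A real LLM conditions on a long context rather than a single preceding token and learns its probabilities with a neural network, but the loop of predicting a token, appending it and predicting again is the same basic shape.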

Study claims ChatGPT not a threat but potential electronic assistant

The results highlight some potential strengths and weaknesses of ChatGPT.

Some of the world’s biggest academic journal publishers have banned or restricted their authors’ use of the advanced chatbot ChatGPT. Because the bot uses information from the internet to produce highly readable answers to questions, the publishers are worried that inaccurate or plagiarised work could enter the pages of academic literature.

Several researchers have already listed the chatbot as a co-author in academic studies, and some publishers have moved to ban this practice.

It’s not surprising the use of such chatbots is of interest to academic publishers. Our recent study, published in Finance Research Letters, showed ChatGPT could be used to write a finance paper that would be accepted for an academic journal. Although the bot performed better in some areas than in others, adding in our own expertise helped overcome the program’s limitations in the eyes of journal reviewers.