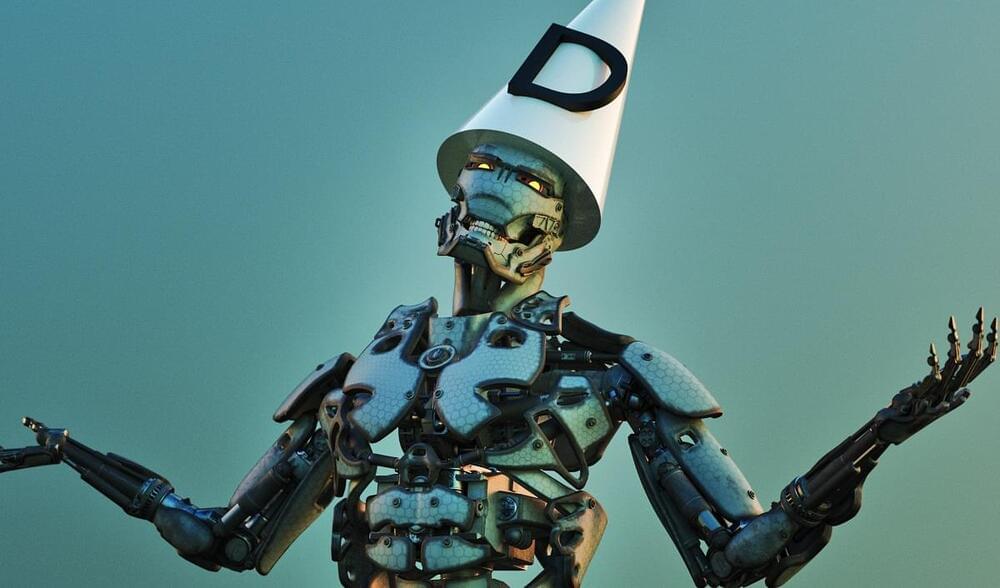

Boston Dynamics has trained its Atlas robot to develop a new set of skills.

In this video, the humanoid robot manipulates the world around it – interacting with objects and modifying the course to reach its goal – pushing the limits of locomotion, sensing, and athleticism.

“We’re not just thinking about how to make the robot move dynamically through its environment, like we did in Parkour and Dance,” said Scott Kuindersma, the company’s team leader of research on Atlas. “Now, we’re starting to put Atlas to work and think about how the robot should be able to perceive and manipulate objects in its environment.”